What is data compression? Coding with Shannon-Fano trees. Moving and copying compressed files and folders

Lecture 4. Information compression

Principles of information compression

The purpose of data compression is to provide a compact representation of the data produced by a source, so that they can be stored and transmitted over communication channels more economically.

Suppose we have a file of 1 (one) megabyte, and we need a smaller file from it. Nothing complicated: we run an archiver, for example WinZip, and get, say, a 600-kilobyte file. Where did the remaining 424 kilobytes go?

Information compression is one form of coding. In general, codes are divided into three large groups: compression codes (efficient codes), noise-resistant (error-correcting) codes, and cryptographic codes. Codes designed to compress information are in turn divided into lossless codes and lossy codes. Lossless coding guarantees absolutely exact recovery of the data after decoding and can be used to compress any information. Lossy coding usually achieves a much higher degree of compression than lossless coding, but allows the decoded data to deviate somewhat from the source.

Types of compression

All information compression methods can be divided into two large non-overlapping classes: compression with loss of information and compression without loss of information.

Compression without loss of information.

These compression methods interest us first of all, since they are the ones used to transfer text documents and programs, to deliver completed work to a customer, and to create backup copies of information stored on a computer.

Methods of this class must not lose any information, so they rely solely on eliminating its redundancy, and information almost always has redundancy (unless someone has already compressed it). If there were no redundancy, there would be nothing to compress.

Here is a simple example. The Russian alphabet has 33 letters; add ten digits and about fifteen punctuation marks and other special characters. For text written only in capital Russian letters (as in telegrams and radiograms), about sixty distinct values would be enough. However, each character is usually encoded as a byte, which contains 8 bits and can express 256 different codes. This is the first source of redundancy: for our "telegraph" text, six bits per character would be enough.
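The arithmetic behind the "six bits" can be checked directly: the width of a fixed-length code is the number of bits needed to give every symbol its own code. A minimal sketch; the function name and the 1000-character message length are hypothetical.

```python
def bits_per_symbol(alphabet_size: int) -> int:
    """Smallest fixed code width (in bits) that gives every symbol its own code."""
    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 1, computed exactly with integers
    return (alphabet_size - 1).bit_length()


message_length = 1000  # hypothetical number of characters in a "telegraph" text
width = bits_per_symbol(60)  # about 60 distinct characters, as estimated above
print(width)  # 6
print(message_length * 8, "bits with one byte per character")  # 8000 bits ...
print(message_length * width, "bits with a 6-bit code")  # 6000 bits ...
print(f"saving: {1 - width / 8:.0%}")  # saving: 25%
```

Since 2^6 = 64 is at least 60, six bits suffice, and dropping two of every eight bits saves a quarter of the volume.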

Here is another example. The international ASCII character encoding gives every character the same number of bits (8), although it has long been well known that the most frequent characters should be encoded with fewer signs. In Morse code, for example, the frequent letters "E" and "T" are each encoded with a single sign (a dot and a dash, respectively), while rare letters such as "Yu" (..--) and "C" (-.-.) are encoded with four signs. Inefficient encoding is the second source of redundancy. Compression programs can introduce their own encoding (different for different files) and attach a table (dictionary) to the compressed file, from which the unpacking program learns how particular characters or groups of characters are encoded in this file. Algorithms based on such re-encoding of information are called Huffman algorithms.

The presence of repeated fragments is the third source of redundancy. It is rare in text, but in tables and graphics repeated codes are common. For example, if the number 0 is repeated twenty times in a row, there is no point in storing twenty zero bytes. Instead, one stores a single zero and the count 20. Algorithms based on detecting such repetitions are called RLE (Run-Length Encoding) methods.
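A minimal sketch of the idea, assuming the data are any Python sequence and the packed form is a list of (count, value) pairs; the function names are hypothetical.

```python
from itertools import groupby


def rle_pairs(data):
    """Replace each run of equal items with a (count, value) pair."""
    return [(len(list(run)), value) for value, run in groupby(data)]


def rle_expand(pairs):
    """Inverse of rle_pairs: repeat each value `count` times."""
    return [value for count, value in pairs for _ in range(count)]


row = [0] * 20 + [7, 7, 3]
packed = rle_pairs(row)
print(packed)  # [(20, 0), (2, 7), (1, 3)]
assert rle_expand(packed) == row
```

The twenty zeros shrink to one pair, but the lone 3 now costs a pair instead of a single item, which is why practical RLE variants treat short runs differently.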

Long runs of identical bytes are especially typical of graphic illustrations: not photographs (they contain a lot of noise, and neighboring points differ significantly), but pictures that artists paint with "flat" colors, as in animated films.

Compression with loss of information.

Compression with loss of information means that after unpacking the compressed archive we get a document that differs somewhat from the original. Clearly, the greater the degree of compression, the greater the loss, and vice versa.

Of course, such algorithms are not applicable to text documents, database tables, and especially programs. Minor distortions in simple unformatted text can somehow be tolerated, but distorting even a single bit of a program can make it completely inoperable.

At the same time, there are materials for which it is worth sacrificing a few percent of the information to get compression by a factor of tens. These include photographs, video, and music. The loss of information during compression and subsequent unpacking of such materials is perceived as some additional "noise". Since some "noise" is present in these materials from the moment they are created anyway, a slight increase in it does not always look critical, while the gain in size is huge (10-15 times for music, 20-30 times for photos and video).

Well-known lossy compression algorithms include JPEG and MPEG. The JPEG algorithm is used to compress photographs; graphic files compressed this way have the .jpg extension. MPEG algorithms are used to compress video and music. These files may have different extensions depending on the specific program, but the best known are .mpg for video and .mp3 for music.

Lossy compression algorithms are used only for consumer tasks. This means, for example, that they can be applied when a photo is transmitted for viewing or music for playback. If the material is transmitted for further processing, for example for editing, no loss of information in the source material is acceptable.

The amount of loss allowed during compression can usually be controlled. This lets you experiment and find the best size/quality ratio. For photographs intended for display on screen, a loss of 5% of the information is usually not critical, and in some cases 20-25% can be allowed.

Compression algorithms without loss of information

Shannon-Fano code

For further reasoning it will be convenient to treat our original text file as a source of characters that appear one by one at its output. We do not know in advance which character will come next, but we know that the letter "A" will appear with probability p1, the letter "B" with probability p2, and so on.

In the simplest case, we will consider all characters of the text independent of each other, i.e. the probability of the next character does not depend on the value of the previous one. Of course, this is not true for meaningful text, but for now we are considering a very simplified situation. In this case the statement "the less likely a character is to appear, the more information it carries" holds.

Let us imagine a text whose alphabet consists of only 16 letters of the Russian alphabet: A, B, V, G, D, E, Zh, Z, I, K, L, M, N, O, P, R. Each of these characters can be encoded with only 4 bits: from 0000 to 1111. Now imagine that the probabilities of these characters are distributed as follows:

The sum of these probabilities is, naturally, one. We divide the characters into two groups so that the total probability of each group is about 0.5 (Fig.). In our example these are the groups A-V and G-R. The circles in the figure that denote groups of characters are called vertices or nodes, and the structure built from these nodes is a binary tree. We assign a code to each node, labeling one node 0 and the other 1.

We again split the first group (A-V) into two subgroups whose total probabilities are as close to each other as possible. We append 0 to the code of the first subgroup and 1 to the code of the second.

We repeat this operation until each vertex of our "tree" holds a single character. The full tree for our alphabet will have 31 nodes (16 leaves plus 15 internal nodes).

The character codes (the rightmost nodes, or leaves, of the tree) have unequal lengths. Thus the letter A, which has probability p = 0.2 in our imaginary text, is encoded with only two bits, while the letter P (not shown in the figure), which has probability p = 0.013, is encoded with a six-bit combination.

So the principle is obvious: frequent characters are encoded with fewer bits, rare ones with more. As a result, the average number of bits per character is

$$\bar{N} = \sum_{i=1}^{n} N_i \, p_i$$

where N_i is the number of bits encoding the i-th character and p_i is the probability of the i-th character.
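The splitting procedure and the average-length formula can be sketched as follows. Since the lecture's probability table is not available, the five-letter alphabet and its probabilities are hypothetical, and the function names are illustrative only. At each step the sketch cuts a probability-sorted group where the two halves' totals are closest.

```python
def shannon_fano(probs: dict[str, float]) -> dict[str, str]:
    """Build Shannon-Fano codes by recursively splitting a probability-sorted list."""
    symbols = sorted(probs, key=probs.get, reverse=True)
    if len(symbols) == 1:
        return {symbols[0]: "0"}
    codes = {s: "" for s in symbols}

    def split(group: list[str]) -> None:
        if len(group) < 2:
            return
        total = sum(probs[s] for s in group)
        # find the cut where the upper part's probability is closest to total / 2
        best_cut, best_diff, upper = 1, float("inf"), 0.0
        for cut in range(1, len(group)):
            upper += probs[group[cut - 1]]
            diff = abs(total - 2 * upper)
            if diff < best_diff:
                best_cut, best_diff = cut, diff
        for s in group[:best_cut]:
            codes[s] += "0"  # first subgroup gets 0
        for s in group[best_cut:]:
            codes[s] += "1"  # second subgroup gets 1
        split(group[:best_cut])
        split(group[best_cut:])

    split(symbols)
    return codes


def average_length(probs, codes):
    """Average number of bits per character: sum of N_i * p_i."""
    return sum(len(codes[s]) * p for s, p in probs.items())


probs = {"A": 0.35, "B": 0.17, "C": 0.17, "D": 0.16, "E": 0.15}  # hypothetical alphabet
codes = shannon_fano(probs)
print(codes)  # {'A': '00', 'B': '01', 'C': '10', 'D': '110', 'E': '111'}
print(round(average_length(probs, codes), 2))  # 2.31
```

A fixed-length code for five characters needs 3 bits, so the variable-length code saves about 0.7 bits per character here.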

Huffman code.

The Huffman algorithm elegantly implements the general idea of statistical coding with prefix codes and works as follows:

1. Write out all characters of the alphabet in increasing or decreasing order of their probability of appearing in the text.

2. Repeatedly combine the two characters with the smallest probabilities into a new composite character whose probability is the sum of the probabilities of its components. In the end we obtain a tree in which each node holds the total probability of all nodes below it.

3. Trace the path to each leaf of the tree, marking the direction at each node (for example, right = 1, left = 0). The resulting sequence is the code word for the corresponding character (Fig.).
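The three steps above can be sketched with a priority queue that always yields the two least probable nodes. The alphabet and probabilities below are hypothetical, and the labeling "first popped node gets 0" is one arbitrary choice among equivalent ones.

```python
import heapq
from itertools import count


def huffman(probs: dict[str, float]) -> dict[str, str]:
    """Build Huffman codes by repeatedly merging the two least probable nodes."""
    if len(probs) == 1:
        return {s: "0" for s in probs}
    tiebreak = count()  # keeps heap entries comparable when probabilities are equal
    heap = [(p, next(tiebreak), {s: ""}) for s, p in probs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        p_low, _, low = heapq.heappop(heap)  # least probable node -> branch 0
        p_next, _, nxt = heapq.heappop(heap)  # second least probable -> branch 1
        merged = {s: "0" + c for s, c in low.items()}
        merged.update({s: "1" + c for s, c in nxt.items()})
        heapq.heappush(heap, (p_low + p_next, next(tiebreak), merged))
    return heap[0][2]


probs = {"A": 0.35, "B": 0.17, "C": 0.17, "D": 0.16, "E": 0.15}  # hypothetical alphabet
codes = huffman(probs)
print(codes)  # {'A': '0', 'E': '100', 'D': '101', 'B': '110', 'C': '111'}
avg = sum(len(codes[s]) * p for s, p in probs.items())
print(round(avg, 2))  # 2.3
```

Each code word is built by prepending a bit every time its subtree is merged, which is the same as reading the path from the root to the leaf.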

Build a code tree for a message with the following alphabet:

Disadvantages of these methods

The greatest difficulty with these codes, as follows from the discussion above, is the need for a probability table for each type of data being compressed. This is not a problem if we know that English or Russian text is being compressed: we simply give the encoder and decoder a code tree suitable for English or Russian text. In general, however, when the character probabilities of the input data are unknown, static Huffman codes work inefficiently.

The solution to this problem is a statistical analysis of the data to be encoded, performed in a first pass over the data, and the construction of a code tree based on it. The actual encoding is performed in a second pass.

Another drawback of these codes is that a code word cannot be shorter than one bit, while the entropy of a message may well be 0.1 or even 0.01 bits per letter. In that case the code becomes significantly redundant. The problem is solved by applying the algorithm to blocks of characters, but then encoding and decoding become more complex and the code tree grows significantly; it must ultimately be stored along with the code.
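The gap between the entropy and the one-bit minimum can be computed directly. A small sketch, assuming a hypothetical two-letter source in which one letter appears 99% of the time.

```python
import math


def entropy(probs) -> float:
    """Shannon entropy in bits per symbol: H = -sum(p * log2 p)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


skewed = [0.99, 0.01]  # hypothetical two-letter source
h = entropy(skewed)
print(f"entropy: {h:.4f} bits/letter")  # entropy: 0.0808 bits/letter
print(f"a letter-by-letter code needs at least 1 bit/letter, about {1 / h:.0f}x more")
```

Coding pairs of letters as single symbols (probabilities 0.9801, 0.0099, 0.0099, 0.0001) lets a Huffman code reach about 1.03 bits per pair, roughly 0.515 bits per letter: better, but at the cost of a larger code tree, as the text notes.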

These codes also ignore the dependencies between characters that are present in almost any text. For example, if we encounter the letter Q in English text, we can say with confidence that the letter U will follow it.

Group coding, or Run-Length Encoding (RLE), is one of the oldest and simplest archiving algorithms. RLE compresses data by replacing runs of identical bytes with "counter, value" pairs ("red, red, ..., red" is written as "N red").

One implementation of the algorithm works as follows: find the least frequently occurring byte, call it the prefix, and replace runs of identical characters with triples "prefix, counter, value". If the prefix byte itself occurs in the source file once or twice in a row, it is replaced by the pair "prefix, 1" or "prefix, 2". This leaves one unused pair, "prefix, 0", which can serve as an end-of-data marker.
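A sketch of this prefix variant, assuming byte-oriented input, a one-byte header that stores the chosen prefix, runs capped at 255 so the counter fits in a byte, and runs of three or more encoded as triples. These details and the function names are assumptions for illustration, not a specific file format.

```python
from collections import Counter


def rle_encode(data: bytes) -> bytes:
    """Prefix-based RLE: runs become (prefix, count, value); the prefix byte itself is escaped."""
    counts = Counter(data)
    prefix = min(range(256), key=lambda b: counts[b])  # least frequent (ideally unused) byte
    out = bytearray([prefix])  # header: tell the decoder which byte is the prefix
    i = 0
    while i < len(data):
        b, run = data[i], 1
        while i + run < len(data) and data[i + run] == b and run < 255:
            run += 1  # the counter must fit in one byte
        if run >= 3:
            out += bytes([prefix, run, b])  # triple "prefix, counter, value"
        elif b == prefix:
            out += bytes([prefix, run])  # pair "prefix, 1" or "prefix, 2"
        else:
            out += bytes([b]) * run  # short runs of ordinary bytes are copied as-is
        i += run
    out += bytes([prefix, 0])  # the otherwise unused pair "prefix, 0" marks the end
    return bytes(out)


def rle_decode(packed: bytes) -> bytes:
    prefix, out, i = packed[0], bytearray(), 1
    while True:
        b = packed[i]
        if b != prefix:
            out.append(b)
            i += 1
            continue
        count = packed[i + 1]
        if count == 0:  # end-of-data marker
            break
        if count <= 2:
            out += bytes([prefix]) * count
            i += 2
        else:
            out += bytes([packed[i + 2]]) * count
            i += 3
    return bytes(out)


sample = b"A" * 10 + b"Bx" + b"C" * 4 + bytes(300)
packed = rle_encode(sample)
print(len(sample), len(packed))  # 316 17
assert rle_decode(packed) == sample
```

Choosing the rarest byte as the prefix keeps the escaping overhead small; for typical data it is a byte that never occurs at all.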

When encoding EXE files, one can search for and pack sequences of the form AxAyAzAwAt..., which often occur in resources (strings in Unicode encoding).

The advantages of the algorithm are that it needs no additional memory while working and runs fast. The algorithm is used in the PCX, TIFF, and BMP formats. An interesting feature of group coding in PCX is that the compression ratio for some images can be increased significantly just by changing the order of colors in the image palette.

The LZW code (Lempel-Ziv-Welch) is today one of the most common lossless compression codes. LZW provides the compression in graphics formats such as TIFF and GIF, and modifications of LZW are used in very many general-purpose archivers. The algorithm works by searching the input file for repeating character sequences, which are encoded as codes 8 to 12 bits long. Therefore the algorithm is most effective on text files and on graphics files that contain large single-color areas or repeating sequences of pixels.
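A minimal sketch of LZW on bytes. For simplicity it assumes an unbounded dictionary and returns plain integers; real GIF and TIFF encoders pack the codes into 9 to 12 bits and reset the table when it fills, which this sketch omits. Function names are hypothetical.

```python
def lzw_compress(data: bytes) -> list[int]:
    """Emit one code per longest already-known sequence, learning a new sequence each time."""
    table = {bytes([i]): i for i in range(256)}  # start with all single bytes
    current = b""
    codes = []
    for byte in data:
        extended = current + bytes([byte])
        if extended in table:
            current = extended  # keep growing the match
        else:
            codes.append(table[current])
            table[extended] = len(table)  # remember the new sequence
            current = bytes([byte])
    if current:
        codes.append(table[current])
    return codes


def lzw_decompress(codes: list[int]) -> bytes:
    """Rebuild the same table on the fly, so it never has to be stored."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    previous = table[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            entry = previous + previous[:1]  # code defined in this very step
        else:
            raise ValueError(f"invalid LZW code {code}")
        out.append(entry)
        table[len(table)] = previous + entry[:1]
        previous = entry
    return b"".join(out)


text = b"TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_compress(text)
print(len(text), len(codes))  # 24 16
assert lzw_decompress(codes) == text
```

Unlike Huffman coding, no table is transmitted: the decoder reconstructs the dictionary from the codes it has already read.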

Because LZW coding loses no information, the TIFF format that relies on it became widely used. This format imposes no restrictions on image size or color depth and is widespread, for example, in printing. Another LZW-based format, GIF, is more primitive: it can store images with a color depth of no more than 8 bits per pixel. A GIF file begins with a palette, a table that maps a color index (a number from 0 to 255) to the true 24-bit color value.
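The palette idea can be sketched as splitting an image into a color table and one small index per pixel. This assumes pixels are (r, g, b) tuples and simply refuses images with more than 256 distinct colors instead of quantizing them; the function names are hypothetical.

```python
def to_indexed(pixels):
    """Split (r, g, b) pixels into a palette of at most 256 colours plus one index per pixel."""
    palette, index_of, indices = [], {}, []
    for rgb in pixels:
        if rgb not in index_of:
            if len(palette) == 256:  # an 8-bit index cannot address more colours
                raise ValueError("more than 256 colours: the image must be quantized first")
            index_of[rgb] = len(palette)
            palette.append(rgb)
        indices.append(index_of[rgb])
    return palette, indices


def from_indexed(palette, indices):
    """Look every index up in the palette to get the true 24-bit colours back."""
    return [palette[i] for i in indices]


pixels = [(255, 0, 0), (255, 0, 0), (0, 0, 255), (255, 0, 0)]
palette, indices = to_indexed(pixels)
print(palette)  # [(255, 0, 0), (0, 0, 255)]
print(indices)  # [0, 0, 1, 0]
```

Each pixel now costs one byte instead of three, plus the palette stored once; the index stream is what LZW then compresses.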

Compression algorithms with information loss

The JPEG algorithm was developed by a group of companies called the Joint Photographic Experts Group. The goal of the project was to create a highly efficient compression standard for both black-and-white and color images, and the developers achieved this goal. Today JPEG is most widely used where a high compression ratio is required, for example on the Internet.

Unlike LZW, JPEG encoding is lossy. The encoding algorithm is based on rather complex mathematics, but in general terms it can be described as follows: the image is divided into 8×8-pixel squares, so each block holds 64 pixels. Each such block then undergoes the so-called DCT (discrete cosine transform), a variety of the discrete Fourier transform. Its essence is that the input pixels can be represented as a sum of cosine components with multiple frequencies (the so-called harmonics). We then only need to know the amplitudes of these components to restore the input pixels with sufficient accuracy. The more harmonic components we keep, the smaller the discrepancy between the original and the compressed image. Most JPEG encoders let you adjust the compression ratio, and this is achieved very simply: the higher the compression ratio, the fewer harmonics are used to represent each 64-pixel block.
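A pure-Python sketch of the transform on one 8×8 block, assuming the orthonormal DCT-II. Zeroing every harmonic with u + v >= k is a crude, hypothetical stand-in for JPEG's real quantization tables, zigzag ordering, and entropy coding, which are not shown.

```python
import math

N = 8  # JPEG block size


def _scale(k: int) -> float:
    # orthonormal DCT-II scaling factors
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def _basis(pos: int, freq: int) -> float:
    return math.cos((2 * pos + 1) * freq * math.pi / (2 * N))


def dct2(block):
    """2-D DCT-II of an 8x8 block: coeffs[u][v] is the amplitude of harmonic (u, v)."""
    return [[_scale(u) * _scale(v) * sum(block[x][y] * _basis(x, u) * _basis(y, v)
                                         for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs):
    """Inverse transform: rebuild the pixels as a sum of cosine harmonics."""
    return [[sum(_scale(u) * _scale(v) * coeffs[u][v] * _basis(x, u) * _basis(y, v)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def keep_low(coeffs, k):
    """Crude stand-in for quantization: zero every harmonic with u + v >= k."""
    return [[coeffs[u][v] if u + v < k else 0.0 for v in range(N)] for u in range(N)]


def rms_error(a, b):
    return math.sqrt(sum((a[i][j] - b[i][j]) ** 2 for i in range(N) for j in range(N)) / N ** 2)


block = [[16 * x + 8 * y for y in range(N)] for x in range(N)]  # hypothetical smooth gradient
coeffs = dct2(block)
for k in (2, 4, 15):  # u + v ranges from 0 to 14, so k = 15 keeps all 64 harmonics
    kept = sum(1 for u in range(N) for v in range(N) if u + v < k)
    print(k, kept, round(rms_error(block, idct2(keep_low(coeffs, k))), 3))
```

Because the transform is orthonormal, the squared error equals the energy of the discarded harmonics, so keeping more of them can only reduce the error.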

The strength of this kind of coding is, of course, a high compression ratio while preserving the original color depth. It is this property that led to its wide use on the Internet, where reducing file size is of paramount importance, and in multimedia encyclopedias, where as much graphics as possible must be stored in a limited volume.

The drawback of this format is an inherent degradation of image quality that cannot be eliminated by any means. It is this unfortunate fact that prevents its use in printing, where quality comes first.

However, JPEG is not the limit of perfection in reducing the size of the resulting file. Recently, intensive research has been under way on the so-called wavelet transform. Based on sophisticated mathematics, wavelet encoders achieve higher compression than JPEG with smaller information losses. Despite the complexity of the underlying mathematics, a software implementation of the wavelet transform is simpler than that of JPEG. Although wavelet compression algorithms are still at an early stage of development, they have a great future ahead.

Fractal compression

Fractal image compression is a lossy image compression algorithm based on applying iterated function systems (IFS, usually made up of affine transformations) to images. The algorithm is notable because in some cases it achieves very high compression ratios (the best examples reach up to 1000 times with acceptable visual quality) for real photographs of natural objects, which other image compression algorithms cannot achieve in principle. Because of the complicated patent situation, the algorithm has not become widespread.

Fractal archiving is based on the fact that, using the coefficients of a system of iterated functions, the image can be represented in a more compact form. Before considering the archiving process, let us look at how an IFS builds an image.

Strictly speaking, an IFS is a set of three-dimensional affine transformations that map one image onto another. The transformations act on points in three-dimensional space (x coordinate, y coordinate, brightness).

The fractal coding method is based on detecting self-similar regions in an image. The possibility of applying the theory of iterated function systems (IFS) to image compression was first studied by Michael Barnsley and Alan Sloan, who patented their idea in 1990 and 1991. Jacquin introduced a fractal coding method that uses domain and range subimage blocks, blocks that cover the entire image. This approach became the basis for most fractal coding methods used today. It was improved by Yuval Fisher and a number of other researchers.

In this method, the image is divided into a set of non-overlapping range blocks (range subimages), and a set of overlapping domain blocks (domain subimages) is defined. For each range block, the encoding algorithm finds the best-matching domain block and an affine transformation that maps this domain block onto the range block. The structure of the image is thus represented by the system of range blocks, domain blocks, and transformations.

The idea is as follows: suppose the original image is a fixed point of some contraction mapping. Then we can store this mapping instead of the image itself, and to restore the image it is enough to apply the mapping repeatedly to any starting image.

By the Banach fixed-point theorem, such iterations always converge to the fixed point, that is, to the original image. In practice, the whole difficulty lies in finding the most suitable contraction mapping and storing it compactly. As a rule, the algorithms for finding the mapping (that is, the compression algorithms) rely heavily on brute-force search and are computationally expensive. Restoration algorithms, on the other hand, are quite efficient and fast.
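A toy one-dimensional sketch of the range/domain scheme, assuming a signal instead of an image, range blocks of 2 samples, domain blocks of 4 samples shrunk by averaging, and a brightness map s·d + o with |s| capped at 0.9 so the whole mapping stays contractive. Encoding is the slow brute-force search; decoding just iterates the stored mapping from an arbitrary start. All names and parameters are hypothetical.

```python
def _fit(dom, rng, s_max=0.9):
    """Least-squares fit rng ~ s * dom + o, with |s| capped so the map stays contractive."""
    n = len(rng)
    md, mr = sum(dom) / n, sum(rng) / n
    var = sum((d - md) ** 2 for d in dom)
    s = 0.0 if var == 0 else sum((d - md) * (r - mr) for d, r in zip(dom, rng)) / var
    s = max(-s_max, min(s_max, s))
    return s, mr - s * md


def _domain(signal, start, size):
    """Domain block of 2*size samples, shrunk to size samples by averaging neighbours."""
    return [(signal[start + 2 * k] + signal[start + 2 * k + 1]) / 2 for k in range(size)]


def fractal_encode(signal, size=2):
    """Brute-force search: for each range block, the best domain block and its (s, o)."""
    assert len(signal) % size == 0 and len(signal) >= 2 * size
    code = []
    for r0 in range(0, len(signal), size):  # non-overlapping range blocks
        rng = signal[r0:r0 + size]
        best = None
        for d0 in range(len(signal) - 2 * size + 1):  # overlapping domain blocks
            dom = _domain(signal, d0, size)
            s, o = _fit(dom, rng)
            err = sum((s * d + o - r) ** 2 for d, r in zip(dom, rng))
            if best is None or err < best[0]:
                best = (err, d0, s, o)
        code.append(best[1:])  # only (domain start, s, o) is stored
    return code


def fractal_decode(code, length, size=2, start=None, iterations=100):
    """Apply the stored mapping repeatedly; any starting signal converges to the fixed point."""
    v = list(start) if start is not None else [0.0] * length
    for _ in range(iterations):
        new = v[:]
        for i, (d0, s, o) in enumerate(code):
            dom = _domain(v, d0, size)
            for k in range(size):
                new[i * size + k] = s * dom[k] + o
        v = new
    return v


signal = [float(i) for i in range(16)]  # hypothetical test signal: a ramp
code = fractal_encode(signal)
restored = fractal_decode(code, len(signal), start=[50.0] * 16)
print(max(abs(a - b) for a, b in zip(signal, restored)))
```

The ramp is matched exactly by its own shrunken domains, so it is the fixed point of the stored mapping and decoding from a constant start recovers it; for real images the fixed point is only an approximation of the original.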

Briefly, the method proposed by Barnsley can be described as follows. The image is encoded by several simple transformations (in our case, affine ones), that is, it is defined by the coefficients of these transformations (in our case, A, B, C, D, E, F).

For example, the Koch curve can be encoded by four affine transformations, so it is defined unambiguously by just 24 coefficients.

If we take a point and repeatedly apply a randomly chosen transformation to it, the point will always end up somewhere inside the black area of the original image. Having done this operation many times, we fill the entire black area, thereby restoring the picture.

The two best-known images obtained with IFS are the Sierpinski triangle and the Barnsley fern. The first is defined by three affine transformations and the second by five (or, in our terminology, lenses). Each transformation is specified by literally a few bytes, while the image built with them may take up several megabytes.
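The random iteration described above (often called the "chaos game") can be sketched for the Sierpinski triangle. The coefficient layout x' = a·x + b·y + e, y' = c·x + d·y + f is an assumed convention for the text's A..F, and the three maps, point count, and seed are illustrative choices.

```python
import random

# Each map is (a, b, c, d, e, f): x' = a*x + b*y + e,  y' = c*x + d*y + f.
# Three maps, each shrinking the plane by half towards one corner of a triangle.
SIERPINSKI = [
    (0.5, 0.0, 0.0, 0.5, 0.0, 0.0),
    (0.5, 0.0, 0.0, 0.5, 0.5, 0.0),
    (0.5, 0.0, 0.0, 0.5, 0.25, 0.5),
]


def chaos_game(maps, n_points=20000, seed=1):
    """Random iteration: apply a randomly chosen map to the point again and again."""
    rand = random.Random(seed)
    x, y = 0.0, 0.0  # fixed point of the first map, so it already lies on the attractor
    points = []
    for _ in range(n_points):
        a, b, c, d, e, f = rand.choice(maps)
        x, y = a * x + b * y + e, c * x + d * y + f
        points.append((x, y))
    return points


def render(points, width=48, height=24):
    """Plot the points (x and y in [0, 1]) as ASCII art."""
    grid = [[" "] * width for _ in range(height)]
    for x, y in points:
        col = min(int(x * width), width - 1)
        row = min(int((1 - y) * height), height - 1)
        grid[row][col] = "#"
    return "\n".join("".join(line) for line in grid)


print(render(chaos_game(SIERPINSKI)))
```

The whole picture is described by 3 × 6 = 18 numbers; the same loop with four maps (24 numbers) would draw the Koch curve.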

It now becomes clear how the archiver works and why it needs so much time. In essence, fractal compression is the search for self-similar regions in the image and the determination of affine transformation parameters for them.

In the worst case, if no optimizing algorithm is used, all possible image fragments of different sizes have to be enumerated and compared. Even for small images, taking discreteness into account, this gives an astronomical number of options to try. Even a drastic narrowing of the transformation classes, for example allowing scaling only by a fixed factor, does not achieve acceptable running time, and image quality suffers as well. The vast majority of research in fractal compression is now aimed at reducing the archiving time needed to obtain a high-quality image.

For a fractal compression algorithm, as for other lossy compression algorithms, mechanisms for adjusting the compression ratio and the degree of loss are very important. A large set of such methods has been developed to date. First, one can limit the number of transformations, thereby guaranteeing that the compression ratio does not fall below a fixed value. Second, one can require that whenever the difference between the fragment being processed and its best approximation exceeds a certain threshold, the fragment must be split (that is, several lenses must be used for it). Third, one can forbid splitting fragments smaller than, say, four points. By changing the thresholds and the priority of these conditions, one can control the compression ratio very flexibly, from bit-exact reproduction to any desired compression ratio.

Comparison with JPEG.

Today the most common graphics archiving algorithm is JPEG. Let us compare it with fractal compression.

First, note that both algorithms work with 8-bit (grayscale) and 24-bit full-color images. Both are lossy compression algorithms and provide similar compression ratios. Both the fractal algorithm and JPEG can increase the degree of compression at the cost of greater losses. In addition, both algorithms parallelize very well.

The differences appear when we consider the time the algorithms need for compression and decompression. The fractal algorithm compresses hundreds or even thousands of times slower than JPEG, but decompresses 5-10 times faster. Therefore, if an image is compressed only once but transmitted over the network and decompressed many times, the fractal algorithm is more advantageous.

JPEG decomposes the image into cosine functions, so its losses (even at the minimum loss setting) show up as ripples and halos at sharp color boundaries. Because of this effect, people avoid it for images prepared for high-quality printing: there the effect can be very noticeable.

The fractal algorithm is free of this drawback. Moreover, when printing an image, a scaling operation has to be performed every time, since the raster of the printing device does not match the image raster. This conversion can also produce unpleasant artifacts, which are countered either by scaling the image in software (for cheap printing devices such as ordinary laser and inkjet printers) or by equipping the printing device with its own processor, hard drive, and image-processing software (for expensive phototypesetting machines). As you might guess, with the fractal algorithm such problems practically do not arise.

The fractal algorithm will not displace JPEG in widespread use any time soon (if only because of its low archiving speed), but in multimedia applications and computer games its use is quite justified.

Nowadays many users wonder how information compression works, since it is one of the most effective ways to save free space on a hard drive and to make good use of the space on any drive. Modern users who run short of free disk space often have to delete data to free up the space they need, while more advanced users usually compress data to reduce its volume.

However, many people do not even know what the process of information compression is called, let alone which algorithms are used and what each of them offers.

Is it worth compressing the data?

Data compression is important today and is needed by every user. Of course, almost anyone can now buy advanced storage devices with a large amount of free space, as well as high-speed data transmission channels.

However, keep in mind that the amount of data that has to be transmitted grows over time. Just ten years ago, 700 MB was considered the standard size of an ordinary film, whereas today films in HD quality can take up several dozen gigabytes, not to mention how much space high-quality films in Blu-ray format require.

When is data compression necessary?

Of course, you should not expect compression to bring great benefit in every case, but there are a number of situations in which some compression methods are extremely useful and even necessary:

- Sending documents by email, especially when a large amount of information has to be transferred from mobile devices.

- Publishing data on websites, where compression is often used to save traffic.

- Saving free space on the hard disk when the disk cannot be replaced or new storage added. The most common case is a limited budget combined with a shortage of free disk space.

Of course, besides the above, there are many other situations in which compression may be needed to reduce the volume of data, but these are the most common today.

How can I compress the data?

Today there is a wide variety of compression methods, but all of them fall into two main groups: lossy compression and lossless compression.

The latter group is used when the data must be restored with extremely high accuracy, down to a single bit. This is the only suitable approach when compressing, for example, a text document.

It should be noted that in some situations the compressed data do not need to be restored exactly, so algorithms that compress information with certain losses can be used. The advantage of lossy compression is that it is much simpler to implement and also provides the highest possible degree of archiving.

Compression with losses

Lossy methods provide compression an order of magnitude better while preserving sufficient quality. In most cases such algorithms are used to compress analog data, such as images or sounds. In these situations the unpacked files can differ quite noticeably from the original, but the differences are practically imperceptible to the human eye or ear.

Compression without loss

Lossless compression algorithms restore the data exactly, with no loss in the compressed files. However, keep in mind that they do not compress files as effectively.

Universal methods

In addition, there are several universal methods for compressing information efficiently to reduce the space it occupies. In general, three main technologies can be distinguished:

- Stream transformation. New incoming uncompressed data are described in terms of data that have already been processed; no probabilities are computed, and characters are encoded based only on data that have already been processed.

- Statistical compression. These methods fall into two subcategories: adaptive and block methods. Adaptive methods compute probabilities for new data from the data already processed during encoding; this category includes adaptive variants of the Shannon-Fano and Huffman algorithms. Block methods compute the statistics separately for each block of data and add them to the compressed block itself.

- Block transformation. The incoming data are divided into blocks, and each block is then transformed as a whole. Certain methods, especially those based on permuting data within a block, do not by themselves significantly reduce the volume of the data. However, after such processing the data are structured much better, so that subsequent compression by other algorithms becomes simpler and faster.
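A well-known example of a permutation-based block transformation is the Burrows-Wheeler transform (BWT), used for instance in bzip2; treating it as the method meant here is an assumption. The naive sketch below sorts all rotations of the block and keeps the last column; it does not shrink the data at all, it only groups similar characters together. The end-marker character and function names are hypothetical.

```python
def bwt(text: str, end: str = "\0") -> str:
    """Burrows-Wheeler transform: last column of the sorted rotations of text + end."""
    assert end not in text, "the end marker must not occur in the input"
    s = text + end
    rotations = sorted(s[i:] + s[:i] for i in range(len(s)))
    return "".join(row[-1] for row in rotations)


def inverse_bwt(last: str, end: str = "\0") -> str:
    """Naive inverse: rebuild the sorted rotation table one column at a time."""
    table = [""] * len(last)
    for _ in range(len(last)):
        table = sorted(last[i] + table[i] for i in range(len(last)))
    original = next(row for row in table if row.endswith(end))
    return original[:-1]


transformed = bwt("banana")
print(repr(transformed))  # 'annb\x00aa'
assert inverse_bwt(transformed) == "banana"
```

The output has the same length as the input, but equal characters tend to cluster ("nn", "aa"), which is exactly what makes a following RLE or statistical coder more effective. Production implementations use suffix arrays instead of sorting all rotations.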

Compression when copying

One of the most important components of a backup is the device to which the user's data will be moved. The more data you move, the larger the device you need. However, if you compress the data, a shortage of free space is unlikely to remain a problem for you.

Why do you need it?

Compressing information can significantly reduce the time needed to copy the necessary files and, at the same time, save space on the drive. In other words, with compression the data are copied in a much more compact form and faster, and you save the money you would otherwise spend on a larger drive. In addition, compressed data take less time to transfer to a server or to copy over a network.

Data for a backup can be compressed into one or more files; this depends on the program you use and on the kind of information being compressed.

When choosing a utility, be sure to check how well the program can compress data. The result depends on the type of information: the compression efficiency for text documents can exceed 90%, while for other types of data it may be no more than 5%.
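How strongly the result depends on the data can be seen with Python's standard zlib module (DEFLATE, the method used in ZIP archives). A quick sketch: the repetitive sample text is an assumption that exaggerates real documents, and random bytes stand in for data with no redundancy, such as files that are already compressed.

```python
import os
import zlib


def compressed_ratio(data: bytes) -> float:
    """Compressed size as a fraction of the original size (maximum compression level)."""
    return len(zlib.compress(data, 9)) / len(data)


text = ("Data compression reduces the file size by minimizing redundant data. " * 200).encode()
noise = os.urandom(len(text))  # no redundancy at all
print(f"repetitive text: {compressed_ratio(text):.1%} of original size")
print(f"random bytes:    {compressed_ratio(noise):.1%} of original size")
```

The text shrinks to a tiny fraction of its size, while the random bytes come out slightly larger than the input because of container overhead.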

Before compressing a file or folder, it is very important to understand the benefits this brings and to examine the compression methods available in Windows 7:

- NTFS file compression

- Compressed (zipped) folders.

Data compression reduces file size by minimizing its redundant data. In a text file, redundant data often take the form of certain characters, such as the space character or common vowels (e and a), as well as repeated strings of characters. Data compression creates a compressed version of the file in which this redundancy is minimized.

These two compression methods are compared below. In addition, we consider how moving and copying files and folders affects compressed files and folders.

The NTFS file system supports compression on a per-file basis. The compression algorithm used here is lossless, which means that no data are lost when a file is compressed and decompressed. With other (lossy) algorithms, part of the data is lost during compression and subsequent decompression.

NTFS compression is available on hard disks formatted with the NTFS file system. It has the following limitations and features:

- Compression is an attribute of a file or folder.

- Each folder and file on an NTFS volume is either compressed or not compressed.

- New files created in a compressed folder are compressed by default.

- The compression state of a folder does not necessarily reflect the compression state of the files in it. For example, a folder can be compressed without its contents being compressed, and some or all of the files in a compressed folder can be uncompressed.

- You can work with NTFS-compressed files without decompressing them first, because they are decompressed and recompressed without user intervention.

- When a compressed file is opened, the system automatically decompresses it.

- When the file is closed, Windows compresses it again.

- To make them easier to recognize, the names of NTFS-compressed files and folders are displayed in a different color.

- NTFS-compressed files and folders remain compressed only while they are on an NTFS volume.

- NTFS-compressed files cannot be encrypted.

- The compressed bytes of a file are not accessible to applications; they see only the uncompressed data.

- Applications that open compressed files can work with them as if they were not compressed.

- Compressed files cannot be copied to another file system in compressed form.

Note: You can use the Compact command-line tool (compact.exe) to manage NTFS compression.

Moving and copying compressed files and folders.

Moving or copying compressed files and folders can change their compression state. Below are five situations that show how copying and moving affect compressed files and folders.

Copying within an NTFS partition.

How does the state of a compressed file or folder change if you copy it within an NTFS partition? When you copy a file or folder within an NTFS partition, the file or folder inherits the compression state of the target folder. For example, if you copy a compressed file or folder into an uncompressed folder, the file or folder is automatically decompressed.

Moving within an NTFS partition.

What happens to the compression state of a file or folder when you move it within an NTFS partition?

When you move a file or folder within an NTFS partition, the file or folder keeps its original compression state. For example, when you move a compressed file or folder into an uncompressed folder, the file remains compressed.

Copying or moving between NTFS partitions.

What happens to a compressed file or folder when you copy or move it between NTFS partitions?

When you copy or move a file or folder between NTFS partitions, the file or folder inherits the compression state of the target folder. Because Windows 7 treats a move between partitions as a copy followed by a delete, the files inherit the compression state of the target folder.

When you copy a file to a folder that already contains a file with the same name, the copied file takes on the compression attribute of the existing target file, regardless of the compression state of the folder.

Copying or moving between FAT and NTFS volumes.

What happens to the compression of a file that is copied or moved between FAT and NTFS volumes?

Compressed files copied to a FAT volume are stored uncompressed, since FAT volumes do not support compression. However, if you copy or move files from a FAT volume to an NTFS volume, they inherit the compression attribute of the folder you copy them into.

When copying files, NTFS calculates the required disk space based on the size of the uncompressed file. This matters because files are not compressed during the copy, so the system must make sure there is enough space. If you try to copy a compressed file to an NTFS partition that does not have enough free space for the uncompressed file, you will get an error message reporting that there is not enough disk space for the file.
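The copy and move rules above can be collected into a small decision function. This is a simplified model written for illustration only: `Target` and `compressed_after` are hypothetical names, not a Windows API, and the model assumes, as the text states, that the same-name rule applies to copies.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Target:
    """Destination of a copy or move (hypothetical model, not a Windows API)."""
    fs: str                                          # "NTFS" or "FAT"
    folder_compressed: bool                          # compression attribute of the destination folder
    existing_file_compressed: Optional[bool] = None  # attribute of a same-named file already there

def compressed_after(op: str, src_compressed: bool, same_partition: bool, target: Target) -> bool:
    """Predict whether the file ends up NTFS-compressed, following the rules in the text."""
    if op not in ("copy", "move"):
        raise ValueError("op must be 'copy' or 'move'")
    if target.fs == "FAT":
        return False                            # FAT volumes do not support compression
    if op == "move" and same_partition:
        return src_compressed                   # a move within one NTFS partition keeps its state
    if op == "copy" and target.existing_file_compressed is not None:
        return target.existing_file_compressed  # the existing same-named file's attribute wins
    # Copies, and moves between partitions (copy + delete), inherit the target folder's state
    return target.folder_compressed

# A compressed file moved into an uncompressed folder on the same partition stays compressed
print(compressed_after("move", True, True, Target("NTFS", folder_compressed=False)))  # True
```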

As mentioned above, one of the important steps in preparing data for encryption is to reduce its redundancy and even out the statistical regularities of the language used. Compressing the data achieves a partial reduction of redundancy.

Information compression is the process of converting a source message from one code system to another so that the size of the message decreases. Algorithms for compressing information fall into two large groups: those that implement lossless (reversible) compression and those that implement lossy (irreversible) compression.

Reversible compression guarantees exact recovery of the data after decoding and can be applied to any information. It reduces the volume of the output stream without changing its information content, that is, without losing the information structure. The original input can be recovered from the output stream with a restoring algorithm; this recovery is called decompression or unpacking, and only after unpacking are the data suitable for processing in their native format. Lossless compression is used for texts, executable files, and high-quality sound and graphics.

Irreversible compression usually achieves a much higher compression ratio than lossless coding, but allows the decoded data to deviate somewhat from the original. In practice there is a wide range of tasks in which exact restoration of the original information after decompression is not required. This applies in particular to multimedia: sound, photos and video. For example, the widely used JPEG and MPEG multimedia formats rely on irreversible compression. Irreversible compression is usually not combined with cryptographic encryption, since the main requirement of a cryptosystem is that the decrypted data be identical to the original. However, in multimedia applications, digital data such as video are often subjected to lossy compression before being passed to the cryptosystem for encryption. After the information has been delivered to the consumer and decrypted, the multimedia files are used in their compressed form (that is, they are not restored).

Consider some of the most common ways of reversible data compression.

The best-known simple approach to reversible compression is run-length encoding (RLE). Methods of this type replace a run of repeated bytes with a single copy of the repeated byte and the number of repetitions. The only difficulty common to all such methods is how the decompressor can distinguish encoded runs from other, unencoded byte sequences in the resulting stream. This is usually solved by placing markers at the beginning of encoded runs, such as characteristic bits in the first byte of an encoded run or particular values of that first byte. The drawback of RLE is a rather low compression ratio, and the overhead when encoding files with few runs or, worse, with few repeated bytes per run.
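The marker idea can be illustrated with a hypothetical byte-oriented format in the spirit of PackBits; the control-byte layout is an assumption chosen for this sketch. A control byte below 128 announces a block of 1 to 128 literal bytes, and a control byte of 128 or more announces a run of 3 to 130 copies of the single byte that follows. Runs shorter than 3 are cheaper to store as literals.

```python
def rle_encode(data: bytes) -> bytes:
    out = bytearray()
    literals = bytearray()

    def flush_literals() -> None:
        # Emit pending literal bytes in blocks of at most 128, each led by (length - 1)
        while literals:
            chunk = literals[:128]
            out.append(len(chunk) - 1)
            out.extend(chunk)
            del literals[:128]

    i, n = 0, len(data)
    while i < n:
        # Measure the run of identical bytes starting at i (capped at 130)
        run = 1
        while i + run < n and data[i + run] == data[i] and run < 130:
            run += 1
        if run >= 3:
            flush_literals()
            out.append(128 + run - 3)  # marker: high bit set means "run"
            out.append(data[i])        # the repeated byte
        else:
            literals.extend(data[i:i + run])
        i += run
    flush_literals()
    return bytes(out)

def rle_decode(encoded: bytes) -> bytes:
    out = bytearray()
    i = 0
    while i < len(encoded):
        c = encoded[i]
        if c < 128:                    # literal block of c + 1 bytes
            out.extend(encoded[i + 1:i + 2 + c])
            i += 2 + c
        else:                          # run: repeat the next byte (c - 128 + 3) times
            out.extend(bytes([encoded[i + 1]]) * (c - 128 + 3))
            i += 2
    return bytes(out)

print(list(rle_encode(b"AAAAAAAAAABCDEEEEE")))  # [135, 65, 2, 66, 67, 68, 130, 69]
```

The 18-byte sample shrinks to 8 bytes, while input with no runs grows by one control byte per 128 literals, which is the drawback the text mentions.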

With uniform (fixed-length) coding, every message is allocated the same number of bits regardless of its probability. It is natural to expect that the total length of the transmitted messages will decrease if frequent messages are encoded with short code words and rare ones with longer code words. The difficulty this raises is the need for codes with variable-length code words. There are many approaches to building such codes.
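The saving can be expressed through the average code-word length. In the notation below, introduced here for illustration, the source has N symbols s_i with probabilities p(s_i), and l_i is the length in bits of the code word for s_i. A uniform code gives every symbol the same length; a variable-length code lowers the weighted sum by giving short words to probable symbols, but for any uniquely decodable code the sum cannot fall below the source entropy H:

```latex
\begin{aligned}
\bar{l} &= \sum_{i=1}^{N} p(s_i)\, l_i, \\
\text{uniform code: } l_i &= \lceil \log_2 N \rceil \quad (\text{e.g. } N = 4 \Rightarrow \bar{l} = 2 \text{ bits}), \\
\bar{l} &\ge H = -\sum_{i=1}^{N} p(s_i) \log_2 p(s_i).
\end{aligned}
```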

Dictionary methods are widely used in practice; their main representatives are the algorithms of the Lempel and Ziv family. Their basic idea is that fragments of the input stream ("phrases") are replaced by a pointer to the place where they have already appeared in the text. In the literature, such algorithms are referred to as LZ compression algorithms.

Such a method adapts quickly to the structure of the text and can encode short function words efficiently, since they occur in it very often. New words and phrases can also be formed from parts of previously encountered words. Decompression is straightforward: each pointer is simply replaced with the phrase from the dictionary that it refers to. In practice, LZ methods achieve good compression, and an important property is that the decoder is very fast.
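The text describes the LZ family only in general terms. The sketch below uses one specific member, LZ77, in its textbook form: each step emits an (offset, length, next_char) triple pointing back into a sliding window. The window size and maximum match length are arbitrary illustrative parameters. Real compressors encode the triples compactly and find matches far faster than this brute-force search.

```python
def lz77_compress(data: str, window: int = 255, max_len: int = 15) -> list[tuple[int, int, str]]:
    """Return (offset, length, next_char) triples; offset counts back from the current position."""
    i, out = 0, []
    while i < len(data):
        best_off, best_len = 0, 0
        for j in range(max(0, i - window), i):
            length = 0
            # Stop one short of the end so a next_char always exists; matches may overlap i
            while (length < max_len and i + length < len(data) - 1
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        out.append((best_off, best_len, data[i + best_len]))
        i += best_len + 1
    return out

def lz77_decompress(triples: list[tuple[int, int, str]]) -> str:
    out: list[str] = []
    for off, length, ch in triples:
        start = len(out) - off
        for k in range(length):
            out.append(out[start + k])  # copy one char at a time so overlapping matches work
        out.append(ch)
    return "".join(out)

print(lz77_compress("abracadabra abracadabra"))
```

The 23-character input becomes 7 triples; the second "abracadabra" is a single pointer to the first.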

Another approach to compression is Huffman coding, whose encoder and decoder have a fairly simple hardware implementation. The idea of the algorithm is as follows: knowing the probabilities with which characters occur in a message, one can construct variable-length codes consisting of a whole number of bits. More probable symbols are assigned shorter codes, while rarer symbols receive longer ones. This reduces the average code-word length and improves compression efficiency. Huffman codes have the prefix property (no code word is the beginning of another), which allows them to be decoded unambiguously despite their variable length.

Constructing the classic Huffman code requires a priori information about the statistical characteristics of the message source. In other words, the designer must know the probabilities of the characters from which messages are formed. Let us consider the construction of a Huffman code with a simple example.

Let the source produce four symbols with probabilities p(s1) = 0.2, p(s2) = 0.15, p(s3) = 0.55, p(s4) = 0.1. Sort the symbols in descending order of probability and represent them as a table (Fig. 14.3, a).

The construction consists of three main steps. First, the rows of the table are folded: the two rows corresponding to the symbols with the smallest probabilities are replaced by a single row with their combined probability, after which the table is re-sorted. Folding continues until only one row, with a total probability equal to one, remains in the table (Fig. 14.3, b).

Fig. 14.3.

At the second step, the code tree is built from the folded table (Fig. 14.4, a). The tree is built starting from the last column of the table.

Fig. 14.4.

The root of the tree is the total probability of 1 in the last column. In this example, it is formed from the probabilities 0.55 and 0.45, shown as the two nodes connected to the root. The first of them corresponds to symbol s3, so this node is not branched further.

The second node, with probability 0.45, is connected to two third-level nodes with probabilities 0.25 and 0.2. The probability 0.2 corresponds to symbol s1, while 0.25 is in turn formed from the probabilities 0.15 of symbol s2 and 0.1 of symbol s4.

The edges connecting the nodes of the code tree are labelled 0 and 1 (for example, left edges 0 and right edges 1). At the third and final step, a table is built that maps each source symbol to its Huffman code word. Each code word is obtained by reading the labels on the edges along the path from the root of the tree to the corresponding symbol. For this example, the Huffman code takes the form shown in the table on the right (Fig. 14.4, b).
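The folding procedure maps directly onto a priority queue: repeatedly take the two least probable entries, merge them, and prepend one bit to every code inside each merged group. The sketch below is a standard heap-based construction; the function names are illustrative. Which branch receives 0 and which receives 1 is arbitrary, so the bit patterns may differ from Fig. 14.4, b, but the code lengths match the tree described above (s3: 1 bit, s1: 2 bits, s2 and s4: 3 bits).

```python
import heapq
from itertools import count

def huffman_code(probs: dict[str, float]) -> dict[str, str]:
    """Build a Huffman code by repeatedly merging the two least probable groups."""
    tiebreak = count()  # keeps heap entries comparable when probabilities are equal
    heap = [(p, next(tiebreak), {sym: ""}) for sym, p in probs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:  # degenerate source: a single symbol still needs one bit
        return {sym: "0" for sym in probs}
    while len(heap) > 1:
        p_a, _, codes_a = heapq.heappop(heap)  # least probable group
        p_b, _, codes_b = heapq.heappop(heap)  # second least probable group
        # Prepend one bit to every code in each group (branch labels are arbitrary)
        merged = {sym: "1" + c for sym, c in codes_a.items()}
        merged.update({sym: "0" + c for sym, c in codes_b.items()})
        heapq.heappush(heap, (p_a + p_b, next(tiebreak), merged))
    return heap[0][2]

def huffman_decode(bits: str, code: dict[str, str]) -> list[str]:
    """Decode a bit string; the prefix property makes the first match unambiguous."""
    reverse = {c: sym for sym, c in code.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in reverse:
            out.append(reverse[current])
            current = ""
    return out

probs = {"s1": 0.2, "s2": 0.15, "s3": 0.55, "s4": 0.1}
code = huffman_code(probs)
avg = sum(probs[s] * len(code[s]) for s in probs)
print(sorted(code.items()))  # [('s1', '11'), ('s2', '100'), ('s3', '0'), ('s4', '101')]
print(round(avg, 2))         # 1.7
```

The average of 1.7 bits per symbol compares with 2 bits for a fixed-length code over four symbols.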

However, the classic Huffman algorithm has one significant drawback. To restore the contents of the compressed message, the decoder must know the frequency table that the encoder used. Therefore, the compressed message grows by the length of the frequency table, which has to be sent ahead of the data and can cancel out the gains from compressing the message.

Another variant, static Huffman coding, consists of scanning the input stream and building the code from the collected statistics. This requires two passes over the file: one to scan it and collect statistics, and a second to encode it. In static Huffman coding, input symbols (bit strings of various lengths) are mapped to bit strings that are also of variable length: their codes. The code length of each symbol is chosen close to the binary logarithm of its frequency taken with the opposite sign, that is, about -log2 p. The set of all distinct symbols encountered forms the alphabet of the stream.
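The first pass of static coding can be sketched as follows: count the symbols, then compute the ideal length -log2 p for each one. This shows only the statistics-gathering pass; a second pass would build the Huffman code from these counts and encode the stream. The function name is illustrative.

```python
import math
from collections import Counter

def ideal_code_lengths(data: str) -> dict[str, float]:
    """First pass: collect symbol frequencies and return -log2(p) for each symbol."""
    counts = Counter(data)  # frequency of every symbol in the stream
    total = len(data)
    return {sym: -math.log2(n / total) for sym, n in counts.items()}

# a occurs with p = 1/2, b with 1/4, c and d with 1/8 each
lengths = ideal_code_lengths("aaaabbcd")
print(lengths)  # {'a': 1.0, 'b': 2.0, 'c': 3.0, 'd': 3.0}
```

Here the probabilities are exact powers of 1/2, so the ideal lengths are whole numbers and a Huffman code can match them exactly.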

There is another method: adaptive, or dynamic, Huffman coding. Its general principle is to change the coding scheme as the characteristics of the input stream change. This approach gives a single-pass algorithm and does not require the coding information to be stored explicitly. Adaptive coding can give a higher compression ratio than static coding, since changes in the symbol frequencies of the input stream are taken into account more fully. The complication of adaptive Huffman coding is that the tree and the codes of the alphabet symbols must be continually adjusted to the changing statistics of the input stream.
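A deliberately naive sketch of the adaptive principle is shown below. Encoder and decoder start from the same counts (1 for every symbol of an alphabet both sides are assumed to know). Before each symbol they rebuild the same Huffman code from the counts seen so far, and after each symbol they update the counts, so no frequency table is transmitted. Practical adaptive Huffman algorithms, such as those of Faller, Gallager and Knuth (FGK) or Vitter, update the tree incrementally instead of rebuilding it. This sketch also assumes the decoder is told the message length.

```python
import heapq
from itertools import count

def build_code(freqs: dict[str, int]) -> dict[str, str]:
    """Deterministic Huffman construction, so both sides derive the same code."""
    tiebreak = count()
    heap = [(f, next(tiebreak), {s: ""}) for s, f in sorted(freqs.items())]
    heapq.heapify(heap)
    while len(heap) > 1:
        f_a, _, a = heapq.heappop(heap)
        f_b, _, b = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in a.items()}
        merged.update({s: "1" + c for s, c in b.items()})
        heapq.heappush(heap, (f_a + f_b, next(tiebreak), merged))
    return heap[0][2]

def adaptive_encode(message: str, alphabet: str) -> str:
    freqs = {s: 1 for s in alphabet}  # shared starting statistics
    bits = []
    for s in message:
        bits.append(build_code(freqs)[s])  # code from the statistics seen so far
        freqs[s] += 1                      # update exactly as the decoder will
    return "".join(bits)

def adaptive_decode(bits: str, alphabet: str, n: int) -> str:
    freqs = {s: 1 for s in alphabet}
    out, pos = [], 0
    for _ in range(n):
        reverse = {c: s for s, c in build_code(freqs).items()}
        current = ""
        while current not in reverse:  # prefix property: read bits until a code word matches
            current += bits[pos]
            pos += 1
        s = reverse[current]
        out.append(s)
        freqs[s] += 1
    return "".join(out)

bits = adaptive_encode("abracadabra", "abcdr")
print(adaptive_decode(bits, "abcdr", 11))  # abracadabra
```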

Huffman methods offer high speed and reasonably good compression. Huffman coding has minimal redundancy among codes in which each symbol is encoded by a separate string over the binary alphabet {0, 1}. The main drawback of this method is that the compression ratio depends on how close the symbol probabilities are to negative powers of 2, because each symbol is encoded with a whole number of bits.
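Two small worked cases make the integer-bit limitation concrete; the probabilities are chosen for illustration. When every probability is a negative power of 2, the Huffman lengths equal -log2 p and the average length reaches the entropy H. When one symbol is very likely, each symbol still costs at least one bit, so the average is more than twice the entropy:

```latex
\begin{aligned}
p &= \left(\tfrac{1}{2}, \tfrac{1}{4}, \tfrac{1}{8}, \tfrac{1}{8}\right): &
l &= (1, 2, 3, 3), \quad \bar{l} = H = 1.75 \text{ bits}, \\
p &= (0.9,\ 0.1): &
l &= (1, 1), \quad \bar{l} = 1 \text{ bit}, \quad
H = -(0.9 \log_2 0.9 + 0.1 \log_2 0.1) \approx 0.469 \text{ bits}.
\end{aligned}
```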

Arithmetic coding offers a completely different solution. It is based on the idea of converting the entire input stream into a single fractional number. Arithmetic coding packs the characters of the input alphabet without loss, provided that the frequency distribution of these characters is known.

In arithmetic coding, the sequence of symbols to be compressed is represented as a binary fraction from the interval [0, 1).
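The core of arithmetic coding is interval narrowing. Each symbol owns a slice of [0, 1) proportional to its probability, and encoding a symbol shrinks the current interval to that symbol's slice. The sketch below uses exact `fractions.Fraction` arithmetic to keep the idea visible. It does not implement the finite-precision integer arithmetic, bit output, or end-of-message handling of real coders; the decoder is simply told the message length, which is an assumption of this sketch. The probabilities reuse the values from the Huffman example, relabelled as letters.

```python
from fractions import Fraction

def cumulative(probs: dict[str, Fraction]) -> dict[str, tuple[Fraction, Fraction]]:
    """Assign each symbol its [start, end) slice of [0, 1)."""
    ranges, start = {}, Fraction(0)
    for s, p in probs.items():
        ranges[s] = (start, start + p)
        start += p
    return ranges

def arith_encode(message: str, probs: dict[str, Fraction]) -> tuple[Fraction, Fraction]:
    ranges = cumulative(probs)
    low, high = Fraction(0), Fraction(1)
    for s in message:
        width = high - low
        s_low, s_high = ranges[s]
        low, high = low + width * s_low, low + width * s_high  # narrow to the symbol's slice
    return low, high  # any number in [low, high) identifies the message

def arith_decode(value: Fraction, length: int, probs: dict[str, Fraction]) -> str:
    ranges = cumulative(probs)
    low, high = Fraction(0), Fraction(1)
    out = []
    for _ in range(length):
        width = high - low
        target = (value - low) / width  # position of value inside the current interval
        for s, (s_low, s_high) in ranges.items():
            if s_low <= target < s_high:
                out.append(s)
                low, high = low + width * s_low, low + width * s_high
                break
    return "".join(out)

probs = {"a": Fraction(11, 20), "b": Fraction(1, 5), "c": Fraction(3, 20), "d": Fraction(1, 10)}
low, high = arith_encode("aabac", probs)
print(arith_decode((low + high) / 2, 5, probs))  # aabac
```

The width of the final interval equals the product of the symbol probabilities, which is why probable messages need fewer bits to name a number inside it.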

Magnetometry in the simplest version The ferrozond consists of a ferromagnetic core and two coils on it

Magnetometry in the simplest version The ferrozond consists of a ferromagnetic core and two coils on it Effective job search course search

Effective job search course search The main characteristics and parameters of the photodiode

The main characteristics and parameters of the photodiode How to edit PDF (five applications to change PDF files) How to delete individual pages from PDF

How to edit PDF (five applications to change PDF files) How to delete individual pages from PDF Why the fired program window is long unfolded?

Why the fired program window is long unfolded? DXF2TXT - export and translation of the text from AutoCAD to display a dwg traffic point in TXT

DXF2TXT - export and translation of the text from AutoCAD to display a dwg traffic point in TXT What to do if the mouse cursor disappears

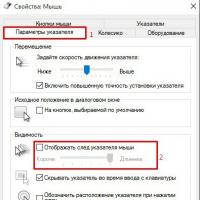

What to do if the mouse cursor disappears