How to enable NVIDIA G-SYNC support and unlock its full potential. G-SYNC technology review: changing the rules of the game

Do you have a G-SYNC monitor and an NVIDIA video card? Let's look at what G-SYNC is, how to enable it, and how to configure it correctly to make full use of the technology. Keep in mind that simply switching it on is not the whole story.

Every gamer knows what vertical synchronization (V-SYNC) is. This feature synchronizes frames with the display so that screen tearing does not occur. If you turn vertical synchronization off on an ordinary monitor, input lag decreases and you will notice that the game responds better to your commands, but the frames are no longer synchronized with the display and screen tearing appears.

V-SYNC eliminates screen tearing but at the same time increases the delay between your input and the picture on the screen, so playing becomes less comfortable. Every time you move the mouse, the motion seems to follow with a slight delay. This is where G-SYNC comes to the rescue, as it eliminates both of these shortcomings.

What is G-SYNC?

A fairly expensive but effective solution for NVIDIA GeForce video cards is G-SYNC technology, which eliminates screen tearing without adding input lag. To use it, you need a monitor that contains a G-SYNC module. The module matches the screen's refresh rate to the number of frames per second being rendered, so no additional delay arises and tearing is eliminated.

After buying such a monitor, many users only enable NVIDIA G-SYNC support in the NVIDIA Control Panel, believing that this is all that needs to be done. In theory that is true, because G-SYNC will work, but if you want to get the most out of the technology you need several additional settings: an appropriate configuration of classic vertical synchronization and an FPS limit in games set a few frames below the monitor's maximum refresh rate. Why? The recommendations below explain.

Enabling G-SYNC in the NVIDIA Control Panel

Let's start with the simplest, most basic step: turning G-SYNC on. This is done in the NVIDIA Control Panel. Right-click the desktop and select NVIDIA Control Panel.

Then go to the Display section and open Set up G-SYNC. Here you can enable the technology by ticking the Enable G-SYNC checkbox.

You can then specify whether it should work only in full-screen mode or also in games running in windowed or borderless windowed mode.

If you select the "Enable G-SYNC for full screen mode" option, the feature will only work in games that run in full-screen mode (this can be changed in the settings of each game). Games running in a window or a borderless window will not use the technology.

If you want windowed games to use G-SYNC as well, select "Enable G-SYNC for windowed and full screen mode". With this option the feature hooks into the currently active window and applies the variable refresh rate to it. You may need to restart the computer for this option to take effect.

How can you verify that the technology is active? Open the Display menu at the top of the window and tick G-SYNC Indicator. You will then see an on-screen notice that G-SYNC is enabled when you start a game.

Then go to Manage 3D Settings in the side menu. In the "Global Settings" section, find the "Preferred refresh rate" field.

Set it to "Highest available". Some games impose their own refresh rate, which can prevent G-SYNC from being fully used. With this setting, the game's own choice is ignored and the monitor's maximum refresh rate is always used, which on G-SYNC devices is most often 144 Hz.

That is the basic setup needed to enable G-SYNC. But if you want to use the full potential of your hardware, read on.

What should I do with V-SYNC if I have G-SYNC? Leave it on or turn it off?

This is the most common dilemma for owners of G-SYNC monitors. It is commonly assumed that the technology completely replaces classic V-SYNC, which can therefore be turned off in the NVIDIA Control Panel or simply ignored.

First you need to understand the difference between them. In theory both features have the same goal: to eliminate screen tearing. But the way they work is significantly different.

V-SYNC synchronizes frames by fitting them to the monitor's constant refresh rate. The feature acts as an intermediary that holds each rendered frame and releases it for display in step with that constant rate, thereby preventing tearing. This can lead to input lag, because V-SYNC must first capture and queue the image and only then display it on the screen.

G-SYNC works the other way around. It does not adjust the image but the monitor's refresh rate, matching it to the number of frames being delivered to the screen. Everything is done in hardware by the G-SYNC module built into the monitor, so there is no additional delay in displaying the picture, as there is with vertical synchronization. This is its main advantage.

The catch is that G-SYNC works well only while the FPS stays within the supported refresh range. This range runs from 30 Hz up to the monitor's maximum refresh rate (60 Hz or 144 Hz). In other words, the technology works fully when the FPS does not fall below 30 and does not exceed 60 or 144 frames per second, depending on the maximum supported refresh rate. The infographic created by Blur Busters illustrates this very well.

What happens if the frame rate goes outside this range? G-SYNC can no longer adjust the screen refresh, so it does not work outside the range. You run into exactly the same problems as on an ordinary monitor without G-SYNC, and classic vertical synchronization takes over. If it is turned off, the screen tears. If it is turned on, you will not see tearing, but input lag appears.
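The behaviour described above can be summarized as a small decision function. This is only a sketch of the article's description, assuming the 30 Hz lower bound given in the text; the function name and the returned labels are illustrative and are not part of any NVIDIA API.

```python
def sync_behaviour(fps: float, max_refresh_hz: float, vsync_on: bool,
                   min_refresh_hz: float = 30.0) -> str:
    """Return which mechanism governs the picture at a given frame rate.

    Inside the G-SYNC range the monitor follows the GPU. Outside it,
    the classic V-SYNC setting decides what the player sees.
    """
    if min_refresh_hz <= fps <= max_refresh_hz:
        return "g-sync"
    # Outside the range the monitor behaves like an ordinary display.
    return "v-sync (input lag)" if vsync_on else "tearing"


if __name__ == "__main__":
    for fps in (25, 90, 160):
        print(fps, "FPS ->", sync_behaviour(fps, 144, vsync_on=True))
```

On a 144 Hz monitor with V-SYNC enabled, this sketch reports G-SYNC operation only for the 90 FPS case; at 25 and 160 FPS classic V-SYNC takes over.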

It is therefore in your interest to stay within the G-SYNC range, which is at least 30 Hz and at most whatever the monitor supports (most often 144 Hz, though there are 60 Hz displays). How? By using the appropriate vertical synchronization setting and by limiting the maximum FPS.

What conclusion follows from this? For situations where the frame rate falls below 30 FPS, vertical synchronization should be left enabled. Such cases are rare, but when they occur V-SYNC ensures that tearing does not appear. When the upper limit is exceeded, the answer is simple: limit the maximum number of frames per second so that it never reaches the upper boundary at which V-SYNC takes over, thereby keeping G-SYNC working continuously.

So if you have a 144 Hz monitor, set the FPS limit to 142 to stay clear of the upper limit. If the monitor is 60 Hz, set the limit to 58. Even if the computer is capable of producing more FPS, it will not do so. V-SYNC then never kicks in and only G-SYNC is active.
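The rule of thumb above is simple arithmetic: take the monitor's maximum refresh rate and subtract a small margin. A minimal sketch, assuming the 2 FPS margin implied by the 144 to 142 and 60 to 58 examples; the helper name `fps_cap` is hypothetical.

```python
def fps_cap(refresh_hz: int, margin: int = 2) -> int:
    """Frame-rate limit that stays just below the monitor's maximum refresh rate."""
    if margin < 0 or margin >= refresh_hz:
        raise ValueError("margin must be non-negative and smaller than the refresh rate")
    return refresh_hz - margin


if __name__ == "__main__":
    for hz in (60, 144):
        print(f"{hz} Hz monitor: limit to {fps_cap(hz)} FPS")
```

The resulting value is what you would enter in the game's own limiter or in an external limiter.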

Enabling vertical synchronization in the NVIDIA settings

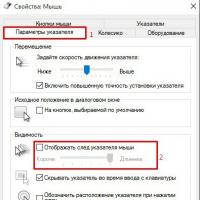

Open the NVIDIA Control Panel and go to the Manage 3D Settings tab. In the Global Settings section, locate the Vertical sync option and set it to "On".

With this setting, vertical synchronization is always ready to take over if the frame rate falls below 30 FPS, a situation a G-SYNC monitor could not handle on its own.

Limiting FPS to a value below the maximum refresh rate

The best universal way to limit frames per second is the RTSS program (RivaTuner Statistics Server). A limiter built into the game itself is certainly an even better solution, but not every game has one.

Download and run the program, then select Global in the application list on the left. Here you can set a common limiter for all applications. On the right, find the "Framerate limit" field. Set the limit to 142 FPS for 144 Hz monitors or 58 FPS for 60 Hz monitors.

Once the limit is set, classic vertical synchronization never activates, so its delay does not appear and playing becomes much more comfortable.

G-Sync Technology Overview | A Brief History of Fixed Refresh Rates

Long ago, monitors were bulky and contained cathode-ray tubes and electron guns. The electron guns bombarded the screen with electrons to light up the colored phosphor dots that we call pixels. They drew each scan line from left to right, working from the top of the screen to the bottom. Varying the speed of the electron gun from one full refresh to the next was not very practical, and there was no particular need for it before 3D games appeared. So CRTs and the related analog video standards were designed around a fixed refresh rate.

LCD monitors gradually replaced CRTs, and digital connectors (DVI, HDMI and DisplayPort) replaced analog ones (VGA). But the associations responsible for standardizing video signals (led by VESA) did not move away from a fixed refresh rate. Film and television still rely on an input signal with a constant frame rate, so once again a switch to a variable refresh rate did not seem all that necessary.

Variable frame rates and fixed refresh rates do not match

Before modern 3D graphics, fixed refresh rates were not a problem for displays. The problem arose when we first encountered powerful graphics processors: the rate at which a GPU renders individual frames (which we call the frame rate, usually expressed in FPS, or frames per second) is not constant. It changes over time. In a heavy scene the card may deliver 30 FPS, and when you look at an empty sky, 60 FPS.

Disabling synchronization leads to tearing

It turns out that the variable frame rate of a graphics processor and the fixed refresh rate of an LCD panel do not work very well together. In such a configuration we run into a graphical artifact called "tearing". It appears when two or more partial frames are displayed together during a single monitor refresh cycle. They are usually offset from one another, which produces a very unpleasant effect in motion.

The image above shows two well-known artifacts that are common but difficult to capture. Because they are display artifacts, you will not see them in ordinary game screenshots, but our pictures show what you actually see while playing. To photograph them you need a camera with a high-speed shooting mode. Alternatively, if you have a video capture card, you can record the uncompressed video stream from the DVI port and clearly see the transition from one frame to the next; this is the method we use for FCAT tests. Still, the effect is best observed with your own eyes.

The tearing effect is visible in both images. The top one was taken with a camera, the bottom one through video capture. The bottom picture is "cut" horizontally and looks misaligned. In the top pair, the left shot was taken on a 60 Hz Sharp display and the right one on a 120 Hz ASUS display. The tear on the 120 Hz display is less pronounced because the refresh rate is twice as high. Nevertheless the effect is visible and shows up in the same way as in the left image. An artifact of this type is a clear sign that the images were taken with vertical synchronization (V-SYNC) disabled.

Battlefield 4 on GeForce GTX 770 with V-Sync disabled

The second effect, visible in the BioShock: Infinite shots, is called ghosting. It is especially noticeable at the bottom of the left image and is related to the delay in refreshing the screen. In short, individual pixels do not change color quickly enough, which produces this kind of afterglow. A single frame cannot convey how ghosting affects the game itself. A panel with an 8 ms gray-to-gray response time, such as the Sharp, will produce a blurred image whenever anything moves on the screen. That is why such displays are generally not recommended for first-person shooters.

V-SYNC: trading one problem for another

Vertical synchronization, or V-SYNC, is a very old solution to the tearing problem. When it is enabled, the video card tries to match the screen's refresh rate, removing tearing completely. The problem is that if your video card cannot keep the frame rate at 60 FPS or above (on a 60 Hz display), the effective frame rate jumps between integer fractions of the refresh rate (60, 30, 20, 15 FPS and so on), which in turn causes noticeable stuttering.

When the frame rate drops below the refresh rate with V-SYNC active, you will notice stuttering
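The 60, 30, 20, 15 FPS steps follow from the fact that, with V-SYNC on, a finished frame has to wait for the next refresh. A minimal sketch of that arithmetic, assuming a steady render time and simple double buffering (real games vary from frame to frame, and triple buffering changes the picture); the function name is illustrative.

```python
import math


def vsync_effective_fps(render_fps: float, refresh_hz: float = 60.0) -> float:
    """Effective frame rate when every frame must wait for a refresh boundary."""
    if render_fps <= 0 or refresh_hz <= 0:
        raise ValueError("rates must be positive")
    if render_fps >= refresh_hz:
        # The card is fast enough: one new frame per refresh.
        return refresh_hz
    # Each frame occupies a whole number of refresh intervals.
    intervals_per_frame = math.ceil(refresh_hz / render_fps)
    return refresh_hz / intervals_per_frame


if __name__ == "__main__":
    for fps in (75, 50, 25, 18):
        print(f"GPU renders {fps} FPS -> displayed {vsync_effective_fps(fps):.0f} FPS")
```

Under these assumptions, a card that could render 50 FPS ends up displaying only 30 FPS on a 60 Hz panel, which is why a small performance drop can feel so abrupt.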

Moreover, because vertical synchronization makes the video card wait and sometimes relies on invisible surface buffers, V-SYNC can add input latency to the rendering chain. V-SYNC can thus be both a blessing and a curse, solving some problems while causing others. An informal survey of our staff showed that gamers generally turn vertical synchronization off and enable it only when tearing becomes unbearable.

A creative approach: NVIDIA introduces G-Sync

With the launch of the GeForce GTX 680, NVIDIA introduced a driver mode called Adaptive V-Sync, which tries to mitigate these problems by turning V-SYNC on when the frame rate is above the monitor's refresh rate and quickly turning it off when performance drops sharply below the refresh rate. Although the technology did its job faithfully, it was only a workaround that did not eliminate tearing when the frame rate was below the monitor's refresh rate.

The introduction of G-Sync is far more interesting. Broadly speaking, NVIDIA shows that instead of forcing video cards to run at a fixed display frequency, we can make new monitors run at a variable one.

The GPU's frame rate determines the monitor's refresh rate, removing the artifacts associated with enabling and disabling V-SYNC

The packet-based data transfer of the DisplayPort connector opened up new possibilities. By using variable blanking intervals in the DisplayPort video signal and replacing the monitor's scaler with a module that works with variable blanking signals, the LCD panel can run at a variable refresh rate tied to the frame rate the video card outputs (within the monitor's refresh range). In practice, NVIDIA made creative use of the special features of the DisplayPort interface and tried to kill two birds with one stone.

Even before testing begins, we want to give credit for a creative approach to solving a real problem that affects PC gaming. This is innovation at its best. But how does G-Sync perform in practice? Let's find out.

NVIDIA sent us an engineering sample of the ASUS VG248QE monitor in which the scaler has been replaced with a G-Sync module. We are already familiar with this display. We covered it in the article "ASUS VG248QE Overview: 24-inch gaming monitor with a 144 Hz refresh rate for $400", in which the monitor earned the Tom's Hardware Smart Buy award. Now it is time to find out how NVIDIA's new technology affects the most popular games.

G-Sync Technology Overview | 3D LightBoost, Built-in Memory, Standards and 4K

Looking through NVIDIA's press materials, we asked ourselves many questions, both about the technology's place in the present and about its role in the future. During a recent trip to the company's head office in Santa Clara, our colleagues from the United States got some answers.

G-Sync and 3D LightBoost

The first thing we noticed is that NVIDIA sent an ASUS VG248QE monitor modified to support G-Sync. This monitor also supports NVIDIA 3D LightBoost technology, which was originally designed to increase the brightness of 3D displays but has long been used unofficially in 2D mode, where strobing the panel's backlight reduces ghosting (or motion blur). Naturally, we wanted to know whether this technology is used in G-Sync.

NVIDIA's answer was no. Although using both technologies at the same time would be ideal, strobing the backlight at a variable refresh rate currently leads to problems with flicker and brightness. These are incredibly difficult to solve because the brightness has to be adjusted and the pulses tracked. As a result, you currently have to choose between the two technologies, although the company is trying to find a way to use them together in the future.

Built-in memory of the G-SYNC module

As we already know, G-Sync eliminates the stepped input delay associated with V-SYNC, because there is no longer any need to wait for the panel scan to finish. Nevertheless, we noticed that the G-Sync module has built-in memory. Can the module buffer frames itself? If so, how long does a frame take to pass through the new channel?

According to NVIDIA, frames are not buffered in the module's memory. Data is displayed on the screen as it arrives, and the memory serves other purposes. The processing time for G-Sync is noticeably less than one millisecond. In effect, we get almost the same delay as with V-SYNC turned off, and it comes from the game, the video driver, the mouse and so on.

Will G-Sync be standardized?

This question was asked in a recent interview with AMD, when a reader wanted to know the company's reaction to G-Sync. We, however, wanted to put it directly to the developer and find out whether NVIDIA plans to turn the technology into an industry standard. In theory, the company could offer G-Sync as an upgrade to the DisplayPort standard that provides variable refresh rates. After all, NVIDIA is a member of VESA.

However, no new specifications for DisplayPort, HDMI or DVI are planned. G-Sync already works over DisplayPort 1.2, so a change to the standard is not needed.

As noted, NVIDIA is working on making G-Sync compatible with the technology currently called 3D LightBoost (which will soon get another name). In addition, the company is looking for a way to reduce the cost of G-Sync modules and make them more accessible.

G-SYNC at Ultra HD resolutions

NVIDIA promises monitors with G-Sync support and resolutions up to 3840x2160 pixels. However, the ASUS model we are looking at today supports only 1920x1080. At the moment, Ultra HD monitors use the STMicro Athena controller, which has two scalers to create a tiled display. We wonder whether the G-Sync module will support an MST configuration.

In truth, 4K displays with a variable refresh rate are still some way off. There is not yet a single scaler that supports 4K resolution; the first should appear in the first quarter of 2014, and monitors equipped with it only in the second quarter. Since the G-Sync module replaces the scaler, compatible panels will start to appear after that point. Fortunately, the module natively supports Ultra HD.

What happens below 30 Hz?

G-Sync can lower the screen's refresh rate down to 30 Hz. The reason is that at very low refresh rates the image on an LCD screen begins to degrade, which leads to visual artifacts. If the source delivers fewer than 30 FPS, the module refreshes the panel automatically, avoiding these problems. This means a single image may be displayed more than once, but the lower threshold is 30 Hz, which ensures the best possible image quality.
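One way to picture the repeated-frame behaviour is to compute how many times each frame would have to be shown so that the panel never refreshes slower than 30 Hz. This is a sketch under a stated assumption: the text only says that the module refreshes the panel automatically and that an image may be shown more than once, so the "smallest whole number of repeats" policy below is a guess for illustration, not NVIDIA's documented algorithm.

```python
import math


def panel_refresh(source_fps: float, min_hz: float = 30.0) -> tuple[int, float]:
    """Return (times each frame is shown, resulting panel refresh rate in Hz).

    Assumed policy: repeat each frame the smallest whole number of times
    that keeps the panel at or above min_hz.
    """
    if source_fps <= 0:
        raise ValueError("source_fps must be positive")
    repeats = max(1, math.ceil(min_hz / source_fps))
    return repeats, source_fps * repeats


if __name__ == "__main__":
    for fps in (45, 30, 20, 10):
        repeats, hz = panel_refresh(fps)
        print(f"{fps} FPS source: each frame shown {repeats}x, panel at {hz:g} Hz")
```

Under this assumption a 20 FPS source would have each frame drawn twice, keeping the panel at 40 Hz.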

G-Sync Technology Overview | Panels 60 Hz, SLI, Surround and Availability

Is the technology limited to high-refresh panels?

You will notice that the first G-Sync monitor already has a very high refresh rate (above the level the technology needs) and a resolution of 1920x1080. But the ASUS display has its own limitations, such as a 6-bit TN panel. We were curious whether G-Sync is planned only for high-refresh displays or whether we will see it in more common 60 Hz monitors. We would also like to see a 2560x1440 resolution as soon as possible.

NVIDIA has said repeatedly that you get the best G-Sync experience when your video card keeps the frame rate in the 30 to 60 FPS range. The technology can therefore genuinely benefit ordinary 60 Hz monitors fitted with a G-Sync module.

So why use a 144 Hz monitor? It seems that many monitor manufacturers have decided to implement a low-motion-blur feature (3D LightBoost), which requires a high refresh rate. But those who decide not to use this feature (and why not, since it is not compatible with G-Sync) can build a G-Sync panel for much less money.

As for resolutions, things are shaping up as follows: QHD screens with refresh rates above 120 Hz may start shipping in early 2014.

Are there problems with SLI and G-SYNC?

What is needed in order to see the G-SYNC in Surround mode?

Nowadays, of course, you do not need to pair two graphics adapters to drive a display at 1080p. Even a mid-range Kepler-based video card can deliver the performance needed for comfortable play at this resolution. But it is also not possible to run two cards in SLI on three G-Sync monitors in Surround mode.

This limitation stems from the display outputs on current NVIDIA cards, which typically have two DVI ports, one HDMI and one DisplayPort. G-Sync requires DisplayPort 1.2, and an adapter will not work (nor will an MST hub). The only option is to connect three monitors in Surround mode to three cards, that is, a separate card for each monitor. Naturally, we assume that NVIDIA's partners will start releasing "G-Sync Edition" cards with more DisplayPort connectors.

G-Sync and triple buffering

Comfortable play with vertical synchronization required triple buffering. Is it needed for G-Sync? The answer is no. Not only does G-Sync not require triple buffering, since the pipeline never stalls, but triple buffering actually hurts G-Sync because it adds an extra frame of delay without any performance gain. Unfortunately, games often set triple buffering themselves, and this cannot be bypassed.

What about games that usually react poorly to turn off V-SYNC?

Games like Skyrim, which is part of our test suite, are designed to run with vertical synchronization on a 60 Hz panel (although this sometimes makes life harder because of input delay). Testing them requires modifying certain .ini files. How does G-Sync behave with games based on the Gamebryo and Creation engines, which are sensitive to vertical sync settings? Are they limited to 60 FPS?

Secondly, you need a monitor with the NVIDIA G-Sync module. This module replaces the display's scaler. So it is not possible, for example, to add G-Sync to a tiled Ultra HD display. In today's review we use a prototype with a resolution of 1920x1080 pixels and a refresh rate of up to 144 Hz. But even with it you can get an idea of the impact G-Sync will have if manufacturers start installing it in cheaper 60 Hz panels.

Thirdly, a DisplayPort 1.2 cable is required. DVI and HDMI are not supported. In the near term this means that the only way to run G-Sync on three monitors in Surround mode is to connect them through a three-way SLI setup, since each card has only one DisplayPort connector and DVI-to-DisplayPort adapters do not work in this case. The same applies to MST hubs.

And finally, do not forget about driver support. The latest package, version 331.93 Beta, is already compatible with G-Sync, and we assume that future WHQL-certified versions will include it as well.

Test stand

| Test Stand Configuration | |
|---|---|
| CPU | Intel Core i7-3970X (Sandy Bridge-E), base frequency 3.5 GHz, overclocked to 4.3 GHz, LGA 2011, 15 MB shared L3 cache, Hyper-Threading on, power-saving features on |
| Motherboard | MSI X79A-GD45 Plus (LGA 2011), X79 Express chipset, BIOS 17.5 |
| RAM | G.Skill 32 GB (8 x 4 GB) DDR3-2133, F3-17000CL9Q-16GBXM x2 @ 9-11-10-28 and 1.65 V |
| Storage device | Samsung 840 Pro SSD 256 GB, SATA 6 Gbit/s |
| Video card | NVIDIA GeForce GTX 780 Ti 3 GB; NVIDIA GeForce GTX 760 2 GB |
| Power supply | Corsair AX860i 860 W |
| System software and drivers | |
| OS | Windows 8 Professional 64-bit |
| DirectX | DirectX 11 |
| Video driver | NVIDIA GeForce 331.93 Beta |

Now we need to figure out in which cases G-Sync has the biggest impact. Chances are that you are already using a 60 Hz monitor. Models with 120 and 144 Hz refresh rates are more popular among gamers, but NVIDIA rightly assumes that most enthusiasts on the market will still stick with 60 Hz.

With vertical synchronization active on a 60 Hz monitor, the most noticeable artifacts appear when the card cannot sustain 60 frames per second, which leads to irritating jumps between 30 and 60 FPS. The result is noticeable stuttering. With vertical synchronization disabled, tearing is most noticeable in scenes where you turn the camera often or where there is a lot of movement. Some players find it so distracting that they simply enable V-SYNC and put up with the stuttering and input delay.

At refresh rates of 120 and 144 Hz and higher frame rates, the display refreshes more often, which shortens the time a single frame persists across several screen scans when performance is insufficient. However, the problems with active and inactive vertical synchronization remain. For this reason we will test the ASUS monitor at 60 and 144 Hz with G-Sync on and off.

G-Sync Technology Overview | Testing G-SYNC with V-Sync enabled

It is time to start testing G-Sync. All that remains is to install a video capture card and an array of several SSDs and get on with the tests, right?

No, wrong.

Today we are measuring quality, not performance. In our case, tests can show only one thing: the frame rate at a particular moment in time. They say nothing at all about the quality and experience of using the technology with G-Sync on and off. So you will have to rely on our carefully considered and eloquent description, which we will try to keep as close to reality as possible.

Why not simply record a video and let readers judge for themselves? The point is that a camera records video at a fixed 60 Hz. Your monitor also plays video at a constant 60 Hz refresh rate. Since G-Sync introduces a variable refresh rate, you would not see the technology in action.

Given the number of available games, the number of possible test combinations is countless. V-SYNC on, V-SYNC off, G-Sync on, G-Sync off, 60 Hz, 120 Hz, 144 Hz... The list goes on. But we will start with a 60 Hz refresh rate and active vertical synchronization.

Probably the easiest place to start is NVIDIA's own demo utility, in which a pendulum swings from side to side. The utility can simulate frame rates of 60, 50 or 40 FPS, or the rate can fluctuate between 40 and 60 FPS. You can then turn V-SYNC and G-Sync on or off. Although the test is contrived, it demonstrates the technology's capabilities well. You can watch the scene at 50 FPS with vertical synchronization enabled and think: "This is quite good, and the visible stuttering is tolerable." But after activating G-Sync you immediately want to say: "What was I thinking? The difference is like night and day. How could I live with this before?"

But let's not forget that this is a technical demonstration. We would like evidence based on real games. For that you need to run a demanding game such as Arma III.

For Arma III we can install a GeForce GTX 770 in the test machine and select ultra settings. With vertical synchronization disabled, the frame rate hovers between 40 and 50 FPS. But if you enable V-SYNC, it drops to 30 FPS. Performance is not high enough for constant fluctuation between 30 and 60 FPS. Instead, the video card's frame rate simply goes down.

Since there was no stuttering, the difference when G-Sync is activated is not significant, except that the actual frame rate jumps 10 to 20 FPS higher. Input delay should also decrease, since the same frame is no longer held over several monitor scans. It seems to us that Arma is generally less "twitchy" than many other games, so the delay is not felt.

In Metro: Last Light, on the other hand, the effect of G-Sync is more pronounced. With a GeForce GTX 770 the game can be run at 1920x1080 with very high detail settings, including 16x AF, normal tessellation and motion blur. You can then step the SSAA setting from 1x to 2x to 3x to gradually reduce the frame rate.

In addition, the game's environment includes a hallway in which it is easy to strafe back and forth. We loaded the level with vertical synchronization active at 60 Hz and headed into the city. Fraps showed that with 3x SSAA the frame rate was 30 FPS, and with anti-aliasing disabled, 60 FPS. In the first case stuttering and delay are noticeable. With SSAA disabled you get a perfectly smooth picture at 60 FPS. Enabling 2x SSAA, however, causes fluctuations between 60 and 30 FPS, and each duplicated frame is a nuisance. This is one of the games in which we would definitely disable vertical synchronization and simply ignore the tearing. Many people have already got into that habit anyway.

But G-Sync eliminates all of the negative effects. You no longer have to watch the Fraps counter, waiting for dips below 60 FPS so you can lower yet another graphics setting. On the contrary, you can raise some of them, because even when the frame rate drops to 50 or 40 FPS there is no obvious stuttering. And what if you turn vertical synchronization off? You will find out later.

G-Sync Technology Overview | Testing G-SYNC with V-Sync disabled

The conclusions in this article are based on a survey of Tom's Hardware authors and friends over Skype (in other words, the sample of respondents is small), but almost all of them understand what vertical synchronization is and what drawbacks users have to put up with. According to them, they resort to vertical synchronization only when tearing, caused by a very large mismatch between the frame rate and the monitor's refresh rate, becomes unbearable.

As you can imagine, the visual effect of disabling vertical synchronization is hard to miss, although it depends heavily on the specific game and its detail settings.

Take, for example, Crysis 3. . The game with easily can put on your knees your graphic subsystem at the highest graphics parameters. And, since Crysis 3. It is a first-person shooter with a very dynamic gameplay, ruptures can be quite tangible. In the example above, the FCAT output was captured between two frames. As you can see, the tree is completely cut.

On the other hand, when we forcibly turn off the vertical synchronization in Skyrim, the breaks are not so strong. Please note that in this case the frame rate is very high, and several frames appear on the screen with each scanning. Such reviews, the number of movements on the frame is relatively low. When playing Skyrim in such a configuration there are problems, and perhaps it is not the most optimal. But it shows that even when the vertical synchronization is disabled, the feeling from the game may change.

As a third example, we chose a frame with the depicting the shoulder of Lara Croft from the game Tomb Raider, where you can see a rather clear image break (also look at the hair and strap T-shirts). Tomb Raider is the only game from our sample, which allows you to choose between double and triple buffering when the vertical synchronization is activated.

The last schedule shows that Metro: Last Light with G-Sync. At 144 Hz, in general, it provides the same performance as when the vertical synchronization is disabled. However, it is impossible to see the lack of breaks. If you use the technology with a screen of 60 Hz, the frame rate is strengthened in 60 FPS, but it will not be slow-moving or delays.

In any case, those of you (and us) who conducted an incredible amount of time behind graphic tests, watching the same benchmark again and again, could get used to them and visually determine how good one or another result is. So we measure the absolute performance of video cards. Changes in the picture with active G-Sync. Immediately rush into the eyes, since smoothness appears, as with the V-SYNC enabled, but without breaks, characteristic of the V-Sync disabled. It is a pity that now we can not show the difference in the video.

G-Sync Technology Overview | Compatibility with games: almost great

Checking other games

We tested a few more games. Crysis 3, Tomb Raider, Skyrim, BioShock Infinite and Battlefield 4 all visited the test bench. All of them, except Skyrim, benefited from G-Sync. The effect depended on the specific game. But if you saw it, you would immediately admit that you had been ignoring shortcomings that were there all along.

Artifacts can still appear. For example, the crawling effect associated with aliasing is more noticeable during smooth motion. Most likely, you will want to set anti-aliasing as high as possible to remove unpleasant jaggies that were not so noticeable before.

Skyrim: special case

The Creation engine, on which Skyrim is built, enables V-Sync by default. To test the game at frame rates above 60 FPS, you need to add the line iPresentInterval=0 to one of the game's .ini files.
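If you prefer to script the change, the sketch below adds or overwrites the key in INI text using Python's standard `configparser`. The `[Display]` section name and the sample contents are assumptions made for illustration; check which .ini file and section your installation actually uses, and keep a backup, since `configparser` discards comments when it rewrites a file.

```python
import configparser
import io

def set_present_interval_zero(ini_text: str, section: str = "Display") -> str:
    """Return INI text with iPresentInterval=0 set in the given section.

    The section name is an assumption; pass a different one if needed.
    """
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.optionxform = str  # keep the original capitalisation of keys
    parser.read_string(ini_text)
    if not parser.has_section(section):
        parser.add_section(section)
    parser.set(section, "iPresentInterval", "0")
    out = io.StringIO()
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()

# Hypothetical file contents, for illustration only.
sample = "[Display]\niSize W=1920\niSize H=1080\n"
print(set_present_interval_zero(sample))
```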

Thus, Skyrim can be tested in three ways: in its original state, letting the NVIDIA driver "use application settings"; with G-Sync enabled in the driver and the Skyrim settings left intact; and with G-Sync enabled and V-Sync disabled in the game's .ini file.

The first configuration, with the test monitor set to 60 Hz, showed a stable 60 FPS at ultra settings with a GeForce GTX 770. As a result, we got a smooth and pleasant picture. However, user input still suffers from lag. In addition, strafing from side to side revealed noticeable motion blur. Still, this is how most people play on the PC. Of course, you can buy a 144 Hz screen, and it will indeed eliminate the blur. But since the GeForce GTX 770 delivers about 90-100 frames per second, there will be noticeable stutter as the engine fluctuates between 144 and 72 FPS.

At 60 Hz, G-Sync has a negative effect on the picture, probably because of the active V-Sync, even though the technology is supposed to work with V-Sync disabled. Now strafing (especially close to walls) causes pronounced stutter. This is a potential problem for 60 Hz G-Sync panels, at least in games like Skyrim. Fortunately, the ASUS VG248Q monitor can be switched to 144 Hz mode, and despite the active V-Sync, G-Sync works at that refresh rate without complaints.

Disabling V-Sync in Skyrim completely makes mouse control feel "crisper". However, tearing appears (not to mention other artifacts, such as flickering water). Turning on G-Sync leaves the stutter in place at 60 Hz, but at 144 Hz the situation improves significantly. Although we test the game with V-Sync disabled in our video card reviews, we would not recommend playing without it.

For Skyrim, perhaps the best solution is to turn G-Sync off and play at 60 Hz, which gives a constant 60 frames per second at the graphics settings of your choice.

G-Sync Technology Overview | G-SYNC - what did you wait for?

Even before we received a test sample of the ASUS monitor with G-Sync, we were pleased that Nvidia is working on a very real problem affecting games, one for which no solution had yet been proposed. Until now, you could either turn V-Sync on or leave it off, and either choice came with compromises that hurt the gaming experience. If you prefer to leave V-Sync off until tearing becomes unbearable, you are choosing the lesser of two evils.

G-Sync solves the problem by letting the monitor scan out the screen at a variable rate. Innovation of this kind is the only way to keep moving our industry forward while preserving the technical advantage of personal computers over game consoles and other platforms. Nvidia will no doubt face criticism for not developing a standard that competitors could adopt. However, its solution uses DisplayPort 1.2. As a result, only two months after the G-Sync announcement, the technology was in our hands.

The question is whether NVIDIA delivers everything it promised with G-Sync.

Three talented developers praising a technology that you have not yet seen in action can inspire anyone. But if your first experience of G-Sync is NVIDIA's pendulum demo, you will certainly wonder whether such a huge difference is even possible, or whether the test is a special scenario that is too good to be true.

Naturally, in real games the effect is not so clear-cut. On the one hand there were exclamations of "Wow!" and "That's crazy!"; on the other, "I think I can see the difference." The impact of enabling G-Sync is most noticeable when the display's refresh rate changes from 60 Hz to 144 Hz. But we also tried testing at 60 Hz with G-Sync to find out what you will (hopefully) get with cheaper displays in the future. In some cases, simply moving from 60 to 144 Hz makes a big difference, especially if your video card can deliver a high frame rate.

Today we know that ASUS plans to add G-Sync support to the ASUS VG248QE, which, according to the company, will sell for $400 next year. The monitor has a native resolution of 1920x1080 pixels and a refresh rate of 144 Hz. The version without G-Sync has already received our Smart Buy award for outstanding performance. But for us personally, the 6-bit TN panel is a drawback. We would really like to see 2560x1440 pixels on an IPS panel. We would even accept a 60 Hz refresh rate if it helps bring the price down.

Although we expect a whole raft of announcements at CES, we have heard no official NVIDIA comments on other displays with G-Sync modules or on their prices. In addition, we are not sure what the company's plans are for the upgrade module, which is supposed to let you install G-Sync in an already purchased ASUS VG248QE monitor in 20 minutes.

For now we can say that it was worth the wait. You will see that in some games the effect of the new technology is unmistakable, while in others it is less pronounced. Either way, G-Sync answers the age-old question of whether or not to enable V-Sync.

There is another interesting thought. Now that we have tested G-Sync, how much longer can AMD avoid commenting? The company teased our readers in its interview (in English), noting that it would soon decide on this possibility. Does it have something in the works? The end of 2013 and the beginning of 2014 hold a lot of interesting news for us to discuss, including the Mantle version of Battlefield 4, the upcoming NVIDIA Maxwell architecture, G-Sync, AMD's XDMA engine with CrossFire support, and rumors of new dual-GPU video cards. Right now we lack video cards with more than 3 GB (NVIDIA) or 4 GB (AMD) of GDDR5 memory that cost less than $1000...

Instructions

To change this option, open your game's menu, find the "Options" or "Settings" menu, and in the "Video" section look for "Vertical Sync". If the menu is in English and the options are text, look for the "Disabled" or "Off" position of the switch. Then click "Apply" to save the setting. The change takes effect after the game is restarted.

It is a different matter if the application has no such setting. In that case you will have to configure synchronization through the video card driver. The procedure differs for AMD Radeon and NVIDIA GeForce video cards.

If your video card belongs to the GeForce family, right-click the desktop and select "NVIDIA Control Panel". Another option is to open the Control Panel from the Start menu, where you will find an icon with the same name. If you cannot find the icon either in the Control Panel or in the desktop menu, look near the clock in the corner of the screen: there will be a green NVIDIA icon that resembles an eye. Double-click it. As a result, the video card settings menu opens.

The driver control panel window consists of two parts: the left side lists categories of actions, and the right side shows the available options and information. Select the bottom item, "Manage 3D settings", on the left. On the right, on the "Global Settings" tab, find the "Vertical sync" option at the very top. Next to it, the current setting is shown: "On", "Off" or "Use the 3D application setting". Select "Off" from the drop-down list and confirm by clicking Apply.

Owners of AMD Radeon video cards configure the driver through a dedicated application, Catalyst. To launch it, right-click the desktop and select Catalyst Control Center. Alternatively, open the computer's Control Panel and find the icon with the same name. A third way: in the system tray near the clock, in the lower right corner of the screen, look for a round red icon and double-click it. The result of all these actions is the same: the configuration center for your video card opens.

The principle is the same as in the NVIDIA control panel. The left side of the window lists categories of settings, and the right side shows detailed settings and hints for them. Select "Games" or "Gaming" in the left column, then the "3D Application Settings" submenu. The settings for various video card parameters appear on the right. Scroll down the page and find "Wait for vertical refresh", with a four-position slider beneath it. Move the slider to the leftmost position; the label below will read "Always off". Click Apply in the lower right corner of the window to save the changes.

Test methodology

The ASUS ROG SWIFT PG278Q monitor was tested using our new methodology. We decided to abandon the slow and sometimes inaccurate Spyder4 Elite in favor of the faster and more accurate X-Rite i1Display Pro colorimeter. From now on, this colorimeter will be used to measure the main display parameters together with the latest version of the Argyll CMS software package. All operations are carried out in Windows 8. During testing, the screen refresh rate is 60 Hz.

In accordance with the new method, we measure the following monitor parameters:

- White luminance at backlight levels from 0 to 100% in 10% steps;

- Black luminance at backlight levels from 0 to 100% in 10% steps;

- Display contrast at backlight levels from 0 to 100% in 10% steps;

- Color gamut;

- Color temperature;

- Gamma curves of the three primary RGB colors;

- Gray gamma curve;

- Delta E (according to CIEDE2000).

For calibration and Delta E analysis we use dispcalGUI, a graphical interface for Argyll CMS, in the latest version at the time of writing. All of the measurements described above are taken before and after calibration. During the tests, we measure the main monitor profiles: default, sRGB (if available) and Adobe RGB (if available). Calibration is performed in the default profile, except in special cases, which will be noted separately. For wide-gamut monitors, we choose the hardware sRGB emulation mode if it is available. In that case, the colors are converted by the monitor's internal LUT (which may have a depth of up to 14 bits per channel) and displayed on a 10-bit panel, whereas trying to narrow the gamut to sRGB with the operating system's color correction tools would reduce color coding accuracy. Before all tests, the monitor is warmed up for an hour and all its settings are reset to factory defaults.

We will also continue our old practice of publishing calibration profiles for the tested monitors at the end of the article. At the same time, the 3DNews test lab warns that such a profile cannot fully correct the flaws of your specific monitor. The fact is that all monitors (even within the same model) inevitably differ from one another in their small color reproduction errors. It is physically impossible to make two identical panels; they are too complex. Therefore, any serious monitor calibration requires a colorimeter or a spectrophotometer. Still, a "universal" profile created for one particular unit can, in general, improve matters on other units of the same model, especially for cheap displays with pronounced color reproduction defects.

Viewing angles, backlight uniformity

The first thing that interested us in the ASUS PG278Q was the viewing angles, because the monitor uses a TN panel, and the biggest problems of TN panels are always associated with them. Fortunately, things turned out not to be so bad. Of course, IPS panels have wider viewing angles, but the ASUS PG278Q does not have to be turned often to eliminate contrast and color distortion.

But the developers of the ASUS PG278Q could not avoid problems with backlight uniformity. The monitor has slight backlight bleed in all four corners and along the top. If a game is running, the bleed is hard to see, but play a film in a dark room (with the usual black bars at the top and bottom) and the defect immediately becomes noticeable.

Testing without calibration

The maximum brightness of the ASUS PG278Q was 404 cd/m², even more than the manufacturer promises. Such a high value is justified by 3D support, because with active shutter glasses the perceived brightness of the monitor can be halved. The maximum brightness of the black field was 0.40 cd/m², which is also quite good. As a result, static contrast hovers around 1000:1 across the entire backlight brightness range. This is an excellent result: such high contrast is characteristic of high-quality IPS panels. MVA, however, remains out of reach.
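Static contrast is simply the white luminance divided by the black luminance at the same backlight level. A small Python check of the figures quoted above (the function name is ours, introduced for illustration):

```python
def static_contrast(white_cd_m2: float, black_cd_m2: float) -> float:
    """Static contrast ratio: white luminance over black luminance."""
    if black_cd_m2 <= 0:
        raise ValueError("black luminance must be positive")
    return white_cd_m2 / black_cd_m2

# Values quoted in the review for maximum backlight.
ratio = static_contrast(404.0, 0.40)
print(f"Static contrast at full backlight: {ratio:.0f}:1")
```

The result, about 1010:1, agrees with the "around 1000:1" figure given in the text.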

The color gamut of our test unit is as good as it needs to be. The sRGB color space is covered by 107.1%. The white point lies close to the reference D65 point.

As far as games are concerned, the color palette of the ASUS PG278Q is perfectly fine, but professional photo editing may be problematic because colors are slightly oversaturated owing to a gamut wider than sRGB. However, this display is designed specifically for games, so this shortcoming does not deserve much attention.

The color temperature of the ASUS PG278Q held at about 6,000 K during the measurements, which is 500 K below the norm. This means that light colors may show a slight warm tint.

Only the red gamma curve stayed close to the reference; the blue and green curves sagged, although they tried to stay together. The gray gamma, on the other hand, is almost fine. In dark tones it practically does not deviate from the reference curve, and toward light tones it drifts away, but not by much.

The average Delta E color accuracy value was 2.08, and the maximum was 7.07. These results are, of course, not the best, but first, the ASUS PG278Q is intended for games rather than photo editing, and second, for a TN panel the results are quite satisfactory.
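For readers unfamiliar with the metric: Delta E is the distance between a measured color and its reference in a perceptual color space. The review uses CIEDE2000, which is too long to reproduce here; the sketch below substitutes the much simpler CIE76 formula (plain Euclidean distance in CIELAB) just to show how an average and a maximum are obtained from a set of patches. The Lab values are made-up examples, not measurements of the PG278Q, and CIE76 numbers are not comparable with the CIEDE2000 figures in this article.

```python
import math
from statistics import mean

def delta_e_cie76(lab_measured, lab_reference):
    """CIE76 color difference: Euclidean distance between two (L*, a*, b*) triples."""
    return math.dist(lab_measured, lab_reference)

def summarize_delta_e(measured, reference):
    """Return (average, maximum) Delta E over paired lists of Lab patches."""
    errors = [delta_e_cie76(m, r) for m, r in zip(measured, reference)]
    return mean(errors), max(errors)

# Hypothetical patches, for illustration only.
reference = [(50.0, 0.0, 0.0), (70.0, 20.0, -10.0), (30.0, -15.0, 25.0)]
measured = [(50.0, 3.0, 4.0), (70.0, 20.0, -10.0), (31.0, -15.0, 25.0)]

average, worst = summarize_delta_e(measured, reference)
print(f"average dE = {average:.2f}, max dE = {worst:.2f}")
```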

Testing after calibration

Usually after calibration the white brightness drops, and quite a lot: by 10% or more, even with fairly high-quality panels. In the case of the ASUS PG278Q, it fell by about 3%, to 391 cd/m². The brightness of the black field was not affected by hardware calibration. As a result, static contrast decreased to 970:1.

Calibration had practically no effect on the color gamut, but the white point returned to its proper place, even though it moved only a little.

After calibration, the color temperature rose a little but did not reach the reference. The gap between the measured and reference values was now about 100-200 K instead of 500 K, which is quite tolerable.

The position of the three primary gamma curves, unfortunately, hardly changed after calibration, while the gray gamma began to look a little better.

But calibration had the best effect on color accuracy. The average Delta E dropped to 0.36, the maximum to 1.26. These are excellent results for any panel, and for TN+Film they are simply fantastic.

Testing G-Sync: Method

NVIDIA's G-SYNC guide gives test settings for several games at which the frame rate fluctuates between 40 and 60 FPS. It is under these conditions, at a 60 Hz refresh rate, that stutter ("freezes") is most frequent with V-Sync enabled. To begin with, we compare three usage scenarios: with V-Sync, without it, and with G-SYNC, all at 60 Hz.

But remember that raising the refresh rate from 60 to 120/144 Hz by itself makes tearing less noticeable without V-Sync, and with V-Sync it shortens the freezes from about 17 ms to 8/7 ms, respectively (one refresh interval in each case). Is there a real benefit from G-Sync compared to V-Sync at 144 Hz? Let's check.
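With V-Sync, a frame that misses a vertical blank waits for the next one, so the length of one refresh interval is the natural unit for these delays. A quick Python calculation of that interval for the refresh rates discussed here (1000 ms divided by the rate in hertz; the function name is ours):

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Duration of one screen refresh in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz

for hz in (60, 120, 144):
    print(f"{hz:>3} Hz -> {refresh_interval_ms(hz):.1f} ms per refresh")
```

This gives 16.7 ms at 60 Hz, 8.3 ms at 120 Hz and 6.9 ms at 144 Hz.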

I would like to emphasize that, if you believe the description, the refresh rate loses its meaning entirely with G-Sync. So it is not quite correct to say that we, for example, compared V-Sync and G-Sync at 60 Hz. V-Sync really did run at 60 Hz, whereas G-Sync means refreshing the screen on demand rather than at a fixed period. But even with G-Sync enabled, we can still select the screen refresh rate in the driver control panel. Fraps shows that, with G-Sync active, games are held to exactly that frame rate ceiling, as if V-Sync were working. It turns out that this setting governs the minimum frame lifetime and, accordingly, the screen refresh interval. Roughly speaking, it sets the frequency range in which the monitor operates: from 30 Hz up to 60-144 Hz.
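One way to picture this behaviour is as a clamp: the screen is refreshed when a frame is ready, but never sooner than the interval implied by the selected refresh rate and never later than the interval implied by the 30 Hz floor. The Python sketch below models only that; it is our simplification, not NVIDIA's algorithm, and what the module really does when the GPU falls below 30 FPS is outside the scope of this text.

```python
def gsync_refresh_interval_ms(render_ms: float,
                              max_hz: float = 144.0,
                              min_hz: float = 30.0) -> float:
    """Simplified model: time between screen refreshes for a given render time.

    max_hz is the refresh rate selected in the driver (the ceiling),
    min_hz is the lower bound of the monitor's operating range.
    """
    if render_ms <= 0 or not 0 < min_hz <= max_hz:
        raise ValueError("invalid timing parameters")
    shortest = 1000.0 / max_hz  # cannot refresh faster than the ceiling
    longest = 1000.0 / min_hz   # cannot wait longer than the floor allows
    return min(max(render_ms, shortest), longest)

for render in (5.0, 12.0, 20.0, 50.0):
    interval = gsync_refresh_interval_ms(render)
    print(f"frame rendered in {render:4.1f} ms -> refresh after {interval:.1f} ms")
```

Inside the range, the refresh interval simply follows the render time, which is the whole point of the technology.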

To enable G-SYNC, go to the NVIDIA control panel, find the corresponding link on the left side of the screen and tick the only checkbox. The technology is supported in drivers for Windows 7 and 8.

Then make sure that G-SYNC is also enabled in the "3D Settings" section; it can be found in the Vertical Sync submenu.

That is all: G-SYNC is now enabled for all games running in full-screen mode; it does not work in windowed mode yet. For testing, we used a test bench with a GeForce GTX Titan Black graphics card.

The tests were conducted in Assassin's Creed: Black Flag and in Counter-Strike: Global Offensive. We tested the new technology in two ways: we simply played, and then we went hunting for tears using a script that smoothly panned the game camera, that is, "moved the mouse" horizontally. The first method let us judge how G-Sync feels "in battle", and the second let us see more clearly the difference between V-Sync on/off and G-Sync.

G-Sync in Assassin's Creed: Black Flag, 60 Hz

Without V-Sync and G-SYNC at 60 Hz, tearing was clearly visible with almost any camera movement.

The tear is visible in the upper right part of the frame, near the ship's mast

With V-Sync turned on, the tearing disappeared, but freezes appeared, which did the gameplay no good.

The doubled ship masts in the photo are one of the signs of freezes

After G-Sync was turned on, the tearing and freezes disappeared completely, and the game ran more smoothly. Of course, periodic drops in frame rate to 35-40 FPS were noticeable, but thanks to the synchronization of the display and the video card they did not cause such perceptible slowdowns as with V-Sync.
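The difference between the V-Sync freezes and the smoother G-Sync picture can be illustrated with a toy timeline. The Python sketch below assumes double buffering, a GPU that starts the next frame right after the previous one is shown, and made-up render times of 16-22 ms (roughly 45-60 FPS); it is a model for illustration, not a measurement.

```python
import math

def present_times(render_times_ms, refresh_hz=60.0, variable_refresh=False):
    """Return the times (ms) at which successive frames reach the screen."""
    interval = 1000.0 / refresh_hz
    shown, last_flip = [], 0.0
    for render_ms in render_times_ms:
        ready = last_flip + render_ms  # next frame starts rendering at the previous flip
        if variable_refresh:
            # Variable refresh: show the frame when ready, but no faster than the panel allows.
            flip = max(ready, last_flip + interval)
        else:
            # V-Sync: wait for the next fixed vertical blank.
            flip = math.ceil(ready / interval - 1e-9) * interval
        shown.append(flip)
        last_flip = flip
    return shown

def on_screen_gaps(times):
    """Intervals between consecutive presentations, starting from t = 0."""
    return [round(b - a, 1) for a, b in zip([0.0] + times[:-1], times)]

renders = [16, 20, 16, 22]  # hypothetical render times in ms
print("V-Sync:", on_screen_gaps(present_times(renders)))
print("G-Sync:", on_screen_gaps(present_times(renders, variable_refresh=True)))
```

In this model V-Sync alternates between 16.7 ms and 33.3 ms between frames, which is perceived as stutter, while variable refresh follows the render times (16.7, 20.0, 16.7 and 22.0 ms).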

However, as they say, it is better to see once than to hear a hundred times, so we made a short video that shows the new Assassin's Creed running with V-Sync on and off, as well as with G-SYNC. Of course, the video cannot fully convey the "live" impression, if only because it was shot at 30 frames per second. In addition, a camera "sees" the world differently from the human eye, so the video may show artifacts that cannot be seen in real life, such as a doubled image. Nevertheless, we tried to make the video as illustrative as possible: at the very least, the presence or absence of tearing is quite visible in it.

Now let's launch Assassin's Creed: Black Flag with minimum settings and see what has changed. The frame rate in this mode did not exceed 60 FPS, the configured screen refresh rate. With V-Sync disabled, tearing was visible on the screen. But as soon as V-Sync was turned on, the tearing disappeared and the picture looked almost the same as with G-Sync.

At maximum graphics settings, the frame rate began to fluctuate around 25-35 FPS. Of course, the tearing without V-Sync and the freezes with it returned at once. Even enabling G-SYNC could not fix the situation: at such a low frame rate, the GPU itself is the source of the slowdowns.

G-Sync in Assassin's Creed: Black Flag, 144 Hz

With V-Sync and G-SYNC disabled, tearing could still be found on the screen, but thanks to the 144 Hz refresh rate there was much less of it than before. With V-Sync turned on, the tearing disappeared, but freezes became more frequent, almost as at a 60 Hz refresh rate.

Turning on G-Sync, as before, corrected the situation, but the strongest improvement in the picture was noticeable only at high frame rates, from 60 FPS up. The trouble is that such a high frame rate could not be reached without lowering the settings or adding a second GeForce GTX Titan Black class video card.

G-Sync in Counter-Strike: Global Offensive, 60 and 144 Hz

In online games, gameplay and image quality are affected not only by the video card and the monitor but also by ping: the higher it is, the slower the game responds. During our tests, ping was 25-50 ms, and the frame rate hovered around 200 FPS.

Image settings used in Counter-Strike: Global Offensive

Without G-SYNC and V-Sync, tearing was observed in CS just as in Assassin's Creed. With V-Sync turned on at 60 Hz, playing became harder: the frame rate fell to 60 FPS, and the game character began to move unevenly because of the large number of freezes.

With G-Sync turned on, the frame rate stayed at 60 frames per second, but there were far fewer freezes. They did not disappear entirely, but they no longer spoiled the experience.

Now let's raise the screen refresh rate and see what changes. With G-SYNC and V-Sync disabled at 144 Hz, there was much less tearing than at 60 Hz, but it did not disappear completely. With V-Sync turned on, all tearing disappeared and the freezes became practically unnoticeable: playing in this mode is very comfortable, and movement speed does not drop. Turning on G-Sync made the picture a real treat: the gameplay became so smooth that even a 25 ms ping began to affect it.

Testing the ULMB mode

Ultra Low Motion Blur is enabled from the monitor's menu, but first you need to disable G-SYNC and set the screen refresh rate to 85, 100 or 120 Hz. Lower or higher frequencies are not supported.

The practical use of this feature is obvious: text on websites smears less while scrolling, and in RTS and other strategy games moving units look more detailed.

ASUS ROG SWIFT PG278Q in 3D

The ASUS ROG SWIFT PG278Q is the world's first monitor capable of displaying a stereoscopic picture at 2560x1440, thanks to the DisplayPort 1.2 interface. That is, in principle, quite an achievement too. Unfortunately, the monitor has no built-in IR emitter, so we took the emitter from an NVIDIA 3D Vision kit and the glasses from a 3D Vision kit. This combination worked without problems, and we were able to test stereoscopic 3D properly.

We found no ghosting or other artifacts typical of pseudo-3D video. Of course, some objects in games were occasionally at the wrong depth, but that cannot be blamed on the monitor. On the ASUS PG278Q you can both watch stereo films and play stereo games. The main thing is that the video adapter can keep up.

Conclusions

Without in any way wishing to belittle NVIDIA's achievements, it should be noted that G-Sync is, on the whole, an innovation that boils down to getting rid of a long-standing and harmful atavism: the regular refreshing of LCD panels, which do not inherently need it. It turned out that small changes to the DisplayPort protocol are enough for this; they made it into the 1.2a specification at the snap of a finger and, if AMD's promises are to be believed, will very soon find use in display controllers from many manufacturers.

So far, however, only the proprietary version of this solution, G-SYNC, is available, which we had the pleasure of testing in the ASUS ROG SWIFT PG278Q monitor. The irony is that this is exactly the kind of monitor on which the advantages of G-Sync are not very noticeable. Refreshing the screen at 144 Hz by itself reduces the notorious tearing so much that many will be willing to turn a blind eye to the problem. And with V-Sync, freezes and input lag are less pronounced than on 60 Hz screens. In such a situation, G-Sync can only bring the smoothness of the game to perfection.

Nevertheless, synchronizing the screen refresh with frame rendering on the GPU is still a more elegant and economical solution than constantly refreshing at an ultra-high frequency. Let's also not forget that the use of G-SYNC is not limited to panels with a 120/144 Hz refresh rate. First to come to mind are 4K monitors, which are still limited to 60 Hz both by the panel specifications and by the bandwidth of the video inputs. Then there are IPS monitors, which also cannot move to 120/144 Hz because of the limitations of the technology itself.

At a 60 Hz refresh rate, the effect of G-SYNC can hardly be overstated. If the frame rate stays consistently above 60 FPS, plain V-Sync eliminates tearing just as successfully, but only G-SYNC can keep frame delivery smooth when the frame rate drops below the refresh rate. In addition, thanks to G-SYNC the 30-60 FPS performance range becomes much more playable, which either lowers the performance requirements for the GPU or lets you set more aggressive quality settings. And again our thoughts return to 4K monitors, which need extremely powerful hardware to play on with good graphics.

It is also commendable that NVIDIA has used strobed backlight technology to remove the blur of moving objects (ULMB), which we first encountered in the EIZO Foris FG2421. It is a pity that so far it cannot work simultaneously with G-Sync.

The ASUS ROG SWIFT PG278Q monitor itself is good first and foremost for its combination of 2560x1440 resolution and 144 Hz refresh rate. There were previously no devices with such parameters on the market, and yet it is high time for gaming monitors with such a low response time and stereoscopic 3D support to outgrow the Full HD format. There is no need to find fault with the TN panel in the PG278Q, for it is a really good specimen with very high brightness and contrast and excellent color reproduction, which after calibration would be the envy of IPS displays. The only thing that gives the technology away is the limited viewing angles. The design, befitting such a high-quality product, also deserves praise. The ASUS ROG SWIFT PG278Q receives a well-deserved "Editor's Choice" award; it turned out that good.

The only thing that prevents us from recommending this gaming monitor for purchase without hesitation is the price of around 30 thousand rubles. In addition, at the time of writing the ASUS ROG SWIFT PG278Q is not yet on sale in the Russian Federation, so there is nowhere to see it, or G-Sync, with your own eyes. But we hope that ASUS and NVIDIA will solve this problem in the future, for example by showing G-Sync at computer game exhibitions. And the price will probably come down one day...

From the site's file server you can download the color profile for this monitor, which we obtained after calibration.

The site's editors thank the company "Grandtek" for providing the X-Rite i1Display Pro colorimeter.

G-Sync Technology Overview | Testing G-SYNC with V-Sync disabled

The findings in this material were created on the basis of a survey of authors and friends Tom "S Hardware on Skype (in other words, the sample of the respondents is small), but almost all of them understand that such vertical synchronization and with what disadvantages have to be put up to users. According to them They resort to the help of vertical synchronization only if the gaps are due to a very large scatter in the frame rate and the renewal frequency of the monitor become unbearable.

As you can imagine, the effect of the turned off vertical synchronization on the visual component is difficult to confuse, although it strongly affects the specific game and its detail settings.

Take, for example, Crysis 3. . The game with easily can put on your knees your graphic subsystem at the highest graphics parameters. And, since Crysis 3. It is a first-person shooter with a very dynamic gameplay, ruptures can be quite tangible. In the example above, the FCAT output was captured between two frames. As you can see, the tree is completely cut.

On the other hand, when we force vertical synchronization off in Skyrim, the tearing is not as severe. Note that in this case the frame rate is very high, and several frames appear on the screen with each scan. Because of this, the amount of movement per frame is relatively low. Playing Skyrim in this configuration has its problems, and it is perhaps not the most optimal one. But it shows that even with vertical synchronization disabled, the feel of the game can vary.

As a third example, we chose a frame showing Lara Croft's shoulder from Tomb Raider, where you can see a fairly clear tear in the image (look also at the hair and the strap of the tank top). Tomb Raider is the only game in our sample that lets you choose between double and triple buffering when vertical synchronization is enabled.

The last chart shows that Metro: Last Light with G-Sync at 144 Hz generally delivers the same performance as with vertical synchronization disabled. However, the chart cannot show the absence of tearing. If you use the technology with a 60 Hz screen, the frame rate is capped at 60 FPS, but without stuttering or lag.

In any case, those of you (and us) who have spent an incredible amount of time on graphics tests, watching the same benchmark again and again, may have gotten used to them and can judge visually how good a given result is. That is how we measure the absolute performance of video cards. The changes in the picture with G-Sync active immediately catch the eye, because you get the smoothness of V-Sync enabled without the tearing characteristic of V-Sync disabled. It is a pity that we cannot show the difference in a video right now.

G-Sync Technology Overview | Compatibility with games: almost great

Checking other games

We tested a few more games. Crysis 3, Tomb Raider, Skyrim, BioShock Infinite and Battlefield 4 all visited the test bench. All of them, except Skyrim, benefited from G-Sync. The effect depended on the specific game. But if you saw it, you would immediately admit that you had been ignoring shortcomings that were there all along.

Artifacts can still appear. For example, the crawling effect associated with anti-aliasing is more noticeable during smooth motion. Most likely, you will want to set anti-aliasing as high as possible to remove unpleasant jaggies that were not so noticeable before.

Skyrim: special case

Skyrim's Creation graphics engine enables vertical synchronization by default. To test the game at frame rates above 60 FPS, you need to add the line iPresentInterval=0 to one of the game's .ini files.
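As a rough illustration of that edit, here is a minimal Python sketch that sets iPresentInterval in the text of an .ini file. The function name set_present_interval is hypothetical, and the section name "Display" is an assumption: the article only says the line goes into one of the game's .ini files. Note that this approach rewrites the file through configparser, so comments in the original file are not preserved; back up the file first.

```python
import configparser
import io


def set_present_interval(ini_text: str, section: str = "Display", value: int = 0) -> str:
    """Return a copy of ini_text with iPresentInterval set to `value`.

    `section` is an assumption, not something the article specifies.
    """
    # interpolation=None keeps '%' characters literal; strict=False tolerates
    # duplicate keys, which hand-edited game configs sometimes contain.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = str  # preserve key casing such as iPresentInterval
    parser.read_string(ini_text)
    if not parser.has_section(section):
        parser.add_section(section)
    parser.set(section, "iPresentInterval", str(value))
    out = io.StringIO()
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()


if __name__ == "__main__":
    # SomeOtherKey is a placeholder, not a real game setting.
    print(set_present_interval("[Display]\nSomeOtherKey=1\n"))
```

A value of 0 disables the engine's own synchronization, which is what allows the frame rate to exceed 60 FPS in the tests that follow.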

Thus, Skyrim can be tested in three ways: in its default state, letting the NVIDIA driver "use the application settings"; with G-Sync enabled in the driver and the Skyrim settings left intact; and with G-Sync enabled and V-Sync disabled in the game's .ini file.

The first configuration, with the test monitor set to 60 Hz, showed a stable 60 FPS at ultra settings with a GeForce GTX 770. Consequently, we got a smooth and pleasant picture. However, user input still suffers from lag. In addition, strafing from side to side revealed noticeable motion blur. Still, this is how most people play on the PC. Of course, you can buy a screen with a 144 Hz refresh rate, and it will indeed eliminate the blur. But since the GeForce GTX 770 delivers about 90-100 frames per second, there will be noticeable stuttering as the engine fluctuates between 144 and 72 FPS.
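The jump between 144 and 72 FPS follows from how classic double-buffered V-Sync works: a finished frame can only be shown on a refresh boundary, so each frame occupies a whole number of refresh intervals. The sketch below is a simplified model under that assumption (it ignores triple buffering and driver-level frame queues), and the function name vsync_frame_rate is ours, not part of any API.

```python
import math


def vsync_frame_rate(render_fps: float, refresh_hz: float) -> float:
    """Effective frame rate under a simple double-buffered V-Sync model."""
    if render_fps <= 0 or refresh_hz <= 0:
        raise ValueError("rates must be positive")
    # Number of whole refresh intervals each rendered frame stays on screen.
    intervals = math.ceil(refresh_hz / render_fps)
    return refresh_hz / intervals


if __name__ == "__main__":
    for fps in (60, 95, 150):
        print(fps, "FPS rendered on a 144 Hz panel ->", vsync_frame_rate(fps, 144))
```

In this model a card rendering about 95 frames per second on a 144 Hz panel is held to 72 FPS, and it only reaches 144 FPS in moments when it can render a frame within a single refresh interval.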

At 60 Hz, G-Sync has a negative impact on the picture, probably because of the active vertical synchronization, even though the technology is supposed to work with V-Sync disabled. Now strafing (especially close to walls) causes pronounced stuttering. This is a potential problem for 60 Hz panels with G-Sync, at least in games like Skyrim. Fortunately, the ASUS VG248QE monitor can be switched to 144 Hz mode, and despite the active V-Sync, G-Sync works at that frame rate without complaints.

Disabling vertical synchronization completely in Skyrim leads to "sharper" mouse control. However, image tearing appears (not to mention other artifacts, such as shimmering water). Turning on G-Sync leaves the stuttering at 60 Hz, but at 144 Hz the situation improves significantly. Although we test the game with vertical synchronization disabled in our video card reviews, we would not recommend playing without it.

For Skyrim, perhaps the best solution is to turn off G-Sync and play at 60 Hz, which gives a constant 60 frames per second at the graphics settings of your choice.

G-Sync Technology Overview | G-SYNC - what did you wait for?

Even before we received a test sample of the ASUS monitor with G-Sync, we were pleased that Nvidia is working on a very real problem affecting games, for which no solution had yet been proposed. Until now, you could either turn vertical synchronization on or leave it off. Either decision came with compromises that hurt the gaming experience. If you prefer to leave vertical synchronization off until image tearing becomes unbearable, you could say that you are choosing the lesser of two evils.

G-Sync solves the problem by giving the monitor the ability to scan out the screen at a variable rate. Such innovation is the only way to keep advancing our industry while maintaining the technical advantage of personal computers over game consoles and platforms. Nvidia will no doubt face criticism for not developing a standard that competitors could adopt. However, the company uses DisplayPort 1.2 for its solution. As a result, just two months after the announcement of G-Sync, the technology was in our hands.
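To make the idea of a variable scan rate more concrete, here is a small Python sketch that compares when frames reach the screen on a fixed-refresh display with V-Sync and on a variable-refresh display. It is a toy model under our own assumptions, not a description of NVIDIA's implementation: the function names are hypothetical, frame-ready times are given in milliseconds, and the only limit on the variable-refresh panel is its maximum refresh rate.

```python
import math


def fixed_refresh_display_times(ready_ms, refresh_hz):
    """With V-Sync, a frame waits for the next fixed refresh boundary."""
    interval = 1000.0 / refresh_hz
    return [math.ceil(t / interval) * interval for t in ready_ms]


def variable_refresh_display_times(ready_ms, max_hz):
    """With a variable refresh rate, the panel starts a scan as soon as a
    frame is ready, but no sooner than the minimum interval after the
    previous scan."""
    min_interval = 1000.0 / max_hz
    shown, last = [], -math.inf
    for t in ready_ms:
        start = max(t, last + min_interval)
        shown.append(start)
        last = start
    return shown


if __name__ == "__main__":
    ready = [10.0, 30.0, 45.0]  # made-up frame completion times, in ms
    print("fixed 60 Hz:    ", fixed_refresh_display_times(ready, 60))
    print("variable 144 Hz:", variable_refresh_display_times(ready, 144))
```

In the fixed-refresh case every frame is delayed until the next boundary, which is the source of the extra lag; in the variable case the frames in this example are shown the moment they are ready.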

The question is whether NVIDIA delivers everything it promised with G-Sync.

Three talented developers praising the qualities of a technology you have not yet seen in action can inspire anyone. But if your first experience with G-Sync is based on NVIDIA's pendulum demo, you will certainly wonder whether such a huge difference is really possible, or whether the test is a special scenario that is too good to be true.

Naturally, when the technology is tested in real games, the effect is not so clear-cut. On the one hand, there were exclamations of "Wow!" and "That's crazy!"; on the other, "I think I can see the difference." The impact of enabling G-Sync is most noticeable when switching the display's refresh rate from 60 Hz to 144 Hz. But we also tried running the test at 60 Hz with G-Sync to find out what you will (hopefully) get with cheaper displays in the future. In some cases, simply moving from 60 to 144 Hz makes a big difference, especially if your video card can deliver a high frame rate.

Today we know that ASUS plans to add G-Sync support to the ASUS VG248QE, which, according to the company, will go on sale next year at a price of $400. The monitor has a native resolution of 1920x1080 pixels and a refresh rate of 144 Hz. The version without G-Sync has already received our Smart Buy award for outstanding performance. But for us personally, the 6-bit TN panel is a drawback. We would really like to see 2560x1440 pixels on an IPS panel. We would even settle for a 60 Hz refresh rate if that helps bring the price down.

Although we expect a whole bunch of announcements at CES, we have not heard any official NVIDIA comments about other displays with G-Sync modules or their prices. In addition, we are not sure what the company's plans are for the upgrade module, which is supposed to let you install G-Sync in an already purchased ASUS VG248QE monitor in 20 minutes.

For now, we can say that it is worth the wait. You will see that in some games the effect of the new technology is unmistakable, while in others it is less pronounced. But either way, G-Sync answers the age-old question of whether or not to enable vertical synchronization.

There is another interesting thought. Now that we have tested G-Sync, how much longer will AMD be able to avoid commenting? The company teased our readers in its interview (in English), noting that it would soon decide on this possibility. Does it have something in the works? The end of 2013 and the beginning of 2014 hold a lot of interesting news for us to discuss, including the Mantle version of Battlefield 4, the upcoming NVIDIA Maxwell architecture, G-Sync, AMD's XDMA engine with CrossFire support, and rumors about new dual-GPU video cards. Right now we lack video cards with more than 3 GB (NVIDIA) and 4 GB (AMD) of GDDR5 memory that cost less than $1000...
