Three merry letters: what you need to know about HDR. All you need to know about HDR in computer monitors. Which PC games support HDR

After CES and Computex 2017, it became clear that the first PC monitors supporting the HDR standard would reach the market by the end of the year. All the major manufacturers, including Asus, LG, Acer, Samsung and Dell, have announced such models. So let's go over a little of the theory behind HDR monitors.

HDR in PC format

HDR, or High Dynamic Range, is a standard for extending the color reproduction and contrast of video beyond the capabilities of standard hardware. Put simply, 4K resolution matters for image quality, but so do contrast, brightness and color saturation; HDR improves these three parameters and thereby improves image detail.

HDR requires the monitor to support 10-bit or 12-bit color. Standard PC monitors, however, can currently display only 6-bit or 8-bit color using the sRGB color gamut, which covers only a third of the HDR visual spectrum.
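To put the bit depths above into numbers, here is a minimal sketch that counts the brightness levels per channel and the total number of RGB colors for each depth. The function name `color_stats` is made up for this illustration.

```python
def color_stats(bits_per_channel: int) -> tuple[int, int]:
    """Return (levels per channel, total RGB colors) for a given bit depth."""
    levels = 2 ** bits_per_channel
    # Three channels (red, green, blue), each with `levels` possible values.
    return levels, levels ** 3


for bits in (6, 8, 10, 12):
    levels, colors = color_stats(bits)
    print(f"{bits:>2}-bit: {levels:>5} levels per channel, {colors:,} colors")
```

Each extra bit doubles the number of levels per channel, so moving from 8 to 10 bits gives four times as many steps in every gradient.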

Of course, some monitors do meet the color requirements: models that support so-called Wide Color Gamut. However, their capabilities are usable only in professional programs. Games and other software simply ignore the additional colors and often look "blurred."

The HDR standard avoids this confusion by including metadata in the video stream that describes how to map the color space correctly. This helps render the image properly and lets all software make the best use of the display's capabilities.

Standards

Currently there are four standards, but two are most common in consumer electronics: the proprietary Dolby Vision, with 12-bit color and dynamic metadata, and the open HDR10 standard, which supports 10-bit color and supplies only static metadata at the start of the video stream. The other two standards are HLG, developed by the BBC and used on YouTube, and Advanced HDR, created by Technicolor and used in Europe.

But back to HDR in PC monitors. Because of licensing fees and additional hardware, Dolby Vision is the more expensive of the two standards. Although it provides better color depth and dynamic frame-by-frame adjustment, game developers are focusing on the cheaper but quite acceptable HDR10. This is true not only of PC hardware makers but also of console makers: Microsoft with the Xbox One S and Xbox One X, and Sony with the PS4 and PS4 Pro.

A recent Dolby Vision update simplified integrating the technology into games and graphics cards through driver updates. Since March, NVIDIA has been one of the main supporters of Dolby Vision in games such as Mass Effect: Andromeda.

Photo from the NVIDIA booth at Computex 2017, showing a standard SDR monitor (left) and an HDR monitor (right) running Mass Effect: Andromeda. Photo: TechPowerUp.

The problem with HDR for PC gamers

If you have enough money, buying an HDR monitor is not a problem. The question is what content you can actually use in HDR, and here the news is bad for gamers. Yes, some new games ship with HDR support, but older ones cannot take advantage of the higher contrast.

Only a few games support HDR: Shadow Warrior 2, Deus Ex: Mankind Divided, Hitman, Resident Evil 7, Obduction, Paragon and the aforementioned Mass Effect: Andromeda. Need for Speed: Payback and Star Wars: Battlefront 2 will appear soon.

To see all the delights of the HDR10 picture that the Xbox One S, PlayStation 4 and PlayStation 4 Pro can output, you need a TV that supports this format. But then everything gets easier: you can photograph the screen and see the difference.

At a special Sony presentation held as part of the Tokyo Game Show, Uncharted 4 and Horizon: Zero Dawn were shown with HDR both on and off. All that remained was to take photos, which the correspondents of the Japanese publication Game Watch did.

In the case of Horizon: Zero Dawn the difference is obvious: the first photo shows HDR on, the second shows it off. With Uncharted 4 things are more complicated: in both photos a diagonal line runs through the image. The part of the image to the left of the line shows HDR off, the part to the right shows it on.

The same presentation showed versions of Final Fantasy XV, Infamous First Light and Resident Evil 7: Biohazard for PlayStation 4 Pro. It is hard to say anything definite about Biohazard and First Light, but FFXV clearly looked better than on the regular PlayStation 4. It seems fans will have to pay not only for the game but also for the new console.

Dynamic range is more important than 4K.


HDR cannot be seen on an SDR display, so in comparisons like this the picture on the left is usually degraded on purpose to make the point clear.

4K as a standard has finally gone mainstream. 2017 can safely be called the turning point at which this resolution became truly consumer-grade. Panel prices dropped below 500 dollars even for large diagonals, and the content itself, albeit with some strain, began to meet the necessary requirements.

Consoles provided invaluable support here. The arrival of the PS4 Pro and Xbox One X significantly accelerated the adoption of 4K screens. Gradually (though not without a heap of caveats) the extensive Hollywood film library is being transferred to 4K as well. Almost all original Netflix shows can already be watched in decent Ultra HD.

The difference between 4K and FULL HD

But is 4K resolution really necessary on a TV? On a monitor, which usually sits a few tens of centimeters from the eyes, a Full HD picture starts to break up into visible pixels at 27 inches. For this reason Retina / 5K and even 1440p are becoming increasingly popular.

Among TV viewers, adoption is slower: from three meters, at least some difference becomes visible only from 43 inches, and ideally a diagonal of more than 49 inches is needed to justify buying a 4K television. Obviously such a luxury is out of reach for most Russian apartments, even if the money is there: sometimes there is simply nowhere to put a huge TV.
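The reasoning about diagonals and viewing distances can be checked with a rough estimate of how many pixels fall within one degree of the viewer's field of view. This is a sketch under assumptions that are not in the article: a 50 cm monitor distance, and the common rule of thumb that around 60 pixels per degree is where individual pixels stop being distinguishable for normal eyesight. The function name is made up for the example.

```python
import math


def pixels_per_degree(diagonal_in: float, width_px: int, height_px: int,
                      distance_cm: float) -> float:
    """Approximate number of pixels covering one degree of the field of view."""
    diagonal_px = math.hypot(width_px, height_px)
    ppi = diagonal_px / diagonal_in            # pixels per inch of the panel
    distance_in = distance_cm / 2.54
    # Length on the screen (in inches) subtended by one degree at this distance.
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


cases = [
    ("27-inch Full HD monitor at 50 cm", 27, 1920, 1080, 50),
    ("43-inch Full HD TV at 3 m", 43, 1920, 1080, 300),
    ("43-inch 4K TV at 3 m", 43, 3840, 2160, 300),
]
for label, diag, w, h, dist in cases:
    print(f"{label}: {pixels_per_degree(diag, w, h, dist):.0f} pixels per degree")
```

Under these assumptions the desktop monitor lands well below the threshold, while even a Full HD television at three meters is already above it, which matches the observation that 4K pays off on a TV only at large diagonals.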

Ultra HDR View

The author of this article had roughly the same thoughts before buying a new TV: take a good FHD set and forget about 4K? The idea seems reasonable only at first glance, because on decent panels HDR comes along with the resolution. And this seemingly little-promoted technology really does feel like a genuine leap in image quality, even though there is not as much content for these standards as one would like.

The purpose of this material is to explain simply and accessibly what HDR is and how to use it. The process of buying and installing a TV with 4K and HDR is covered in a separate DTF article; here let's look at the terms in more detail.

What HDR is and why you need it

Visual difference

Let's start with the fact that the term HDR itself is like a clumsy marketer's joke. It creates unnecessary confusion right from the start. The HDR found in televisions has nothing to do with the similarly named technology in smartphones and cameras. In photography, HDR is a combination of several images taken at different exposures, where the goal is a result that is as balanced as possible in both shadows and highlights.

With video things are different: here we are talking about the total amount of information. Before HDR appeared, the standard for video, including Blu-ray, was 8 bits. That gives good color coverage, but modern panels, especially OLED, can display far more shades, gradients and colors than 8-bit sources allow.

The new 10-bit standard is meant to solve this problem; it carries significantly more information about the brightness, saturation, depth and color of the scene. The new HDMI 2.0 protocol was developed for it.

But do not rush to replace all your cables along with the TV! Old cables are compatible with HDMI 2.0a, whatever marketers may tell you. The main thing is that they are marked "High Speed with Ethernet". Only bandwidth matters; the connectors themselves have not changed.

At the time of writing, HDR on TVs is built around 10 bits, although the standard for digital cinematography is 14-bit RAW (and film is higher still), so even modern panels are still far from fully displaying the information that directors and editors of many films work with.

Okay, but what does it give in practice?

Example of HDR vs SDR based on Uncharted 4

The sun begins to shine noticeably brighter in scenes, and you get a sense that a single frame can contain several light sources of different brightness and saturation. The problem of pixelated halftones in dark scenes disappears, and gradients and complex color blends gain volume. The sky is no longer blown out and no longer merges with the ground at the horizon. In short, you see the picture more as your own eye would than through the limited eyepiece of a camera.

At the moment video games benefit from the technology more than anything else, since their varied real-time lighting is finally displayed more or less correctly. Much depends on how well the source is processed for HDR, and there is plenty of outright hack work, but reference titles for the format are now appearing, such as "Guardians of the Galaxy Vol. 2" or "John Wick: Chapter 2".

TV manufacturers are your enemies

For now HDR, like any emerging technology, is the "Wild West". The "Ready for HDR" label hangs on all new TVs, regardless of whether they can adequately display 10 bits or not. There is a big difference between a real HDR TV and a panel that merely accepts HDR content, reduces it to 8 bits and simply boosts the colors and contrast.

It is sometimes hard to catch manufacturers out. Check whether the panel is declared as 10-bit and whether it complies with the open HDR10 standard. A sure sign of a real HDR panel is support for Wide Color Gamut. Without it HDR loses any practical meaning.

A noticeable share of LCD TVs use 8-bit panels that simulate the missing colors with special algorithms. The HDR picture on such panels is a little worse, but they are noticeably cheaper.
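The article does not name the "special algorithms". One widely used approach is temporal dithering, often called frame rate control (FRC), and the sketch below assumes that technique. The panel alternates between two neighboring 8-bit levels so that the average over several frames approximates the requested 10-bit level. The function name and the four-frame cycle are illustrative choices, not a description of any particular TV.

```python
def frc_frames(level_10bit: int, n_frames: int = 4) -> list[int]:
    """Approximate a 10-bit level with a sequence of 8-bit frames (toy FRC)."""
    if not 0 <= level_10bit <= 1023:
        raise ValueError("10-bit level must be in the range 0..1023")
    # A 10-bit value is an 8-bit value times 4 plus a remainder of 0..3.
    base, remainder = divmod(level_10bit, 4)
    frames = []
    for i in range(n_frames):
        # Show the brighter neighbor in `remainder` out of every 4 frames.
        bump = 1 if (i % 4) < remainder else 0
        frames.append(min(base + bump, 255))  # clamp at the 8-bit maximum
    return frames


target = 514                      # a 10-bit level between 8-bit steps 128 and 129
frames = frc_frames(target)
print(frames, "average:", sum(frames) / len(frames), "target:", target / 4)
```

Averaged over the cycle the eye sees an intermediate shade, which is why such panels can accept a 10-bit signal while physically switching only 256 levels per channel.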

Return of "bit" wars

At every turn in the evolution of the picture, a standards war can break out. But whereas the battle between Blu-ray and HD-DVD ended badly for the latter, including for the consumers who bought the hardware, the battle of HDR10 against Dolby Vision will most likely end in a bloodless draw.

The HDR10 standard carries fewer colors and supports only 10 bits, but it is fully open. The Dolby standard preserves more shades and extends to 12 bits, but it is harder to follow and implement. In any case, support for one technology or the other is a matter of a simple software patch, and games already work with HDR10, since both Sony and Microsoft chose it for their consoles.

TVs themselves often support several standards at once, so there is no need to worry about it now.

What to watch on?

As for the screen, it is of course better to get an OLED or its equivalents. You will get deep blacks and full support for all the charms of HDR. If your wallet cannot stretch to about 80 thousand for a top TV, do not despair. The LCD models of 2017 have finally outgrown their teething problems, and you will notice the difference between HDR and SDR immediately, even if they lose out in black levels and brightness. The author of this article has just such an LCD panel with HDR support and can assure you that the difference from standard-color content is visible from the first seconds.

As for the source, all modern game consoles output HDR in one form or another (except the Switch and the original "fat" Xbox One). The PS4 outputs HDR only (no 4K), while the Xbox One S / X can play UHD discs and send native 4K HDR straight to the TV. Among online services, the standard is already supported by Netflix and Amazon, and Netflix carries both an HDR10 library and Dolby Vision content.

And what to watch?

All original Netflix content since 2016, plus all the 4K films that studios release on disc and digitally. A collection of Christopher Nolan's films, whose digitization the director supervised personally, will appear very soon. As with "The Dark Knight" on Blu-ray, it will surely set the standard for both UHD masters and HDR for years to come.

What to play?

A noticeable number of games support HDR even on the "base" consoles. Games such as Horizon Zero Dawn, Uncharted 4 and Gran Turismo Sport show off the technology especially brightly (pardon the pun).

The last of these was built for HDR from scratch and therefore highlights all the advantages of the extended range of brightness and color. Polyphony Digital developed HDR cameras specifically for GT Sport to capture real-world footage and then calibrate the game against it. And the number of colors the game outputs still exceeds the capabilities of even the most expensive panels. A benchmark "to grow into", as they say.

However, not all games are adapted to HDR equally well, so read reviews online and watch Digital Foundry's analyses. There is no need to worry, though: developers understand the possibilities of the extended range better and better, so the quality of console content will only grow.

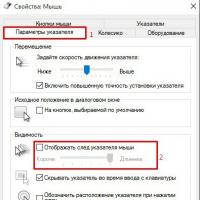

On PC things are not so smooth at the moment. There are few HDR games, and the image output itself suffers from color reproduction problems at the system level (buggy drivers, Windows quirks and so on). The author's experience with the HDR / Windows 10 combination was mixed. Besides, popular media players work poorly with HDR, so you will have to wait. Judging by how quickly the PC adapted to 3D, a sensible HDR implementation on computers is about six months away. And the library will catch up too.

So everything is fine, but what are the downsides?

Calibration screens in games usually look simpler

HDR at its current stage has plenty of downsides too, but I will single out a couple of critical ones.

- Your equipment is most likely not ready for HDMI 2.0a and HDCP 2.2, so along with the TV you will almost certainly have to replace the receiver. I ran into this (first-revision PS VR), and so did Vadim (a receiver with HDMI 1.4).

- If it seems to you that HDR spoils the picture or makes it look faded, the screen needs calibrating. Some games offer convenient calibration tools (COD WWII, GT Sport), but in most you have to rely on your own instinct. My advice: launch the game in a scene that is colorful and bright, or, conversely, pick a night level at dusk. This will let you set up your cool new TV quickly and spare you the initial confusion and frustration.

The time has come

10 bits is not the limit, but after two weeks with an HDR TV you return to ordinary games and films without enthusiasm. All the more pleasing is that this year HDR stopped being the preserve of geeks and the wealthy and finally went to the people. The technology will get better and clearer, but if you have been waiting for "that very moment" to change your TV, here it is: a great time to finally see virtual worlds in full color.

When CES took place in early 2017, it became clear that computer store shelves would soon fill with monitors supporting the HDR standard. All the major manufacturers already sell such models, each with impressive specifications. We will soon cover one of them in detail; for now let's focus on the theory that will help you decide how justified buying an HDR monitor is.

HDR in PC format

The standard explanation describes HDR (High Dynamic Range) as a set of standards intended to extend the color reproduction and contrast of video and images beyond standard, "hard" hardware capabilities. Put simply, HDR improves contrast, brightness and color saturation, producing a several times more detailed image.

HDR versus SDR

From a practical point of view, for most users this means completely replacing their existing devices in order to get an obvious difference in image quality. Why a complete replacement? Because standard devices, monitors in particular, do not meet HDR certification requirements.

Let's start with the brightness requirements. To be considered "HDR ready", a display must reach at least 1000 cd/m² (nits of brightness). High-end monitors deliver 300-400 nits, so they are not even close to what is needed. Good laptops manage about 100 nits. Even smartphone displays, designed to stay visible in bright sunlight, rarely exceed 800 nits (the Galaxy Note8, at 1200 nits, is one of the exceptions). In other words, 99% of displays currently do not support HDR.

Now to color reproduction. HDR requires the monitor to support a color depth of 10 or 12 bits. Standard monitors, however, can display only 6-bit or 8-bit color using the sRGB color gamut, which covers only a third of the HDR visual spectrum.
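The two requirements just described, at least 1000 nits of brightness and at least 10-bit color, can be written down as a simple check. This is only a sketch: the `Display` class, the function name and the example displays are hypothetical, and the numbers are illustrative rather than measurements of real products.

```python
from dataclasses import dataclass

MIN_PEAK_NITS = 1000   # brightness threshold quoted in the text
MIN_BIT_DEPTH = 10     # color depth threshold quoted in the text


@dataclass
class Display:
    name: str
    peak_nits: int
    bit_depth: int


def hdr_shortfalls(display: Display) -> list[str]:
    """List which of the two requirements from the text a display fails."""
    problems = []
    if display.peak_nits < MIN_PEAK_NITS:
        problems.append(f"brightness {display.peak_nits} < {MIN_PEAK_NITS} nits")
    if display.bit_depth < MIN_BIT_DEPTH:
        problems.append(f"color depth {display.bit_depth} < {MIN_BIT_DEPTH} bits")
    return problems


# Hypothetical displays; the figures are illustrative only.
examples = [
    Display("typical high-end monitor", 400, 8),
    Display("wide-gamut professional monitor", 350, 10),
    Display("hypothetical HDR monitor", 1000, 10),
]
for d in examples:
    problems = hdr_shortfalls(d)
    print(d.name, "->", "; ".join(problems) if problems else "meets both thresholds")
```

The wide-gamut example shows why color depth alone is not enough: it passes the color check but still falls short on brightness.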

Monitors with Wide Color Gamut (WCG) technology meet the color requirements, but their extended capabilities are usable only in professional programs (graphics editors, for example). Games and other software simply ignore the additional colors and often look "blurred" if the hardware cannot emulate a reduced color space.

HDR avoids this confusion thanks to metadata that describes how to map the color space correctly. This is what helps render the image properly and lets all software make optimal use of the display's capabilities.

Here, however, a big "but" is in order for those of you who work in photography, graphic design and video processing. The brighter, richer colors provided by HDR monitors are unlikely to be to your liking. Not that you will dislike them; they simply will not meet your professional needs, because their "vividness" comes at the expense of realistic color reproduction. WCG models remain the ideal choice for you. So if you are reading this text to find out what advantages the technology brings to your field, you simply will not find any.

Two Dell designer monitors. Left: a WCG screen with realistic color reproduction. Right: an HDR display. The high color saturation is easy to notice.

A mess of standards

Next we will talk about the experience from the point of view of the ordinary user and PC gamer, but first let me untangle the huge knot of HDR standards for you. Currently there are four standards, but only two are widespread in consumer electronics: the proprietary Dolby Vision, with its 12-bit color and dynamic metadata, and the open HDR10 standard, which supports 10-bit color and provides only static metadata. The other two standards are HLG, developed by the BBC and used on YouTube, and Advanced HDR, created by Technicolor and used mainly in Europe.

The difference between SDR, HDR with static metadata (HDR10) and HDR with dynamic metadata (Dolby Vision).
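The practical difference between static and dynamic metadata can be shown with a toy model. It assumes a deliberately simplified linear scaling of brightness; real displays use non-linear tone-mapping curves, and the scene names, peak values and function name below are invented for the example. With static metadata a single peak value describes the whole title, so dark scenes are dimmed along with bright ones. With dynamic metadata each scene carries its own peak.

```python
def scale_factor(content_peak_nits: float, display_peak_nits: float) -> float:
    """Linear factor that fits the content peak into the display peak (never brightens)."""
    return min(1.0, display_peak_nits / content_peak_nits)


# Hypothetical peak brightness of three scenes in one film, in nits.
scenes = {"night street": 200.0, "indoor": 600.0, "sunlit desert": 4000.0}
display_peak = 1000.0

# Static metadata: one peak value (the maximum over the whole title) for every scene.
static_factor = scale_factor(max(scenes.values()), display_peak)
static_result = {name: peak * static_factor for name, peak in scenes.items()}

# Dynamic metadata: every scene is scaled according to its own peak.
dynamic_result = {name: peak * scale_factor(peak, display_peak)
                  for name, peak in scenes.items()}

for name in scenes:
    print(f"{name}: static {static_result[name]:.0f} nits, "
          f"dynamic {dynamic_result[name]:.0f} nits")
```

In this toy model the night scene is shown at a quarter of its intended brightness under static metadata, while dynamic metadata leaves it untouched and only compresses the scene that actually exceeds the display's capabilities.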

But back to HDR in computer monitors, with games added to the mix. Because it requires license fees and additional hardware, Dolby Vision is the more expensive of the two standards, and its high cost is the main factor behind its slow adoption. Although Dolby Vision provides better color depth and dynamic frame-by-frame adjustment, game developers choose the cheaper but adequate HDR10. This applies not only to PC manufacturers but also to consoles: Microsoft (Xbox One S and Xbox One X) and Sony (PS4 and PS4 Pro). The main supporters of HDR10, such as Samsung and Amazon, even actively dispute the argument that Dolby Vision provides higher image quality. This struggle led to a kind of update called HDR10+, which addresses some of HDR10's weaknesses.

All this suggests that HDR10 will become the dominant HDR standard for computer monitors and games, right? No, not at all. Recently the Dolby Vision developers simplified the integration of their technology into games and graphics processors through patches, firmware or driver updates. In the spring of this year NVIDIA joined the ranks of Dolby Vision's key supporters.

NVIDIA booth at Computex 2017. On the left is a standard SDR monitor, on the right an HDR monitor. Photo: TechPowerUp.

(PC) gaming in HDR

Console owners have been luckier when it comes to HDR. They benefited from the standard's inclusion in high-end TVs, and console manufacturers and game developers (specifically for consoles) quickly saw the visual advantage of HDR screens over standard TVs. From a purely practical point of view, it is easier for a consumer to justify a large investment in the screen that serves as the home's entertainment center than in the one that sits on a desk.

Nevertheless, PC gamers can be grateful to their console comrades. The popularization of HDR in televisions such as the LG C6 and C7 series, which can be used as giant PC monitors, allowed PC users to enjoy the first wave of HDR content created specifically for PC.

But still, which monitor models deserve attention? Three of the most promising HDR monitor announcements quickly disappointed: in fact they do not meet all the requirements of HDR10 and therefore do not support true HDR. Two of them, the Dell S2718D and LG 32UD99, can accept an HDR signal but lack the color range or brightness needed to make use of HDR content. The last, the BenQ SW320, meets the color requirements but not the brightness ones. That leaves the following models on the list: Acer Predator X27, Acer Predator X35, ASUS ROG Swift PG27UQ, ASUS ROG Swift PG35VQ, Samsung CHG70 and Samsung CHG90.

The ASUS ROG Swift PG35VQ is one of the most promising HDR models at the moment

The next logical question: what about the graphics processor? In this respect computers have long been ready, thanks to the war between NVIDIA and AMD and their mid-range and high-end video cards.

NVIDIA began integrating HDR into its Maxwell-generation graphics processors (the previous 900 series) and continued certification with the new 1000 series, which uses the Pascal architecture. AMD's first certified video cards were the 390X and Polaris families. Simply put, if your video card was released in the last four years, you should not have any problems. However, if you want to use everything a new HDR display can offer, you will have to buy one of the newest video cards.

The real problem with HDR for PC gamers

If money is no object, buying an HDR monitor and matching computer hardware will not be a problem. But before running to the store, look into the availability of suitable content. Unfortunately, the situation here is so-so. Yes, there are new games with HDR support built in from the start, but old games cannot adapt to the technology, at least not without special patches.

HDR integration does not require large-scale software changes, but that does not alter the fact that the amount of HDR content currently available to PC gamers is not that large. In fact, only a few games support the standard: Shadow Warrior 2, Deus Ex: Mankind Divided, Hitman, Resident Evil 7, Obduction, Paragon, the PC version of Mass Effect: Andromeda, Need for Speed: Payback and Star Wars: Battlefront 2. The cross-platform games Gears of War, Battlefield and Forza Horizon 3 support HDR in their console versions, but the feature is missing on PC. Some time ago NVIDIA was actively working on an HDR patch for Rise of the Tomb Raider, but the company has given no news on its progress for a long time.

Game developers are embracing the idea of HDR, but console games will be the first to get such support. PC gamers are (once again) left in the background. It will take several years before HDR becomes a truly important feature of computer monitors. At the moment this standard is not among the parameters a gaming monitor must have to deserve attention. As with 4K, HDR is an investment in the future.

One piece of advice in conclusion: buy a monitor today that meets your current needs. If HDR matters to you, this pleasant bonus will cost you a few hundred extra dollars, but it will be a guarantee (albeit a small one) that your new monitor stays relevant for a long time.

| Parameter | Value |
|---|---|
| Chip code name | GP104 |
| Production technology | 16 nm FinFET |
| Number of transistors | 7.2 billion |
| Die area | 314 mm² |
| Architecture | |
| Hardware DirectX support | |
| Memory bus | 256-bit |
| Core frequency | 1607 (1733) MHz |
| Computing blocks | 20 streaming multiprocessors comprising 2560 scalar ALUs for floating-point calculations under the IEEE 754-2008 standard |
| Texturing blocks | 160 texture addressing and filtering units with support for FP16 and FP32 components in textures and trilinear and anisotropic filtering for all texture formats |
| Monitor support | |

| Specifications of the reference GeForce GTX 1080 video card | |
|---|---|
| Parameter | Value |
| Core frequency | 1607 (1733) MHz |
| Number of CUDA cores | 2560 |
| Number of texture units | 160 |
| Number of ROP units | 64 |
| Effective memory frequency | 10,000 (4 × 2500) MHz |
| Memory type | GDDR5X |
| Memory bus | 256-bit |
| Memory size | 8 GB |
| Memory bandwidth | 320 GB/s |
| Compute performance | about 9 teraflops |
| Pixel fill rate | 103 gigapixels/s |
| Texture fill rate | 257 gigatexels/s |
| Bus | PCI Express 3.0 |
| Connectors | one Dual-Link DVI, one HDMI and three DisplayPort |
| Power consumption | up to 180 W |
| Auxiliary power | one 8-pin connector |
| | 2 |
| Recommended price | $599-699 (USA), 54990 rubles (Russia) |

The new GeForce GTX 1080 is a logical first product for the new GeForce series: it differs from its direct predecessor only in the changed generation number. The newcomer does not merely replace the top solutions in the company's current line; for a while it also became the flagship of the new series, until the Titan X was released on an even more powerful GPU. Below it in the hierarchy sits the already announced GeForce GTX 1070, based on a cut-down version of the GP104 chip, which we will consider below.

The recommended prices for NVIDIA's new video card are $599 and $699 for the regular versions and the special Founders Edition (see below), respectively. That is a fairly good offer given that the GTX 1080 is ahead of not only the GTX 980 Ti but also the Titan X. Today the newcomer is, without question, the best-performing single-chip video card on the market, and yet it costs less than the most powerful cards of the previous generation. AMD in fact has no competitor to the GeForce GTX 1080 for now, so NVIDIA was able to set a price that suits it.

The card is based on the GP104 chip, which has a 256-bit memory bus, but the new GDDR5X memory type runs at a very high effective frequency of 10 GHz, giving a high peak bandwidth of 320 GB/s, almost on the level of the GTX 980 Ti with its 384-bit bus. A video card with such a bus could carry 4 or 8 GB of memory, but fitting the smaller amount to such a powerful solution would be foolish in modern conditions, so the GTX 1080 quite logically received 8 GB, enough to run any 3D application at any quality settings for several years to come.
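The bandwidth figure follows directly from the bus width and the effective memory frequency, as this short sketch shows. The GTX 1080 numbers come from the text; the 7 GHz effective GDDR5 frequency used for the GTX 980 Ti is an assumption not stated in the article, and the function name is made up for the example.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, effective_rate_ghz: float) -> float:
    """Peak bandwidth in GB/s: bytes moved per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_rate_ghz


gtx_1080 = memory_bandwidth_gb_s(256, 10.0)   # figures from the text
gtx_980_ti = memory_bandwidth_gb_s(384, 7.0)  # 7 GHz is an assumption, see above

print(f"GTX 1080:   {gtx_1080:.0f} GB/s")
print(f"GTX 980 Ti: {gtx_980_ti:.0f} GB/s")
```

The narrower bus is almost fully compensated by the faster memory, which is the point the paragraph above makes.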

The GeForce GTX 1080 printed circuit board, for obvious reasons, differs considerably from the company's previous PCBs. Typical power consumption for the newcomer is 180 W, slightly higher than the GTX 980 but noticeably lower than the less powerful Titan X and GTX 980 Ti. The reference board has the familiar set of connectors for image output devices: one Dual-Link DVI, one HDMI and three DisplayPort.

Founders Edition reference design

When the GeForce GTX 1080 was announced at the beginning of May, a special edition of the card called Founders Edition was announced as well, priced higher than the regular cards from the company's partners. In essence, this edition is the reference design of the card and cooling system, manufactured by NVIDIA itself. Opinions on such cards may differ, but a reference design developed by the company's engineers and built from high-quality components has its fans.

Whether they will pay a few thousand rubles more for a card from NVIDIA itself is a question only practice can answer. In any case, it is the reference cards from NVIDIA, at the higher price, that will go on sale first, and there will not be much to choose from. That happens with every announcement, but the reference GeForce GTX 1080 differs in that it is planned to stay on sale throughout its lifetime, right up to the release of next-generation solutions.

NVIDIA believes this edition has advantages even over the best designs from its partners. For example, the two-slot cooler design makes it easy to build both relatively small form factor gaming PCs and multi-GPU systems around this powerful card (even though the company does not recommend three- and four-GPU operation). The GeForce GTX 1080 Founders Edition benefits from an efficient cooler with a vapor chamber and a fan that exhausts heated air from the case; it is NVIDIA's first such solution that consumes less than 250 W.

Compared with the company's previous reference designs, the power circuit was upgraded from four phases to five. NVIDIA also mentions improved components and reduced electrical noise, which improve voltage stability and overclocking potential. As a result of all these improvements, the power efficiency of the reference board rose by 6% compared with the GeForce GTX 980.

And to set the Founders Edition apart from "ordinary" GeForce GTX 1080 models visually as well, an unusual "chiseled" shroud design was developed for it. That, however, probably complicated the shape of the vapor chamber and the heatsink (see photo), which may have been one of the reasons for the $100 surcharge for this special edition. To repeat: at the start of sales buyers will not have much choice, but later it will be possible to choose either a custom-designed card from one of the company's partners or one made by NVIDIA itself.

The new-generation Pascal graphics architecture

The GeForce GTX 1080 is the company's first solution based on the GP104 chip, which belongs to NVIDIA's new-generation graphics architecture, Pascal. Although the new architecture builds on solutions worked out back in Maxwell, it has important functional differences, which we will describe below. From a global point of view, the main change is the new manufacturing process used to produce the new graphics processor.

The use of the 16 nm FinFET process in the production of GP104 GPUs at TSMC's fabs made it possible to significantly increase the complexity of the chip while keeping its area and cost relatively low. Compare the transistor counts and die areas of GP104 and GM204: they are close in area (the new die is even slightly smaller), but the Pascal chip has noticeably more transistors and, accordingly, more execution units, including those that provide new functionality.

From an architectural point of view, the first gaming Pascal is very similar to comparable Maxwell solutions, although there are some differences. Like Maxwell, Pascal processors will come in different configurations of Graphics Processing Clusters (GPC), Streaming Multiprocessors (SM) and memory controllers. The SM is a highly parallel multiprocessor that schedules and runs warps (groups of 32 threads) on CUDA cores and other execution units in the multiprocessor. Detailed information on how all these blocks are built can be found in our reviews of previous NVIDIA solutions.

Each SM is paired with a PolyMorph Engine, which handles texture fetches, tessellation, transformation, vertex attribute setup and perspective correction. Unlike the company's previous solutions, the PolyMorph Engine in the GP104 chip also contains a new Simultaneous Multi-Projection unit, which we will discuss later. The combination of an SM with one PolyMorph Engine is traditionally called a TPC (Texture Processor Cluster) by NVIDIA.

The full GP104 chip in the GeForce GTX 1080 contains four GPC clusters and 20 SM multiprocessors, as well as eight memory controllers combined with 64 ROP units. Each GPC cluster has a dedicated raster engine and includes five SM multiprocessors. Each multiprocessor, in turn, consists of 128 CUDA cores, a 256 KB register file, 96 KB of shared memory, 48 KB of first-level cache and eight TMU texture units. In total, GP104 contains 2560 CUDA cores and 160 TMUs.

The GPU at the heart of the GeForce GTX 1080 also contains eight 32-bit memory controllers (unlike the 64-bit controllers used previously), which gives a 256-bit memory bus in total. Each memory controller is tied to eight ROPs and 256 KB of second-level cache. That is, the entire GP104 chip contains 64 ROPs and 2048 KB of second-level cache.
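The totals quoted above follow directly from the per-unit figures. The short Python sketch below simply multiplies them out; the constant names are chosen here for illustration and are not NVIDIA terminology.

```python
# Per-unit figures quoted in the text for the GP104 chip in the GeForce GTX 1080.
GPC_COUNT = 4
SM_PER_GPC = 5
CUDA_CORES_PER_SM = 128
TMU_PER_SM = 8
MEM_CONTROLLERS = 8
CONTROLLER_WIDTH_BITS = 32
ROP_PER_CONTROLLER = 8
L2_KB_PER_CONTROLLER = 256

sm_total = GPC_COUNT * SM_PER_GPC
totals = {
    "sm": sm_total,
    "cuda_cores": sm_total * CUDA_CORES_PER_SM,
    "tmu": sm_total * TMU_PER_SM,
    "bus_width_bits": MEM_CONTROLLERS * CONTROLLER_WIDTH_BITS,
    "rop": MEM_CONTROLLERS * ROP_PER_CONTROLLER,
    "l2_kb": MEM_CONTROLLERS * L2_KB_PER_CONTROLLER,
}

for name, value in totals.items():
    print(f"{name}: {value}")
```

The results match the figures in the text: 20 SMs, 2560 CUDA cores, 160 TMUs, a 256-bit bus, 64 ROPs and 2048 KB of L2 cache.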

Thanks to architectural optimizations and the new manufacturing process, the first gaming Pascal has become the most energy-efficient GPU of all time. Both the advanced 16 nm FinFET process and the architectural optimizations made in Pascal relative to Maxwell contribute to this. NVIDIA was able to raise the clock frequency even more than it had expected from the move to the new process: GP104 runs at a higher frequency than a hypothetical GM204 made on the 16 nm process would. To achieve this, NVIDIA engineers had to carefully examine and optimize all the bottlenecks of previous solutions that prevented clocking above a certain threshold. As a result, the new GeForce GTX 1080 runs at a frequency more than 40% higher than the GeForce GTX 980. But these are not all the changes related to GPU clocks.

GPU Boost 3.0 technology

As we know well from previous NVIDIA video cards, their GPUs use the GPU Boost hardware technology, designed to raise the GPU clock in situations where it has not yet reached its power and thermal limits. Over the years this algorithm has changed a great deal, and the Pascal chip already uses the third generation of the technology, GPU Boost 3.0, whose main innovation is finer control of boost frequencies depending on voltage.

If you recall how previous versions of the technology worked, the difference between the base frequency (the guaranteed minimum below which the GPU does not fall, at least in games) and the boost frequency was fixed. That is, the boost frequency was always a certain number of megahertz above the base. GPU Boost 3.0 makes it possible to set the boost frequency offset for each voltage separately. This is easiest to understand from the illustration:

On the left is GPU Boost of the second version, on the right the third, which appeared in Pascal. The fixed difference between the base and boost frequencies did not allow the capabilities of the GPU to be used fully: in some cases GPUs of previous generations could have run faster at a given voltage, but the fixed boost offset did not allow it. GPU Boost 3.0 adds this ability, and the boost frequency can be set for each individual voltage value, squeezing everything out of the GPU.

Controlling overclocking and setting the boost frequency curve requires convenient utilities. NVIDIA does not make them itself, but helps its partners create such utilities to make overclocking easier (within reasonable limits, of course). For example, the new GPU Boost 3.0 functionality is already exposed in EVGA Precision XOC, which includes a dedicated overclocking scanner that automatically finds and sets the nonlinear difference between the base and boost frequencies for different voltage values by running a built-in performance and stability test. As a result, the user gets a boost frequency curve that ideally matches the capabilities of a particular chip, and which can also be modified manually.

As you can see in the screenshot of the utility, in addition to information about the GPU and the system, there are also overclocking settings: Power Target (typical power consumption during overclocking, as a percentage of the standard value), GPU Temp Target (maximum allowed GPU temperature), GPU Clock Offset (offset above the base frequency for all voltage values), Memory Offset (offset of the video memory frequency above the default value) and Overvoltage (the option to raise the voltage).

The Precision XOC utility includes three overclocking modes: Basic, Linear and Manual. In Basic mode you can set a single frequency offset (a fixed boost frequency) above the base, as with previous GPUs. Linear mode lets you set a linear change in frequency from the minimum to the maximum voltage value of the GPU. And in Manual mode you can set a unique GPU frequency value for each voltage point on the graph.
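The difference between the three modes can be sketched in a few lines of Python. This is only an illustration of the idea, not how Precision XOC is implemented: the function names, the voltage points and the offset values are all hypothetical, and the curve is modelled as a frequency offset per voltage point.

```python
def basic_curve(voltages, offset_mhz):
    """Basic mode: the same fixed offset at every voltage point."""
    return {v: offset_mhz for v in voltages}

def linear_curve(voltages, offset_at_min, offset_at_max):
    """Linear mode: offset interpolated between the min and max voltage.

    Needs at least two distinct voltage points."""
    v_min, v_max = min(voltages), max(voltages)
    span = v_max - v_min
    return {
        v: offset_at_min + (offset_at_max - offset_at_min) * (v - v_min) / span
        for v in voltages
    }

def manual_curve(offsets_by_voltage):
    """Manual mode: every voltage point gets its own offset."""
    return dict(offsets_by_voltage)

# Hypothetical voltage points in millivolts and offsets in MHz.
VOLTAGES_MV = [800, 900, 1000, 1100]
basic = basic_curve(VOLTAGES_MV, 100)
linear = linear_curve(VOLTAGES_MV, 50, 200)
manual = manual_curve({800: 120, 900: 150, 1000: 90, 1100: 60})

for v in VOLTAGES_MV:
    print(v, basic[v], linear[v], manual[v])
```

Basic mode corresponds to the fixed offset of earlier GPU Boost versions, while Manual mode is the per-voltage control that GPU Boost 3.0 adds.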

The utility also includes a dedicated scanner for automatic overclocking. You can either set your own frequency levels or let Precision XOC scan the GPU at all voltages and find the highest stable frequency for each point on the voltage/frequency curve fully automatically. During the scan, Precision XOC gradually raises the GPU frequency and checks for stability or the appearance of artifacts, building an ideal frequency/voltage curve that is unique to each specific chip.

The scanner can be tuned to your own requirements by setting the test duration for each voltage value, the minimum and maximum frequency to check, and the frequency step. Clearly, a small step and a reasonably long test duration give more stable results. The video driver and the system may become unstable during testing, but as long as the scanner itself does not hang, it will recover and continue searching for the optimal frequencies.
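The scanning procedure described above can be sketched as a simple search loop. The Python below is an assumption-laden illustration, not EVGA's algorithm: `scan_voltage_point`, `scan_curve` and the fake stability test with its made-up limits are hypothetical stand-ins for a real stress test.

```python
def scan_voltage_point(is_stable, min_offset, max_offset, step):
    """Raise the offset step by step and keep the last value that passed."""
    best = None
    offset = min_offset
    while offset <= max_offset:
        if not is_stable(offset):
            break
        best = offset
        offset += step
    return best

def scan_curve(voltages, stability_test, min_offset, max_offset, step):
    """Build a per-voltage offset curve, one scan per voltage point."""
    return {
        v: scan_voltage_point(lambda off, v=v: stability_test(v, off),
                              min_offset, max_offset, step)
        for v in voltages
    }

# Hypothetical stand-in for a real stress test: pretend each voltage point
# tolerates an offset up to some made-up limit (in MHz).
FAKE_LIMITS_MHZ = {800: 130, 900: 160, 1000: 95}

def fake_stability_test(voltage_mv, offset_mhz):
    return offset_mhz <= FAKE_LIMITS_MHZ[voltage_mv]

curve = scan_curve(FAKE_LIMITS_MHZ, fake_stability_test, 0, 200, 25)
print(curve)
```

With a 25 MHz step the scan stops at the last passing value below each limit; a smaller step would get closer to the true limit at the cost of more test runs, which is the trade-off the text mentions.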

New type of video memory GDDR5X and improved compression

So, the power of the GPU has grown noticeably while the memory bus has remained only 256 bits wide. Won't memory bandwidth limit overall performance, and what can be done about it? It seems that promising second-generation HBM memory is still too expensive to produce, so other options had to be found. Ever since GDDR5 memory appeared in 2009, NVIDIA engineers have been investigating new types of memory. This work resulted in the introduction of the new GDDR5X memory standard, the most complex and advanced standard to date, which provides a transfer rate of 10 Gbps.

NVIDIA gives an interesting example of how fast this is. Only 100 picoseconds pass between transmitted bits; in that time a beam of light travels only about one inch (about 2.5 cm). With GDDR5X memory, the receiving circuitry has less than half of that time to sample the value of the transmitted bit before the next one arrives. This is just to give an idea of how far modern technology has come.
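The 100-picosecond figure and the resulting peak bandwidth can be checked with simple arithmetic. The sketch below uses only the 10 Gbps transfer rate and the 256-bit bus width given in the text; the variable names are illustrative.

```python
DATA_RATE_GBPS = 10          # per-pin transfer rate quoted in the text
BUS_WIDTH_BITS = 256         # memory bus width quoted in the text

# Time between consecutive bits on one pin, in picoseconds.
bit_interval_ps = 1e12 / (DATA_RATE_GBPS * 1e9)

# Peak bandwidth: every pin of the bus moves DATA_RATE_GBPS gigabits per second.
peak_bandwidth_gb_s = DATA_RATE_GBPS * BUS_WIDTH_BITS / 8

print(f"bit interval: {bit_interval_ps:.0f} ps")
print(f"peak bandwidth: {peak_bandwidth_gb_s:.0f} GB/s")
```

At 10 Gbps per pin, a 256-bit bus works out to 320 GB/s of peak bandwidth.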

Reaching this speed required a new I/O architecture, which took several years of joint development with memory chip manufacturers. In addition to the higher data rate, energy efficiency has also improved: GDDR5X chips use a reduced voltage of 1.35 V and are manufactured using new technologies, which gives the same power consumption at a 43% higher frequency.

The company's engineers had to rework the data lines between the GPU and the memory chips and pay more attention to preventing signal loss and degradation along the entire path from memory to GPU and back. The illustration above shows the captured signal as a large symmetric "eye", which indicates good optimization of the whole chain and relative ease of capturing data from the signal. Moreover, the changes described above not only made it possible to use GDDR5X at an effective 10 GHz, but should also help achieve high memory bandwidth in future products that use the more familiar GDDR5 memory.

So the new memory brings a bandwidth increase of more than 40%. But is that enough? To further improve the efficiency of memory bandwidth use, NVIDIA continued to improve the advanced data compression introduced in previous architectures. The memory subsystem in the GeForce GTX 1080 uses improved and several new lossless data compression techniques designed to reduce bandwidth requirements - this is already the fourth generation of on-chip compression.

Compressing data in memory brings several benefits at once. Compression reduces the amount of data written to memory, and the same applies to data sent from video memory to the second-level cache, which improves the efficiency of the L2 cache, since a compressed tile (a block of several framebuffer pixels) is smaller than an uncompressed one. The amount of data sent between different points, such as the TMU texture unit and the framebuffer, also decreases.

The data compression pipeline in the GPU uses several algorithms, chosen depending on the "compressibility" of the data: the best of the available algorithms is selected for each case. One of the most important is delta color compression. This method encodes data as the difference between sequential values instead of the values themselves. The GPU calculates the difference in color values between the pixels in a block (tile) and stores the block as a certain averaged color for the entire block plus the per-pixel differences. This method usually suits graphics data well, since the colors of all pixels within a small tile often differ very little.
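The idea of storing a base color plus small per-pixel differences can be illustrated with a toy example. The Python sketch below is a deliberate simplification and not NVIDIA's hardware algorithm: it works on a single 8-bit channel, and the function names and the sample tile are hypothetical.

```python
def delta_encode(tile):
    """Encode a tile as a base value plus per-pixel differences from it."""
    base = sum(tile) // len(tile)          # "averaged" color for the block
    deltas = [p - base for p in tile]
    return base, deltas

def delta_decode(base, deltas):
    """Lossless inverse of delta_encode."""
    return [base + d for d in deltas]

def bits_needed(deltas):
    """Bits per delta for a signed value covering the largest difference."""
    largest = max(abs(d) for d in deltas)
    return largest.bit_length() + 1        # +1 for the sign bit

# Hypothetical tile: one 8-bit color channel of eight neighbouring pixels.
tile = [200, 201, 199, 200, 202, 200, 198, 200]
base, deltas = delta_encode(tile)

raw_bits = 8 * len(tile)
packed_bits = 8 + bits_needed(deltas) * len(deltas)
print(base, deltas, raw_bits, packed_bits)
```

Because neighbouring pixels are so similar, each difference fits in far fewer bits than a full color value, and decoding restores the tile exactly. When the pixels in a tile differ a lot, the deltas need as many bits as the original values and nothing is gained, which is why the hardware selects an algorithm per tile.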

The GP104 GPU in the GeForce GTX 1080 supports more compression algorithms than previous Maxwell chips. The 2:1 compression algorithm has become more efficient, and two new algorithms have been added: a 4:1 compression mode, suitable for cases where the color difference between the pixels of a block is very small, and an 8:1 mode, which combines constant 4:1 compression of 2×2 pixel blocks with 2:1 compression of the delta between blocks. When compression is not possible at all, it is not used.

In reality, however, the latter happens very rarely. This can be seen in the example screenshots from the game Project CARS, which NVIDIA provided to illustrate the increased compression ratio in Pascal. In the illustrations, the framebuffer tiles that the GPU was able to compress are painted purple, while tiles that could not be compressed losslessly keep their original color (top: Maxwell, bottom: Pascal).

As you can see, the new compression algorithms in GP104 really do work much better than in Maxwell. Although the old architecture could also compress most tiles in the scene, a large amount of grass and trees at the edges, as well as parts of the car, are not handled by the older compression algorithms. With the new techniques in Pascal enabled, only a very small number of image areas remain uncompressed - the improved efficiency is obvious.

As a result of the improved data compression, the GeForce GTX 1080 is able to significantly reduce the amount of data sent in each frame. In numbers, the improved compression saves about an additional 20% of effective memory bandwidth. Together with the more than 40% increase in memory bandwidth of the GeForce GTX 1080 over the GTX 980 from the use of GDDR5X memory, this gives about a 70% increase in effective memory bandwidth compared with the previous-generation model.
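The "about 70%" figure is what you get when the two gains are multiplied rather than added. The sketch below uses the rounded percentages from the text, so the result is approximate; the variable names are illustrative.

```python
GDDR5X_GAIN = 1.40        # "more than 40%" raw bandwidth gain over the GTX 980
COMPRESSION_GAIN = 1.20   # "about 20%" extra effective bandwidth from compression

# Independent gains compound multiplicatively.
effective_gain = GDDR5X_GAIN * COMPRESSION_GAIN
print(f"effective bandwidth gain: {(effective_gain - 1) * 100:.0f}%")
```

With exactly 40% and 20% the product is 1.68, that is, a gain of about 68%; since the memory gain is "more than 40%", the rounded total of roughly 70% is consistent.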

Async Compute: asynchronous computing support

Most modern games use complex computations in addition to graphics. For example, physics calculations do not have to be carried out before or after the graphics work; they can run simultaneously with it, since the two are not related and do not depend on each other within one frame. Other examples are post-processing of already rendered frames and audio processing, which can also be executed in parallel with rendering.

Another vivid example of this functionality is Asynchronous Time Warp, used in virtual reality systems to adjust the output frame according to the player's head movement right before it is displayed, interrupting the rendering of the next frame. Such asynchronous loading of the GPU makes it possible to use its execution units more efficiently.

Such workloads create two new GPU usage scenarios. The first involves overlapping workloads: many types of tasks do not fully use the capabilities of the GPU, and part of its resources sits idle. In such cases you can simply run two different tasks on one GPU, dividing its execution units between them for more efficient use - for example, PhysX effects running alongside 3D frame rendering.

To improve this scenario, the Pascal architecture introduces dynamic load balancing. In the previous Maxwell architecture, overlapping workloads were implemented as a static split of GPU resources between graphics and compute. This approach is effective provided that the balance between the two workloads roughly matches the resource split and the tasks take equally long. If the non-graphics calculations take longer than the graphics ones, and both wait for the common work to complete, part of the GPU stands idle for the remaining time, which reduces overall performance and cancels out the benefit. Hardware dynamic load balancing, by contrast, allows freed GPU resources to be used as soon as they become available - see the illustration.
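The cost of a mismatched static split can be shown with an idealized timing model. The Python sketch below assumes perfectly scalable workloads and made-up work amounts; the function names are hypothetical, and real GPU scheduling is far more involved.

```python
def static_split_time(graphics_work, compute_work, graphics_share):
    """Static partitioning: each workload keeps its fixed share of the GPU,
    and the frame is done only when the slower of the two finishes."""
    compute_share = 1.0 - graphics_share
    return max(graphics_work / graphics_share, compute_work / compute_share)

def dynamic_balance_time(graphics_work, compute_work):
    """Idealized dynamic balancing: freed resources are reused immediately,
    so the whole GPU stays busy until all work is done."""
    return graphics_work + compute_work

# Hypothetical work amounts, in units of "time on a full GPU".
static_t = static_split_time(graphics_work=4.0, compute_work=8.0, graphics_share=0.5)
dynamic_t = dynamic_balance_time(graphics_work=4.0, compute_work=8.0)

print(f"static 50/50 split: {static_t}")
print(f"dynamic balancing:  {dynamic_t}")
```

In this example the graphics half of the GPU finishes after 8 time units and then idles until compute completes at 16, whereas with dynamic balancing the same work takes 12 units. If the static split happened to match the workload ratio exactly, both approaches would take the same time.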

There are also time-critical tasks, and this is the second asynchronous computing scenario. For example, the asynchronous time warp algorithm in VR must complete before scan-out, or the frame will be discarded. In this case the GPU must support very fast task interruption and switching, removing a less critical task from execution and freeing resources for the critical one - this is called preemption.

A single rendering command from a game engine can contain hundreds of draw calls, each draw call in turn contains hundreds of triangles to process, and each triangle contains hundreds of pixels that need to be computed and drawn. In the traditional approach the GPU preempts tasks only at a high level, and the graphics pipeline has to wait for all this work to complete before switching tasks, which results in very long delays.

To fix this, the Pascal architecture is the first to introduce the ability to interrupt a task at the pixel level - Pixel Level Preemption. The execution units of a Pascal GPU constantly track the progress of rendering tasks, and when preemption is requested they can stop execution, save the context for later completion and quickly switch to another task.

Preemption and switching at the thread level for compute operations works similarly to pixel-level preemption for graphics. Compute workloads consist of several grids, each of which contains many threads. When a preemption request arrives, the threads running on the multiprocessor finish their execution. Other blocks save their state in order to continue from the same point later, and the GPU switches to another task. The entire task switch takes less than 100 microseconds after the running threads finish.

For gaming workloads, the combination of pixel-level preemption for graphics and thread-level preemption for compute tasks gives Pascal GPUs the ability to switch quickly between tasks with minimal loss of time. And for compute tasks in CUDA, preemption with minimal granularity is also possible - at the instruction level. In this mode all threads stop execution at once and the GPU switches to another task immediately. This approach requires saving more information about the state of all registers of each thread, but in some non-graphics computing cases it is fully justified.

Fast preemption and task switching for graphics and compute was added to the Pascal architecture so that graphics and non-graphics tasks could be interrupted at the level of individual instructions rather than entire threads, as was the case in Maxwell and Kepler. These technologies can improve asynchronous execution of various workloads on the GPU and improve responsiveness when several tasks run at the same time. At its event, NVIDIA showed a demonstration of asynchronous computing using the calculation of physics effects as an example. Without asynchronous computing, performance was at 77-79 FPS; with these capabilities enabled, the frame rate rose to 93-94 FPS.

We have already given one example of how this functionality can be used in games: asynchronous time warp in VR. The illustration shows how this technology works with traditional preemption and with fast preemption. In the first case, the asynchronous time warp is scheduled as late as possible, but before the image on the display is updated. However, the work has to be submitted to the GPU several milliseconds early, since without fast preemption there is no way to run it exactly at the right moment, and the GPU sits idle for some time.

With precise preemption at the pixel and thread level (on the right in the illustration), the moment of interruption can be determined much more accurately, and the asynchronous time warp can be launched considerably later with confidence that it will finish before the display is updated. The GPU, which was idle for some time in the first case, can be loaded with additional graphics work instead.

Simultaneous Multi-Projection technology

The new GP104 GPU adds support for a new technology, Simultaneous Multi-Projection (SMP), which allows the GPU to render for modern display systems more efficiently. SMP lets the GPU output data into several projections simultaneously, which required adding a new hardware block to the PolyMorph Engine at the end of the geometry pipeline, just before the rasterizer. This block is responsible for handling several projections of a single geometry stream.

The multi-projection engine processes geometry simultaneously for 16 preconfigured projections that share a projection center (camera); these projections can be rotated or tilted independently. Since each geometric primitive may appear in several projections at once, the SMP engine lets the application instruct the GPU to replicate geometry up to 32 times (16 projections for each of two projection centers) without additional processing.

The whole process is hardware-accelerated, and since multi-projection works after the geometry engine, there is no need to repeat all the geometry processing stages several times. The saved resources matter when rendering speed is limited by geometry processing performance, as with tessellation, where the same geometric work would otherwise be performed several times, once for each projection. Accordingly, in the peak case multi-projection can reduce the amount of geometry processing by up to 32 times.

But why is all this necessary? There are several good examples where multi-projection can be useful. One is a multi-monitor system of three displays mounted at an angle to each other, close to the user (a Surround configuration). In the typical situation the scene is drawn in a single projection, which leads to geometric distortion and an incorrect perspective. The correct way is to use three different projections, one for each monitor, according to the angle at which it is placed.

With a Pascal-based video card this can be done in a single geometry pass by specifying three different projections, one for each monitor. The user can thus change the angle between the monitors not only physically but also virtually, rotating the projections for the side monitors to get the correct perspective in the 3D scene with a noticeably wider field of view (FOV). There is a limitation, though: the application must be able to render the scene with a wide FOV and use special SMP API calls to set it up. That is, this cannot be done in every game; special support is needed.

In any case, the days of a single projection onto a single flat monitor are over; there are now many multi-monitor configurations and curved displays that can also make use of this technology. Not to mention virtual reality systems, which place special lenses between the screens and the user's eyes and therefore require new techniques for projecting a 3D scene onto a 2D image. Many of these technologies and techniques are still in the early stages of development, but the main point is that older GPUs cannot efficiently use more than one flat projection. For this they need several rendering passes, repeated processing of the same geometry, and so on.

Maxwell chips had limited Multi-Resolution support that helped increase efficiency, but SMP in Pascal can do much more. Maxwell could rotate a projection by 90 degrees for cube maps or use different resolutions for a projection, but this was useful only in a limited range of applications, such as VXGI.

Among other SMP applications, we note multi-resolution rendering and single-pass stereo rendering. Multi-resolution rendering (Multi-Res Shading) can be used in games to optimize performance: a higher resolution is used in the center of the frame, and it is reduced toward the periphery to achieve a higher rendering speed.

Single-pass stereo rendering is used in VR; it has already been added to the VRWorks package and uses multi-projection to reduce the amount of geometry work required for VR rendering. With this feature, the GeForce GTX 1080 GPU processes the scene geometry only once, generating two projections at once, one for each eye, which halves the geometric load on the GPU and also reduces driver and OS overhead.

An even more advanced method of improving VR rendering efficiency is Lens Matched Shading, in which the geometric distortion required for VR rendering is simulated using several projections. This method uses multi-projection to render the 3D scene onto a surface that approximates the lens-corrected image sent to the VR headset, which avoids drawing many unnecessary pixels on the periphery that would be discarded anyway. The essence of the method is easiest to understand from the illustration: four slightly rotated projections are used for each eye instead of one (Pascal can also use 16 projections per eye for a more accurate imitation of the curved lens):

This approach can save a good deal of performance. A typical Oculus Rift image is 1.1 megapixels per eye. But because of the difference in projections, a 2.1-megapixel source image is used to render it - 86% more than needed! The multi-projection built into the Pascal architecture makes it possible to reduce the resolution of the rendered image to 1.4 megapixels, giving a 1.5x saving in pixel processing, and it also saves memory bandwidth.
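The 1.5x saving follows from the two rendered resolutions. The sketch below works with the rounded megapixel figures from the text, so it reproduces the 1.5x ratio but is not precise enough to recompute the other percentages; the variable names are illustrative.

```python
DISPLAYED_MPIX = 1.1   # per-eye image shown on the headset (from the text)
RENDERED_MPIX = 2.1    # per-eye image rendered without multi-projection
SMP_MPIX = 1.4         # per-eye image rendered with multi-projection

# How many times fewer pixels have to be shaded with multi-projection.
pixel_savings = RENDERED_MPIX / SMP_MPIX

# Pixels per eye that no longer need to be shaded at all.
avoided_mpix = RENDERED_MPIX - SMP_MPIX

print(f"pixel processing savings: {pixel_savings:.1f}x")
print(f"avoided per eye: {avoided_mpix:.1f} megapixels")
```

Even with multi-projection the rendered image (1.4 megapixels) remains somewhat larger than the displayed one (1.1 megapixels), so the method reduces the wasted pixels rather than eliminating them.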

Together with the twofold saving in geometry processing from single-pass stereo rendering, the GeForce GTX 1080 GPU can provide a significant increase in VR rendering performance, which is very demanding in terms of geometry processing speed and even more so in terms of pixel processing.

Improvements in video output and processing blocks

In addition to performance and new 3D rendering functionality, image output capabilities and video decoding and encoding also need to be kept at a good level. The first Pascal GPU does not disappoint here: it supports all the modern standards in this area, including hardware decoding of the HEVC format, which is required for watching 4K video on a PC. Future owners of GeForce GTX 1080 video cards will also soon be able to enjoy streaming 4K video from Netflix and other providers on their systems.

As for display output, the GeForce GTX 1080 supports HDMI 2.0b with HDCP 2.2, as well as DisplayPort. So far only DP 1.2 is certified, but the GPU is ready for certification for the newer standards: it is DP 1.3 Ready and DP 1.4 Ready. The latter allows output to 4K screens at a refresh rate of 120 Hz, and to 5K and 8K displays at 60 Hz when using a pair of DisplayPort 1.3 cables. While the maximum supported resolution for the GTX 980 was 5120×3200 at 60 Hz, for the new GTX 1080 it has increased to 7680×4320 at the same 60 Hz. The reference GeForce GTX 1080 has three DisplayPort outputs, one HDMI 2.0b and one digital Dual-Link DVI.

The new NVIDIA video card also received an improved video decoding and encoding unit. The GP104 chip meets the high requirements of PlayReady 3.0 (SL3000) for streaming video playback, which ensures that playing premium content from well-known providers such as Netflix will be as high-quality and energy-efficient as possible. Details on support for various video formats for encoding and decoding are given in the table; the new product clearly differs from previous solutions for the better:

An even more interesting new feature is support for so-called High Dynamic Range (HDR) displays, which are about to become widespread on the market. HDR TVs are already on sale in 2016 (four million HDR TVs are expected to be sold in just one year), and monitors will follow. HDR is the greatest breakthrough in display technology in many years: the format brings twice the range of color shades (75% of the visible spectrum, as opposed to 33% for sRGB), brighter displays (1000 nits) with higher contrast (10,000:1) and saturated colors.

The ability to reproduce content with a greater difference in brightness and richer, more saturated colors will bring the on-screen image closer to reality: black will become deeper, and bright light will dazzle, as in the real world. Accordingly, users will see more detail in the bright and dark areas of the image than on standard monitors and TVs.

The GeForce GTX 1080 has everything needed to support HDR displays: 12-bit color output, support for the BT.2020 and SMPTE 2084 standards, and 10/12-bit HDR output at 4K resolution over HDMI 2.0b, which Maxwell also had. In addition, Pascal adds decoding of the HEVC format at 4K resolution, 60 Hz and 10- or 12-bit color, which is used for HDR video, as well as encoding of the same format with the same parameters, but only in 10-bit, for HDR video recording or streaming. The new product is also ready for DisplayPort 1.4 standardization for transmitting HDR data over that connector.

Incidentally, HDR video encoding may be needed in the future to stream such data from a home PC to the Shield game console, which can play 10-bit HEVC. That is, the user will be able to stream a game from a PC in HDR format. But wait, where do you get games with such support? NVIDIA works constantly with game developers to implement it, providing everything they need (driver support, code samples and so on) for correct rendering of an HDR image compatible with existing displays.

At the time of the GeForce GTX 1080's release, HDR output is supported by games such as Obduction, The Witness, Lawbreakers, Rise of the Tomb Raider, Paragon, The Talos Principle and Shadow Warrior 2. The list is expected to grow in the near future.

Changes in SLI multi-GPU rendering

There were also some changes to the company's proprietary SLI multi-GPU rendering technology, although no one expected them. PC gaming enthusiasts use SLI either to push performance to extreme levels by installing the most powerful single-GPU video cards in tandem, or to get a very high frame rate from a pair of mid-range cards, which sometimes cost less than one top-end card (a debatable decision, but people do it). With 4K monitors, players have almost no option other than installing a pair of video cards, since even top models often cannot provide comfortable gameplay at maximum settings in such conditions.

One of the important components of NVIDIA SLI is the bridges that connect video cards into a single video subsystem and provide a digital channel for transferring data between them. GeForce video cards traditionally had two SLI connectors, which were used to connect three or four video cards in 3-Way and 4-Way SLI configurations. Each video card had to be connected to every other one, since all GPUs sent the frames they rendered to the main GPU, which is why two interfaces were needed on each board.

Starting with the GeForce GTX 1080, on all NVIDIA video cards based on the Pascal architecture the two SLI interfaces are linked together to increase data transfer performance between video cards. This new dual-link SLI mode improves performance and smoothness when outputting to very high resolution displays or multi-monitor setups.

This mode required new bridges, called SLI HB. They connect a pair of GeForce GTX 1080 video cards over both SLI links at once, although the new video cards remain compatible with old bridges. For resolutions of 1920×1080 and 2560×1440 pixels at a 60 Hz refresh rate, standard bridges are sufficient, but in more demanding modes (4K, 5K and multi-monitor systems) only the new bridges give the best frame pacing; the old ones will work, but somewhat worse.
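To get a feel for why the more demanding modes put extra pressure on the bridge, here is a rough back-of-the-envelope estimate of the volume of finished-frame data per second at each resolution. It is only a sketch: the 4 bytes per pixel, the 60 Hz rate for every mode, and the idea that every frame crosses the bridge in full are assumptions made for illustration, not figures from NVIDIA.

```python
# Rough upper-bound estimate of finished-frame traffic per second.
# Assumptions (not from the article): 4 bytes per pixel, 60 Hz for all modes,
# and every displayed frame being transferred whole.
BYTES_PER_PIXEL = 4
REFRESH_HZ = 60

MODES = {
    "1920x1080": (1920, 1080),
    "2560x1440": (2560, 1440),
    "4K (3840x2160)": (3840, 2160),
    "5K (5120x2880)": (5120, 2880),
}


def frame_stream_rate_gb_s(width, height, hz=REFRESH_HZ, bpp=BYTES_PER_PIXEL):
    """Return the data rate in GB/s (10^9 bytes) for a stream of full frames."""
    return width * height * bpp * hz / 1e9


for name, (w, h) in MODES.items():
    print(f"{name}: {frame_stream_rate_gb_s(w, h):.2f} GB/s")
```

Under these assumptions 4K needs four times the data rate of 1920×1080, which is consistent with the article's point that standard bridges are adequate only for the lower resolutions.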

In addition, with SLI HB bridges the GeForce GTX 1080's data transfer interface runs at 650 MHz, compared with 400 MHz for conventional SLI bridges on older GPUs. Some rigid old bridges can also run at the higher frequency with Pascal GPUs. The higher data transfer rate between GPUs over the dual SLI interface at the increased clock provides smoother frame delivery to the screen than previous solutions:

It should also be noted that multi-GPU rendering support in DirectX 12 differs somewhat from what was familiar before. In the latest version of its graphics API, Microsoft made many changes related to how such video systems work. In DX12, software developers have two options for using multiple GPUs: Multi Display Adapter (MDA) and Linked Display Adapter (LDA) modes.

Moreover, LDA mode has two forms: Implicit LDA (which NVIDIA uses for SLI) and Explicit LDA (where the game developer takes on the task of managing multi-GPU rendering). MDA and Explicit LDA modes were introduced in DirectX 12 to give game developers more freedom and more options when using multi-GPU video systems. The difference between the modes is clearly visible in the following table:

In LDA mode, each GPU's memory can be linked with another's and presented as one large shared pool, naturally with all the performance penalties that apply when data is fetched from another GPU's memory. In MDA mode, each GPU's memory works separately, and GPUs cannot directly access data in the memory of another graphics processor. LDA mode is designed for multi-GPU systems of similar performance, while MDA mode has fewer restrictions: discrete and integrated GPUs, or discrete solutions with chips from different manufacturers, can work together. But this mode also demands more attention and work from developers, who must program the cooperation so that the GPUs can exchange information with each other.
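The memory difference between the two modes can be pictured with a small toy model. This is not Direct3D 12 code, and the class and method names (`Gpu`, `LinkedAdapter`, `MultiAdapter`, `read`, `copy`) are invented for illustration; it only mirrors the behavior described above, where linked memory is reachable from a peer (at a cost) and unlinked memory requires an explicit copy.

```python
class Gpu:
    """Hypothetical GPU with its own local memory (resource name -> data)."""

    def __init__(self, name):
        self.name = name
        self.memory = {}


class LinkedAdapter:
    """LDA-style toy: peers' memory is addressable as one large pool."""

    def __init__(self, gpus):
        self.gpus = gpus

    def read(self, reader, resource):
        # Returns (data, is_remote). Remote reads work but are slower in practice.
        for gpu in self.gpus:
            if resource in gpu.memory:
                return gpu.memory[resource], gpu is not reader
        raise KeyError(resource)


class MultiAdapter:
    """MDA-style toy: each GPU sees only its own memory."""

    def __init__(self, gpus):
        self.gpus = gpus

    def read(self, reader, resource):
        # Raises KeyError if the resource is not in the reader's own memory.
        return reader.memory[resource], False

    def copy(self, src, dst, resource):
        # The developer has to move data between GPUs explicitly.
        dst.memory[resource] = src.memory[resource]


gpu0, gpu1 = Gpu("gpu0"), Gpu("gpu1")
gpu0.memory["shadow_map"] = b"texels"

lda = LinkedAdapter([gpu0, gpu1])
print(lda.read(gpu1, "shadow_map"))  # readable from the peer, flagged as remote

mda = MultiAdapter([gpu0, gpu1])
mda.copy(gpu0, gpu1, "shadow_map")   # without this copy, gpu1 cannot read it
print(mda.read(gpu1, "shadow_map"))
```

The extra `copy` step in the MDA-style class stands in for the additional programming effort that the article attributes to this mode.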

By default, an SLI system based on GeForce GTX 1080 boards supports only two GPUs, and three- and four-GPU configurations are officially no longer recommended, since in modern games it is becoming harder and harder to get a performance gain from adding a third and fourth graphics processor. For example, many games are limited by the system's central processor when running on multi-GPU video systems, and new games increasingly use temporal techniques that rely on data from previous frames, with which efficient operation of several GPUs is simply impossible.

However, other (non-SLI) multi-GPU setups remain possible, such as the MDA or Explicit LDA modes in DirectX 12, or a two-GPU SLI system with a dedicated third GPU for PhysX physics effects. But what about benchmark records? Is NVIDIA really giving up on them altogether? No, of course not, but since only a handful of users worldwide want such systems, NVIDIA came up with a special Enthusiast Key for these ultra-enthusiasts, which can be downloaded from the NVIDIA website to unlock this capability. To do this, you first obtain a unique GPU identifier by running a special application, then request the Enthusiast Key on the website, download it, and install the key in the system, which unlocks 3-Way and 4-Way SLI configurations.

Fast Sync Synchronization Technology

There have also been some changes in display synchronization technologies. Looking ahead, nothing new has appeared in G-Sync, and Adaptive Sync is still not supported. But NVIDIA decided to improve output smoothness and synchronization for games that run at very high performance, when the frame rate significantly exceeds the monitor's refresh rate. This is especially important for games that require minimal latency and quick response, and in which multiplayer battles and competitions are held.

Fast Sync is a new alternative to vertical synchronization that has no visual artifacts in the form of image tearing, yet is not tied to a fixed refresh rate, which is what increases latency. What is the problem with vertical synchronization in games like Counter-Strike: Global Offensive? On powerful modern GPUs this game runs at several hundred frames per second, and the player has a choice: enable vertical synchronization or not.

In multiplayer games, users most often chase minimal latency and turn VSync off, getting clearly visible tearing in the image, which is extremely unpleasant even at a high frame rate. If vertical synchronization is enabled instead, the player gets a significant increase in the delay between their actions and the image on the screen, because the graphics pipeline slows down to the monitor's refresh rate.

This is how the traditional pipeline works. But with Fast Sync, NVIDIA decided to decouple rendering from display output. This lets the part of the GPU that renders frames keep working at full speed, storing those frames in a special temporary buffer, the Last Rendered Buffer.

This method changes how frames are output to the screen and takes the best of the VSync On and VSync Off modes, giving low latency without image artifacts. With Fast Sync there is no flow control: the game engine runs as if synchronization were off and is never told to wait before drawing the next frame, so latency is almost as low as in VSync Off mode. But since Fast Sync itself selects the buffer to output and displays the whole frame, there is no tearing.

Fast Sync uses three different buffers, the first two of which work in the same way as double buffering in the classic pipeline. The primary buffer (Front Buffer, FB) is the buffer whose contents are shown on the display: a fully rendered frame. The secondary buffer (Back Buffer, BB) is the buffer that receives data during rendering.

With vertical synchronization at high frame rates, the game waits for the display's refresh interval before swapping the primary buffer with the secondary one, so that a whole image is shown on the screen. This slows the process down, and adding more buffers, as in traditional triple buffering, only adds latency.

With Fast Sync, a third buffer is added, the Last Rendered Buffer (LRB), which stores the frames that have just been rendered in the secondary buffer. The name speaks for itself: it holds a copy of the last fully rendered frame. When the time comes to update the primary buffer, the LRB is copied to the primary buffer in its entirety, not in parts, as happens from the secondary buffer when vertical synchronization is off. Since copying data between buffers is inefficient, the buffers simply swap places (or are renamed, whichever is easier to picture), and new buffer-swapping logic introduced in GP104 manages this process.
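The buffer logic described here can be illustrated with a small simulation. It is a toy model under simplifying assumptions (a constant render time, buffers represented as plain dictionaries, and a hypothetical function name `simulate_fast_sync`), not NVIDIA's actual GP104 flip logic. The renderer never waits: every finished frame is moved into the LRB by swapping roles, and at each refresh the display takes the newest complete frame.

```python
def simulate_fast_sync(render_us, refresh_us, duration_us):
    """Return the frame number shown at each display refresh.

    render_us   time to render one frame, in microseconds (assumed constant)
    refresh_us  display refresh interval, in microseconds
    -1 in the result means no frame had been completed yet.
    """
    # Three buffers; only their roles change, nothing is copied.
    front, back, lrb = {"frame": -1}, {"frame": -1}, {"frame": -1}

    # Build a timeline; at equal timestamps a finished render is handled first.
    events = []
    t = render_us
    while t <= duration_us:
        events.append((t, 0, "render_done"))
        t += render_us
    t = refresh_us
    while t <= duration_us:
        events.append((t, 1, "refresh"))
        t += refresh_us

    displayed = []
    next_frame = 0
    for _, _, kind in sorted(events):
        if kind == "render_done":
            back["frame"] = next_frame
            next_frame += 1
            # The finished back buffer becomes the LRB; the old LRB is reused
            # as the new back buffer, so the renderer never stalls.
            back, lrb = lrb, back
        else:
            # At refresh, show the LRB only if it holds a newer frame.
            if lrb["frame"] > front["frame"]:
                front, lrb = lrb, front
            displayed.append(front["frame"])
    return displayed


# 250 fps rendering (4 ms per frame) on a display refreshed every 16 ms.
print(simulate_fast_sync(4000, 16000, 48000))  # [3, 7, 11]
```

In this run only every fourth frame reaches the screen, and the rest are discarded. Each displayed frame is always whole and the most recent one available, which is the behavior the article attributes to Fast Sync.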

In practice, enabling Fast Sync gives slightly higher latency than with vertical synchronization completely disabled, on average 8 ms more, but it displays whole frames, without the unpleasant tearing artifacts. The new method can be enabled in the NVIDIA Control Panel, in the vertical sync setting. However, the default remains application-controlled, and Fast Sync is simply not needed in every 3D application, so it is best to select it specifically for high-FPS games.

NVIDIA VRWorks Virtual Reality Technologies

We have touched on the hot topic of virtual reality more than once in this article, but mostly in terms of raising the frame rate and keeping latency low, both very important for VR. All this matters and progress is real, but so far VR games look far less impressive than the best "ordinary" modern 3D games. This is not only because leading game developers are not yet particularly involved in VR applications, but also because of VR's greater frame rate demands, which rule out many of the usual techniques in such games.

To narrow the quality gap between VR games and ordinary ones, NVIDIA decided to release a whole package of VRWorks technologies, which includes a large number of APIs, libraries, engines and technologies that can significantly improve both the quality and the performance of VR applications. How does this relate to the announcement of the first gaming solution based on Pascal? Very simply: some technologies were built into it that help increase performance and improve quality, and we have already written about them.

And although this is not only about graphics, let us start with that. The VRWorks Graphics set includes the previously mentioned technologies such as Lens Matched Shading, which uses the multi-projection capability that first appeared in the GeForce GTX 1080. It gives a performance gain of 1.5 to 2 times over solutions without such support. We also mentioned other technologies, such as MultiRes Shading, which renders at different resolutions in the center of the frame and at its periphery.

But much more unexpected was the announcement of VRWorks Audio, designed for high-quality calculation of sound data in 3D scenes, which is especially important in virtual reality systems. In ordinary engines, the positioning of sound sources in the virtual environment is calculated fairly accurately: if an enemy shoots from the right, the sound is louder from that side of the audio system, and this calculation is not too demanding on computing power.

But in reality, sounds travel not only toward the player but in all directions, and are reflected off various materials, much as rays of light are. And in reality we hear these reflections, although not as distinctly as direct sound waves. These indirect reflections are usually simulated with special reverb effects, but that is a very primitive approach to the task.

In the VRWorks Audio package, sound waves are calculated in a way similar to ray tracing in rendering, where the path of a light ray is followed through several reflections off objects in the virtual scene. VRWorks Audio likewise simulates the propagation of sound waves in the environment, tracking direct and reflected waves depending on their angle of incidence and the properties of the reflecting materials. For this, VRWorks Audio uses NVIDIA OptiX, a high-performance ray tracing engine known from graphics tasks. OptiX can be used for a variety of tasks, such as calculating indirect lighting and baking lightmaps, and now also for tracing sound waves in VRWorks Audio.
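The idea of following a sound path through reflections can be sketched in a few lines. This is a simplified illustration, not the VRWorks Audio or OptiX API: the function name `trace_path`, the per-surface absorption coefficients, and the speed of sound of 343 m/s are assumptions, and distance attenuation is deliberately left out.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at about 20 °C


def trace_path(points, absorptions):
    """Return (delay_seconds, remaining_energy_fraction) for one sound path.

    points       source, then each bounce point, then the listener (x, y, z)
    absorptions  one coefficient in [0, 1] per bounce: the share of energy
                 the reflecting material absorbs (hypothetical values)
    """
    if len(absorptions) != len(points) - 2:
        raise ValueError("need exactly one absorption value per bounce point")

    # Total distance travelled along the polyline source -> bounces -> listener.
    length = sum(math.dist(a, b) for a, b in zip(points, points[1:]))

    # Each reflection keeps only the non-absorbed part of the energy.
    energy = 1.0
    for absorbed in absorptions:
        energy *= 1.0 - absorbed

    return length / SPEED_OF_SOUND_M_S, energy


source, listener = (0.0, 0.0, 0.0), (3.0, 4.0, 0.0)
wall_hit = (0.0, 4.0, 0.0)

direct = trace_path([source, listener], [])
reflected = trace_path([source, wall_hit, listener], [0.5])
print(f"direct:    {direct[0] * 1000:.1f} ms, energy {direct[1]:.2f}")
print(f"reflected: {reflected[0] * 1000:.1f} ms, energy {reflected[1]:.2f}")
```

The reflected path arrives later and weaker than the direct one. A real tracer would generate thousands of such paths automatically and also account for the angle of incidence, which this sketch ignores.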

NVIDIA has built accurate sound wave calculation into its VR Funhouse demo, which uses several thousand rays and calculates up to 12 reflections off objects. To appreciate the benefits of the technology through a clear example, we suggest watching a video about how it works, in Russian:

It is important that NVIDIA's approach differs from traditional sound engines, including the method from its main competitor that is hardware-accelerated by a dedicated block in the GPU. All of those methods provide only accurate positioning of sound sources and do not calculate reflections of sound waves off objects in the 3D scene, although they can simulate this with a reverb effect. The use of ray tracing can be far more realistic, since only this approach gives an accurate simulation of various sounds that takes into account the size, shape and materials of objects in the scene. It is hard to say whether a typical player needs such accuracy, but one thing is certain: in VR it can give users the extra realism that ordinary games still lack.

All that remains is to cover VR SLI technology, which works in both OpenGL and DirectX. Its principle is extremely simple: in a VR application, a dual-GPU video system assigns a separate GPU to each eye, in contrast to the AFR rendering familiar from SLI configurations. This greatly improves overall performance, which is so important for virtual reality systems. In theory more GPUs can be used, but their number must be even.

This approach was needed because AFR suits VR poorly: with it, the first GPU draws the even frames for both eyes and the second GPU the odd ones, which does nothing to reduce latency, critical for virtual reality systems, even though the frame rate is high enough. With VR SLI, the work on each frame is split between two GPUs: one works on the part of the frame for the left eye, the other on the part for the right eye, and these two halves are then combined into a whole frame.
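The difference between the two schemes can be shown with simple arithmetic. The sketch below is an idealized model: it assumes that rendering one eye's view takes a fixed time on one GPU, that both eyes cost the same, and that combining the halves and transferring data are free. The function names `afr` and `vr_sli` are made up for the example.

```python
def afr(eye_ms, gpus=2):
    """Alternate Frame Rendering: each GPU renders whole frames (both eyes)."""
    frame_ms = 2 * eye_ms  # one GPU draws the left and the right view
    return {"latency_ms": frame_ms, "fps": gpus * 1000 / frame_ms}


def vr_sli(eye_ms, gpus=2):
    """VR SLI: the views of a single frame are split across the GPUs."""
    frame_ms = 2 * eye_ms / gpus  # both eyes are rendered in parallel
    return {"latency_ms": frame_ms, "fps": 1000 / frame_ms}


# Assumed cost: 5 ms to render one eye's view on one GPU.
print("AFR:   ", afr(5))     # latency 10 ms, 200 fps
print("VR SLI:", vr_sli(5))  # latency 5 ms, 200 fps
```

In this model both schemes reach the same frame rate, but VR SLI halves the time needed to produce each frame, which is the latency advantage described above.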