Analysis of Large Volumes of Information. The Use of Big Data in Medicine. The Big Problems of Using Big Data

It was predicted that the total global volume of data created and replicated in 2011 could amount to about 1.8 zettabytes (1.8 trillion gigabytes), roughly 9 times more than was created in 2006.

A more complex definition

Nevertheless, "big data" involves more than just analyzing huge amounts of information. The problem is not that organizations create huge amounts of data, but that most of it comes in formats that fit poorly with the traditional structured database format: web logs, video recordings, text documents, machine code or, for example, geospatial data. All of this is stored in many diverse repositories, sometimes even outside the organization. As a result, a corporation may have access to a huge amount of its own data and yet lack the tools needed to establish relationships between these data and draw meaningful conclusions from them. Add to this the fact that data is now updated more and more frequently, and you get a situation in which traditional methods of information analysis cannot keep up with huge volumes of constantly updated data, which ultimately opens the way for big data technologies.

The best definition

In essence, the concept of big data means working with information of huge volume and varied composition, updated very frequently and located in different sources, in order to increase operational efficiency, create new products and improve competitiveness. The consulting company Forrester offers a brief formulation: "Big data combines techniques and technologies that extract meaning from data at the extreme limits of practicality."

How big is the difference between business analytics and big data?

Craig Baty, executive director of marketing and director of technology at Fujitsu Australia, noted that business analysis is a descriptive process of analyzing the results a business achieved over a certain period of time, whereas the processing speed of big data makes analysis predictive, able to offer the business recommendations for the future. Big data technologies also make it possible to analyze more types of data than business analytics tools, so the focus need not be limited to structured repositories.

Matt Slocum of O'Reilly Radar believes that although big data and business analytics share the same goal (finding answers to questions), they differ from each other in three respects.

- Big data is designed to handle larger volumes of information than business analytics, which of course matches the traditional definition of big data.

- Big data is designed to process information that arrives and changes more quickly, which means deep exploration and interactivity. In some cases, results are produced faster than a web page loads.

- Big data is designed to process unstructured data, whose uses we are only beginning to explore now that we have learned to collect and store it, and we need algorithms and interactive exploration to make it easier to find the trends contained in these arrays.

According to the Oracle white paper "Oracle Information Architecture: An Architect's Guide to Big Data", when working with big data we approach information differently than when conducting business analysis.

Working with big data is not like the usual business intelligence process, where simply adding up known values produces the result: for example, the sum of paid invoices becomes the sales volume for the year. With big data, the result emerges from refining the data through successive modeling: first a hypothesis is put forward, a statistical, visual or semantic model is built, the hypothesis is tested against it, and then the next one is put forward. This process requires the researcher either to interpret visual values, to compose interactive knowledge-based queries, or to develop adaptive machine learning algorithms capable of producing the desired result. And the lifetime of such an algorithm can be rather short.

Methods for analyzing big data

There are many diverse methods for analyzing data arrays, based on tools borrowed from statistics and computer science (for example, machine learning). The list below does not claim to be complete, but it reflects the approaches most in demand across various industries. It should also be understood that researchers continue to create new techniques and improve existing ones. In addition, some of these methods are not applied exclusively to big data and can be used successfully on smaller arrays (for example, A/B testing and regression analysis). Of course, the larger and more diverse the analyzed array, the more accurate and relevant the results obtained at the output.

A/B testing. A technique in which a control sample is compared in turn with others. This makes it possible to identify the optimal combination of indicators to achieve, for example, the best consumer response to a marketing offer. Big data makes it possible to run a huge number of iterations and thus obtain a statistically reliable result.
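The text does not say how statistical reliability is judged. One common choice, assumed here rather than taken from the source, is a two-proportion z-test comparing the conversion rate of a control (A) with that of a variant (B). The function name and the example counts below are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of control A and variant B.

    Returns the z statistic and a two-sided p-value (normal approximation).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis that A and B perform equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical campaign: 200 of 10,000 responded to offer A, 260 of 10,000 to offer B.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up counts the p-value falls well below 0.01; the same difference measured on a few hundred users would not, which is why large samples make such comparisons reliable.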

Association rule learning. A set of techniques for identifying relationships, i.e., association rules, between variables in large data arrays. Used in data mining.
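A minimal sketch of the two measures behind association rules, support and confidence, assuming rules between single items only. It checks every item pair by brute force; production systems use algorithms such as Apriori or FP-Growth to prune the search. The shopping baskets are hypothetical.

```python
from itertools import combinations


def association_rules(transactions, min_support=0.5, min_confidence=0.7):
    """Find rules lhs -> rhs between single items by brute force."""
    n = len(transactions)
    sets = [set(t) for t in transactions]
    items = sorted(set().union(*sets))

    def support(itemset):
        # Fraction of transactions that contain every item in itemset.
        return sum(1 for t in sets if itemset <= t) / n

    rules = []
    for a, b in combinations(items, 2):
        pair_support = support({a, b})
        if pair_support < min_support:
            continue  # the pair is too rare to be interesting
        for lhs, rhs in ((a, b), (b, a)):
            # Confidence: how often rhs appears when lhs does.
            confidence = pair_support / support({lhs})
            if confidence >= min_confidence:
                rules.append((lhs, rhs, pair_support, confidence))
    return rules


baskets = [  # hypothetical purchase data
    ["bread", "milk"],
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["milk", "eggs"],
]
print(association_rules(baskets))
```

Here only "butter -> bread" passes both thresholds: every basket with butter also has bread, whereas bread appears without butter too often for the reverse rule.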

Classification. A set of techniques that make it possible to predict consumer behavior in a specific market segment (purchase decisions, churn, consumption volume, etc.). Used in data mining.
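As an illustration, a k-nearest-neighbors classifier, one of many possible classification techniques and chosen here only for its brevity, can label a customer as likely to churn or stay based on similar past customers. The features (monthly spend, support calls) and the data are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Label `query` by majority vote among its k nearest training examples.

    train: list of (feature_vector, label) pairs.
    """
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical history: (monthly spend, support calls) -> outcome.
history = [
    ((20, 5), "churn"), ((25, 4), "churn"), ((22, 6), "churn"),
    ((80, 0), "stay"), ((75, 1), "stay"), ((90, 1), "stay"),
]
print(knn_predict(history, (24, 5)))
print(knn_predict(history, (85, 1)))
```

In practice the features would be scaled first, since Euclidean distance lets the feature with the largest range dominate.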

Cluster analysis. A statistical method of classifying objects into groups by detecting previously unknown common features. Used in data mining.
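A minimal sketch of k-means (Lloyd's algorithm), a widely used clustering method assumed here as a representative example. For simplicity the first k points serve as initial centroids; real implementations use random or k-means++ initialization. The points are hypothetical.

```python
import math


def assign(points, centroids):
    """Assignment step: group each point with its nearest centroid."""
    clusters = [[] for _ in centroids]
    for p in points:
        nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
        clusters[nearest].append(p)
    return clusters


def kmeans(points, k, iterations=100):
    """Lloyd's k-means with the first k points as initial centroids."""
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        clusters = assign(points, centroids)
        # Update step: move each centroid to the mean of its members
        # (an empty cluster keeps its previous centroid).
        updated = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if updated == centroids:
            break  # converged: assignments no longer change the centroids
        centroids = updated
    return centroids, assign(points, centroids)


points = [(1, 1), (8, 8), (1.5, 2), (9, 8.5), (0.5, 1.5), (8.5, 9)]
centroids, clusters = kmeans(points, k=2)
print(centroids)
```

The algorithm is never told which group a point belongs to; the two groups emerge from the data, which is what distinguishes clustering from classification.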

Crowdsourcing. A methodology for collecting data from a large number of sources.

Data fusion and data integration. A set of techniques that make it possible to analyze comments by social network users and compare them with sales results in real time.

Data mining. A set of techniques that make it possible to identify the categories of consumers most receptive to a promoted product or service, identify the traits of the most successful employees, and predict consumer behavior models.

Ensemble learning. This method uses many predictive models, which together improve the quality of the predictions made.
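One simple way to combine several models, assumed here for illustration, is majority voting: each model predicts a label and the most common label wins. The three rule-based "models" and the transaction fields are hypothetical stand-ins for trained predictors.

```python
from collections import Counter


def majority_vote(models, x):
    """Combine the predictions of several models by simple majority voting."""
    predictions = [model(x) for model in models]
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical weak models that each flag a card transaction from one angle.
models = [
    lambda tx: "risky" if tx["amount"] > 1000 else "ok",
    lambda tx: "risky" if tx["country"] != tx["home_country"] else "ok",
    lambda tx: "risky" if tx["hour"] < 6 else "ok",
]

tx = {"amount": 1500, "country": "DE", "home_country": "FR", "hour": 14}
print(majority_vote(models, tx))
```

An odd number of models avoids ties between two labels; weighted voting or averaging predicted probabilities are common refinements.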

Genetic algorithms. In this technique, possible solutions are represented as "chromosomes" that can be combined and mutated. As in natural evolution, the fittest individual survives.

Machine learning. A field of computer science (historically known as "artificial intelligence") that aims to create self-learning algorithms based on the analysis of empirical data.

Natural language processing (NLP). A set of techniques borrowed from computer science and linguistics for recognizing human natural language.

Network analysis. A set of methods for analyzing links between nodes in networks. Applied to social networks, it makes it possible to analyze relationships between individual users, companies, communities, etc.
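A basic network-analysis measure is degree centrality: the share of other nodes a node is directly linked to. This sketch assumes an undirected network without self-loops; the user names and links are hypothetical.

```python
from collections import defaultdict


def degree_centrality(edges):
    """Normalized degree centrality for an undirected graph given as an edge list."""
    neighbours = defaultdict(set)
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)
    n = len(neighbours)
    # Divide by n - 1, the maximum possible number of neighbours.
    return {node: len(adj) / (n - 1) for node, adj in neighbours.items()}


friendships = [("anna", "boris"), ("anna", "clara"), ("anna", "dmitry"), ("boris", "clara")]
centrality = degree_centrality(friendships)
print(max(centrality, key=centrality.get))
```

The most central user here is the one connected to everyone else; richer measures (betweenness, PageRank) also account for indirect links.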

Optimization. A set of numerical methods for redesigning complex systems and processes to improve one or more indicators. It helps in making strategic decisions, for example, about the composition of a product line to be launched on the market, investment analysis, and so on.

Pattern recognition. A set of techniques with self-learning elements for predicting consumer behavior models.

Predictive modeling. A set of techniques for building, in advance, a mathematical model of a given probable scenario of events. For example, analyzing a CRM database for conditions that may prompt subscribers to switch providers.

Regression. A set of statistical methods for identifying patterns between changes in a dependent variable and one or more independent variables. Often used for forecasting and prediction. Used in data mining.
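The simplest case, one independent variable, can be fitted with ordinary least squares. In this sketch the slope is the covariance of x and y divided by the variance of x; the advertising and sales figures are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


ad_spend = [1, 2, 3, 4, 5]   # hypothetical, thousands of euros
sales = [3, 5, 7, 9, 11]     # hypothetical, thousands of units
slope, intercept = fit_line(ad_spend, sales)
forecast = slope * 6 + intercept  # predicted sales for a spend of 6
print(slope, intercept, forecast)
```

With several independent variables the same idea is solved in matrix form, usually with a numerical library rather than by hand.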

Sentiment analysis. Methods for assessing consumer sentiment, based on techniques for recognizing human language. They make it possible to extract from the general information flow the messages related to a subject of interest (for example, a consumer product), and then to assess the polarity of the opinion (positive or negative), the degree of emotionality, and so on.
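The two steps described above, filtering messages by subject and then scoring polarity, can be sketched with a word lexicon. The tiny lexicon and the scores are hypothetical; real systems rely on large curated lexicons or trained language models, and this version assumes the topic is a single word.

```python
import re

# Hypothetical polarity lexicon: positive words score > 0, negative words < 0.
LEXICON = {"great": 2, "good": 1, "love": 2, "bad": -1, "awful": -2, "broken": -2}


def polarity(message, topic):
    """Return None for off-topic messages, else (label, intensity)."""
    words = re.findall(r"[a-z']+", message.lower())
    if topic.lower() not in words:
        return None  # step 1: drop messages unrelated to the subject of interest
    score = sum(LEXICON.get(w, 0) for w in words)  # step 2: sum word polarities
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return label, abs(score)  # abs(score) as a crude proxy for emotional intensity


print(polarity("I love my new phone, the camera is great", "phone"))
print(polarity("The phone arrived broken", "phone"))
print(polarity("Nice weather today", "phone"))
```

Word counting misses negation ("not good") and sarcasm, which is one reason NLP techniques are needed for reliable results.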

Signal processing. A set of techniques borrowed from radio engineering that aims to recognize a signal against background noise and analyze it further.

Spatial analysis. A set of methods, partly borrowed from statistics, for analyzing spatial data: terrain topology, geographic coordinates, object geometry. In this case, the source of big data is often geographic information systems (GIS).

Statistics. The science of collecting, organizing and interpreting data, including designing questionnaires and conducting experiments. Statistical methods are often used to make judgments about relationships between particular events.

Supervised learning. A set of machine learning techniques that make it possible to identify functional relationships in the analyzed data arrays.

Simulation. Modeling the behavior of complex systems, often used for forecasting, prediction and exploring various scenarios during planning.

Time series analysis. A set of methods, borrowed from statistics and digital signal processing, for analyzing sequences of data that repeat over time. Some obvious applications are tracking the securities market or disease incidence among patients.
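One of the most basic time-series tools is the moving average, which smooths short-term fluctuations so that a trend becomes visible. This sketch computes a trailing average over a fixed window in a single pass; the closing prices are hypothetical.

```python
def moving_average(series, window):
    """Trailing moving average; returns len(series) - window + 1 values."""
    if not 1 <= window <= len(series):
        raise ValueError("window must be between 1 and len(series)")
    total = sum(series[:window])
    averages = [total / window]
    for i in range(window, len(series)):
        # Slide the window one step: add the newest value, drop the oldest.
        total += series[i] - series[i - window]
        averages.append(total / window)
    return averages


closing_prices = [10, 12, 14, 13, 15]  # hypothetical daily closes
print(moving_average(closing_prices, 3))
```

Updating a running total instead of re-summing each window keeps the cost linear in the length of the series, which matters for long data streams.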

Unsupervised learning. A set of machine learning techniques that make it possible to identify hidden functional relationships in the analyzed data arrays. Shares features with cluster analysis.

Visualization. Methods for presenting the results of big data analysis graphically, as charts or animated images, to simplify interpretation and make the results easier to understand.

Visual presentation of the results of big data analysis is fundamentally important for interpreting them. It is no secret that human perception is limited, and scientists continue to research ways to improve modern methods of presenting data as images, charts or animations.

Analytical toolkit

As of 2011, some of the approaches listed in the previous subsection, or certain combinations of them, make it possible to build analytical engines for working with big data in practice. Among free or relatively inexpensive open big data analysis systems, the following can be recommended:

- Revolution Analytics (based on the R language for mathematical statistics).

Of particular interest in this list is Apache Hadoop, open-source software that over the past five years has been proven as a data analyzer by most stock trackers. As soon as Yahoo opened the Hadoop code to the open source community, a whole new line of Hadoop-based products appeared in the IT industry. Almost all modern big data analysis tools provide integration with Hadoop. Their developers include both startups and well-known global companies.
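Hadoop's original processing model is MapReduce: mappers turn each input split into key/value pairs, the framework groups the pairs by key (shuffle), and reducers aggregate each group. The single-process sketch below only imitates those three phases with the classic word-count example; it is not Hadoop's API, and on a real cluster each phase runs in parallel across many machines.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Mapper: emit (word, 1) for every word in one input split."""
    return [(word, 1) for word in document.lower().split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reducer: sum the counts collected for each word."""
    return {word: sum(counts) for word, counts in groups.items()}


def word_count(documents):
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    return reduce_phase(shuffle_phase(mapped))


print(word_count(["big data big", "data lake"]))
```

Because mappers see only their own split and reducers see only one key's values, both can be distributed without coordination, which is what lets the model scale.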

Big data management solutions market

Big Data Platforms (BDP) as a means of combating digital hoarding

The ability to analyze big data, colloquially called Big Data, is perceived as an unequivocal good. But is it really? What can rampant data accumulation lead to? Most likely to what Russian psychologists, when speaking of people, call pathological hoarding, syllogomania or, figuratively, "Plyushkin syndrome". In English, the compulsive passion for collecting everything is called hoarding (from the English hoard, "stockpile"). In the classification of mental illnesses, hoarding is counted as a mental disorder. The digital era has added digital hoarding, from which both individuals and entire enterprises and organizations can suffer.

Global and Russian markets

Big Data Landscape: Main Vendors

All the leading IT companies have shown interest in tools for collecting, processing, managing and analyzing big data, which is quite natural. First, they face this phenomenon directly in their own business; second, big data opens excellent opportunities to develop new market niches and attract new customers.

Many startups have appeared on the market that build their business on processing huge data arrays. Some of them use ready-made cloud infrastructure provided by large players such as Amazon.

Theory and practice of big data across industries

History of development

2017

TmaxSoft forecast: the next "wave" of Big Data will require DBMS upgrades

Enterprises know that their huge amounts of data contain important information about their business and customers. A company that can apply this information successfully will gain a significant advantage over competitors and be able to offer better products and services than they do. However, many organizations still cannot use big data effectively because their legacy IT infrastructure cannot provide the storage capacity, data exchange processes, utilities and applications needed to process and analyze large arrays of unstructured data and extract valuable information from them, TmaxSoft noted.

In addition, increasing the processing capacity needed to analyze ever-growing data volumes may require significant investment in the organization's outdated IT infrastructure, as well as additional support resources that could otherwise be used to develop new applications and services.

On February 5, 2015, the White House published a report discussing how companies use "big data" to set different prices for different buyers, a practice known as "price discrimination" or "differentiated pricing" (personalized pricing). The report describes the benefits of big data for both sellers and buyers, and its authors conclude that many of the problematic issues arising from the advent of big data and differentiated pricing can be addressed within existing anti-discrimination and consumer protection laws.

The report notes that at present there is only scattered evidence of how companies use big data for individualized marketing and differentiated pricing. This evidence shows that sellers use pricing methods that fall into three categories:

- studying the demand curve;

- steering and differentiated pricing based on demographic data; and

- behavioral targeting and individualized pricing.

Studying the demand curve: to clarify demand and study consumer behavior, marketers often run experiments in which customers are randomly assigned one of two possible price levels. "Technically, these experiments are a form of differentiated pricing, since they result in different prices for customers, even if they are 'non-discriminatory' in the sense that all customers have the same probability of 'getting' the higher price."

Steering: the practice of presenting products to consumers based on their membership in a particular demographic group. For example, a computer company's website may offer the same laptop to different types of buyers at different prices, set on the basis of information they report (for example, whether the user represents a government body, a scientific or commercial institution, or is a private individual) or on their geographic location (for example, determined from the computer's IP address).

Behavioral targeting and individualized pricing: in these cases, buyers' personal data are used for targeted advertising and customized prices on certain products. For example, online advertisers use data on users' Internet activity, collected by advertising networks and through third-party cookies, to target their promotional materials. On the one hand, this approach lets consumers receive advertising for goods and services relevant to them; on the other, it may worry consumers who do not want certain types of their personal data (such as information about visits to sites related to medical and financial issues) to be collected without their consent.

Although behavioral targeting is widespread, there is relatively little evidence of individualized pricing online. The report suggests this may be because the corresponding methods are still being developed, or because companies are in no hurry to use individualized pricing (or prefer to keep quiet about it), perhaps fearing a negative reaction from consumers.

The authors of the report believe that "for the individual consumer, the use of big data undoubtedly carries both potential rewards and risks." While acknowledging that the use of big data raises problems of transparency and discrimination, the report asserts that existing anti-discrimination and consumer protection laws are sufficient to address them. However, it also stresses the need for "continued scrutiny" in cases where companies use confidential information in an opaque manner or in ways not covered by the existing regulatory framework.

This report continues the White House's efforts to study the use of "big data" and discriminatory pricing on the Internet, and the corresponding consequences for American consumers. It was reported earlier that the White House Big Data Working Group published its report on this issue in May 2014. The Federal Trade Commission (FTC) also considered these issues at its September 2014 workshop on discrimination arising from the use of big data.

2014

Gartner dispels myths about "big data"

In an analytical note from fall 2014, Gartner listed a number of myths about big data common among IT managers, together with their refutations.

- Everyone is implementing big data processing systems faster than we are

Interest in big data technologies is at a record high: 73% of organizations surveyed by Gartner analysts this year are already investing in relevant projects or plan to. But most of these initiatives are still at the earliest stages, and only 13% of respondents have already deployed such solutions. The hardest part is determining how to derive revenue from big data and deciding where to start. Many organizations are stuck at the pilot stage because they cannot tie the new technology to specific business processes.

- We have so much data that there is no need to worry about small errors in it

Some IT managers believe that small flaws in the data do not affect the overall results of analyzing huge volumes. When there is a lot of data, each individual error does have less effect on the result, analysts note, but there are also more errors. In addition, most of the analyzed data comes from outside, with unknown structure or origin, so the probability of errors grows. Thus, in the world of big data, quality is actually much more important.

- Big data technologies will eliminate the need for data integration

Big data promises the ability to process data in its original format, with the schema generated automatically as the data is read (schema-on-read). It is believed that this will make it possible to analyze information from the same sources using multiple data models. Many believe it will also let end users interpret any data set as they see fit. In reality, most users need the traditional approach with a predefined schema, where the data is formatted accordingly and there are agreements on the level of information integrity and on how it should relate to the use case.
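A minimal sketch of schema-on-read, assuming raw events stored as JSON lines: nothing is validated when the data is written, and the reader applies field names and types only at query time. The event records, the `read_with_schema` helper and the schema format are hypothetical.

```python
import json

# Raw events as they landed in storage; note the third record uses different keys.
RAW_EVENTS = [
    '{"user": "u1", "amount": "19.90", "ts": "2014-10-01"}',
    '{"user": "u2", "amount": 5}',
    '{"customer": "u3", "total": 7.5}',
]


def read_with_schema(lines, schema):
    """Apply a schema at read time.

    schema maps output field -> (source key, type converter).
    Missing keys become None instead of raising an error.
    """
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, (key, convert) in schema.items():
            value = record.get(key)
            row[field] = convert(value) if value is not None else None
        rows.append(row)
    return rows


schema = {"user": ("user", str), "amount": ("amount", float)}
for row in read_with_schema(RAW_EVENTS, schema):
    print(row)
```

The third record comes back empty: its producer used different key names, and nothing caught the mismatch at write time. This is exactly the kind of gap Gartner's point about still needing agreed structures and integration refers to.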

- There is no point in using a data warehouse for advanced analytics

Many information management leaders believe there is no point in spending time building a data warehouse, given that advanced analytics systems use new types of data. In fact, many advanced analytics systems use information from the data warehouse. In other cases, new types of data must be additionally prepared for analysis in big data processing systems: decisions must be made about the suitability of the data, the principles of aggregation and the required level of quality, and such preparation may take place outside the warehouse.

- Data lakes will replace data warehouses

In reality, vendors mislead customers by positioning data lakes as a replacement for warehouses or as critical elements of analytical infrastructure. The underlying technologies of data lakes lack the maturity and breadth of functionality inherent in warehouses. Therefore, leaders responsible for data management should wait until data lakes reach the same level of development, Gartner believes.

Accenture: 92% of companies that have implemented big data systems are satisfied with the results

Among the main benefits of big data, respondents named:

- "Search for new sources of income" (56%),

- "Improving customer experience" (51%),

- "New products and services" (50%) and

- "Attracting new customers and retaining the loyalty of existing ones" (47%).

When introducing the new technologies, many companies faced traditional problems. For 51%, the stumbling block was security; for 47%, budget; for 41%, a lack of qualified staff; and for 35%, difficulties integrating with existing systems. Almost all the companies surveyed (about 91%) plan to address the staff shortage and hire big data specialists.

Companies are optimistic about the future of big data technologies: 89% believe they will change business as much as the Internet did, and 79% of respondents said that companies that do not use big data will lose their competitive advantage.

However, respondents disagreed about what exactly should be considered big data. 65% of respondents believe it means "large data files", 60% are confident it means "advanced analytics and analysis", and 50% that it means "data visualization tools".

Madrid spends 14.7 million euros on big data management

In July 2014, it became known that Madrid would use Big Data technologies to manage its urban infrastructure. The project costs 14.7 million euros, and the solutions being implemented will be based on technologies for analyzing and managing big data. With their help, the city administration will manage its work with each service provider and pay each one according to the level of service provided.

These are the administration's contractors that maintain the streets, lighting, irrigation and green spaces, clean the city, and collect and process garbage. During the project, 300 key performance indicators for city services were developed for specially assigned inspectors, on the basis of which 1,500 different checks and measurements will be carried out daily. In addition, the city will begin using an innovative technology platform called Madrid Inteligente (MINT), "Smart Madrid".

2013

Experts: Big Data at the peak of fashion

Every vendor in the data management market, without exception, is currently developing technologies for Big Data management. This new technological trend is also actively discussed by the professional community: developers, industry analysts and potential consumers of such solutions.

As DataSift found out, as of January 2013 the wave of discussion around "big data" had exceeded all imaginable proportions. After analyzing the number of mentions of Big Data on social networks, DataSift calculated that in 2012 the term was used about 2 billion times in posts created by about 1 million different authors around the world. This is equivalent to 260 posts per hour, with mentions peaking at 3,070 per hour.

Gartner: Every other IT director is ready to spend money on Big Data

After several years of experimenting with Big Data technologies and the first deployments, adoption of such solutions will increase significantly in 2013, Gartner predicted. Researchers surveyed IT leaders around the world and found that 42% of respondents have already invested in Big Data technologies or plan to make such investments within the next year (data as of March 2013).

Companies are forced to spend money on big data processing technologies because the information landscape is changing rapidly and demands new approaches to information processing. Many companies have already realized that large data arrays are critical and that working with them yields benefits unavailable with traditional information sources and processing methods. In addition, constant media attention to the topic of "big data" fuels interest in the relevant technologies.

Frank Buytendijk, a vice president at Gartner, even urged companies to temper their enthusiasm, since some are worried that they are falling behind competitors in adopting Big Data.

"There is no need to worry: the opportunities for implementing ideas based on 'big data' technologies are virtually endless," he said.

According to Gartner, by 2015, 20% of Global 1000 companies will have a strategic focus on "information infrastructure".

In anticipation of the new capabilities that big data processing technologies will bring, many organizations are now setting up processes for collecting and storing various kinds of information.

For educational and government organizations, as well as industrial companies, the greatest potential for business transformation lies in combining accumulated data with so-called dark data, which includes e-mail messages, multimedia and other similar content. According to Gartner, the winners will be those who learn to handle data from the widest variety of information sources.

Cisco Survey: Big Data will help increase IT budgets

The study (spring 2013), called the Cisco Connected World Technology Report, was conducted in 18 countries by the independent analytical company InsightExpress and surveyed 1,800 college students and the same number of young professionals aged 18 to 30. Its purpose was to determine how ready IT departments are to implement Big Data projects and to gain insight into the related challenges, technological shortcomings and strategic value of such projects.

Most companies collect, record and analyze data. Nevertheless, the report says, many companies face a number of complex business and IT challenges in connection with Big Data. For example, 60 percent of respondents recognize that Big Data solutions can improve decision-making and increase competitiveness, but only 28 percent said they are already gaining real strategic benefits from the accumulated information.

More than half of the IT managers surveyed believe that Big Data projects will help increase IT budgets in their organizations, since demands on technology, staff and professional skills will grow. More than half of respondents expect such projects to increase IT budgets in their companies as early as 2012, and 57 percent are confident that Big Data will increase their budgets over the next three years.

81 percent of respondents said that all (or at least some) Big Data projects will require cloud computing. Thus, the spread of cloud technologies may affect both how quickly Big Data solutions are adopted and the business value of these solutions.

Companies collect and use data of many different types, both structured and unstructured. Here are the sources from which the survey participants obtain data (Cisco Connected World Technology Report):

Almost half (48 percent) of IT managers predict that the load on their networks will double over the next two years. (This view is especially common in China, where 68 percent of respondents hold it, and in Germany, where 60 percent do.) 23 percent of respondents expect network load to triple over the next two years. At the same time, only 40 percent of respondents said they are ready for an explosive increase in network traffic.

27 percent of respondents acknowledged that they need better IT policies and information security measures.

21 percent need more bandwidth.

Big Data opens up new opportunities for IT departments to add value and build close relationships with business units, helping to increase revenue and strengthen the company's financial position. Big Data projects make IT departments strategic partners of business units.

According to 73 percent of respondents, it is the IT department that will be the main driver of implementing the Big Data strategy. At the same time, respondents believe other departments will also take part in implementing this strategy, above all finance (24 percent of respondents), research (20 percent), operations (20 percent) and engineering (19 percent), as well as marketing (15 percent) and sales (14 percent).

Gartner: To manage big data, millions of new jobs are needed.

Global IT spending will reach $3.7 trillion in 2013, 3.8% more than IT spending in 2012 (the forecast for the end of that year is $3.6 trillion). The big data segment will grow at a much faster rate, the Gartner report says.

By 2015, 4.4 million IT jobs will be created to support big data, 1.9 million of them in the United States. Moreover, each such job will create three additional jobs outside the IT sector, so that over the next four years 6 million people in the United States alone will work to support the information economy.

According to Gartner experts, the main problem is that the industry lacks enough talent for this: both private and public education systems, for example in the United States, are unable to supply the industry with enough qualified staff. As a result, only one in three of the new IT jobs mentioned will be filled.

Analysts believe that the task of training qualified IT staff should be taken on directly by the companies that badly need them, since such employees will be their ticket into the new information economy of the future.

2012

The first skepticism about "big data"

Analysts at Ovum and Gartner suggest that big data, a fashionable topic in 2012, may be heading into a time of disillusionment.

The term "big data" at this time usually denotes the ever-growing amount of information arriving in real time from social media, sensor networks and other sources, as well as the growing range of tools used to process that data and identify important business trends in it.

"Because of the hype (or despite it) around the idea of big data, vendors in 2012 looked at this trend with great hope," said Tony Baer, an Ovum analyst.

Baer said that DataSift had conducted a retrospective analysis of mentions of big data in

What is Big Data (literally, "big data")? Let us first turn to the Oxford Dictionary:

Data: the quantities, characters or symbols on which operations are performed by a computer, which may be stored and transmitted in the form of electrical signals and recorded on magnetic, optical or mechanical recording media.

The term Big Data is used to describe a large data set that grows exponentially over time. Such volumes of data cannot be processed without.

The advantages that Big Data provides:

- Collection of data from different sources.

- Improving business processes through real-time analytics.

- Storing a huge amount of data.

- Insights. Big Data is better at uncovering hidden information in structured and semi-structured data.

- Big data helps reduce risk and make smart decisions through appropriate risk analytics.

Examples of Big Data

The New York Stock Exchange generates 1 terabyte of data every day on the trades of the previous session.

Social media: statistics show that 500 terabytes of new data are loaded into Facebook's databases every day, generated mainly by photo and video uploads to the social network's servers, messaging, comments under posts and so on.

A jet engine generates 10 terabytes of data every 30 minutes of flight. Since thousands of flights take place every day, the volume of data reaches petabytes.

Classification of Big Data

Forms of big data:

- Structured

- Unstructured

- Semi-structured

Structured form

Data that can be stored, accessed and processed in a fixed format is called structured. Over a long period, computer science has achieved great success in improving techniques for working with this type of data (where the format is known in advance) and has learned to derive value from it. Today, however, there are problems associated with volumes growing to sizes measured in several zettabytes.

1 zettabyte corresponds to a billion terabytes.

Looking at these numbers, it is easy to see why the term Big Data is apt, and how difficult it is to process and store such data.
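The zettabyte figure can be checked with a few lines of arithmetic. This is a minimal Python sketch that assumes decimal (SI) prefixes, where each step up is a factor of 1000; the constant names are illustrative, and the binary (IEC) units ZiB and TiB are included only for comparison.

```python
# Decimal (SI) units: each prefix is 1000x the previous one.
TB = 10**12   # terabyte
ZB = 10**21   # zettabyte

# Binary (IEC) units, shown for comparison: each prefix is 1024x the previous one.
TiB = 2**40   # tebibyte
ZiB = 2**70   # zebibyte

terabytes_per_zettabyte = ZB // TB    # 10**9, one billion
tebibytes_per_zebibyte = ZiB // TiB   # 2**30, about 1.07 billion

print(f"1 ZB  = {terabytes_per_zettabyte:,} TB")
print(f"1 ZiB = {tebibytes_per_zebibyte:,} TiB")
```

With either convention the statement holds: the binary ratio is only about 7% larger than an even billion.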

Data stored in a relational database is structured and has a fixed form, for example, a table of company employees.

Unstructured form

Data of unknown structure is classified as unstructured. Besides its large size, this form poses a number of difficulties for processing and extracting useful information. A typical example of unstructured data is a heterogeneous source containing a mix of plain text files, images and video. Today, organizations have access to large volumes of raw or unstructured data but do not know how to derive value from it.

Semi-structured form

This category contains elements of both forms described above: semi-structured data has some form, but it is not actually defined by tables in relational databases. An example of this category is personal data presented in an XML file.
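To make the contrast concrete, here is a minimal Python sketch that flattens semi-structured XML personal data into fixed-column, table-like rows, the form a relational table such as the employee table above would expect. The records, tag names and column choices are hypothetical; the parsing uses the standard-library `xml.etree.ElementTree` module.

```python
import xml.etree.ElementTree as ET

# Hypothetical semi-structured records: each <person> carries tags,
# but fields are optional and nested, unlike a fixed relational schema.
XML_DATA = """
<people>
  <person id="1">
    <name>Anna</name>
    <email>anna@example.com</email>
  </person>
  <person id="2">
    <name>Ivan</name>
    <phones><phone>555-0100</phone><phone>555-0101</phone></phones>
  </person>
</people>
"""

def to_rows(xml_text: str) -> list[dict]:
    """Flatten <person> elements into rows with the same fixed columns."""
    root = ET.fromstring(xml_text.strip())
    rows = []
    for person in root.findall("person"):
        rows.append({
            "id": int(person.get("id")),
            # findtext returns None when a tag is absent, like a NULL column
            "name": person.findtext("name"),
            "email": person.findtext("email"),
            # a nested, repeating field is collapsed into a count for the flat table
            "phone_count": len(person.findall("phones/phone")),
        })
    return rows

for row in to_rows(XML_DATA):
    print(row)
```

The tags give the data some structure, yet the two records do not share the same fields. Forcing them into one table means choosing how to represent absent values and repeated values.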

Characteristics of Big Data

Growth of Big Data over time:

Blue represents structured data (Enterprise Data), stored in relational databases. Other colors represent unstructured data from different sources (IP telephony, devices and sensors, social networks and web applications).

According to Gartner, big data is distinguished by volume, velocity, variety and variability. Let us consider these characteristics in more detail.

- Volume. The term Big Data itself is associated with large size. Data size is the most important indicator when determining the value that can potentially be extracted. Every day 6 million people use digital media, which by preliminary estimates generates 2.5 quintillion bytes of data. Volume is therefore the first characteristic to consider.

- Variety is the next aspect. It refers to the heterogeneous sources and nature of data, which can be structured or unstructured. Previously, spreadsheets and databases were the only sources of information considered in most applications. Today, data in the form of emails, photos, video, PDF files and audio is also used in analytical applications. This variety of unstructured data creates problems in storage, mining and analysis: 27% of companies are not confident that they are working with the right data.

- Velocity. How quickly data is accumulated and processed to meet requirements determines its potential. Velocity is the speed at which information flows in from sources such as business processes, application logs, social networking sites and media, sensors and mobile devices. The flow of data is massive and continuous.

- Variability describes the inconsistency of data at certain points in time, which complicates processing and management. For example, most data is unstructured by nature.

Big Data Analytics: the benefits of big data

Promotion of goods and services: access to data from search engines and sites such as Facebook and Twitter lets companies develop marketing strategies more precisely.

Better customer service: traditional customer feedback systems are being replaced by new ones that use Big Data and natural language processing to read and evaluate customer reviews.

Risk calculation associated with the release of a new product or service.

Operational efficiency: big data is structured so that the necessary information can be extracted quickly and an accurate result delivered promptly. This combination of Big Data technologies and storage helps organizations optimize their work with rarely used information.
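The customer-review evaluation mentioned under better customer service can be illustrated with the simplest NLP technique, a word-list (lexicon) sentiment score. This Python sketch is a toy that rests on stated assumptions: both word lists are hypothetical and tiny, and real feedback systems rely on trained language models rather than fixed lists.

```python
import re

# Hypothetical, tiny sentiment lexicon (an assumption for illustration only).
POSITIVE = {"good", "great", "fast", "helpful", "love"}
NEGATIVE = {"bad", "slow", "broken", "rude", "hate"}

def score_review(text: str) -> int:
    """Return (number of positive words) - (number of negative words)."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

def label(text: str) -> str:
    """Map the numeric score to a coarse sentiment label."""
    s = score_review(text)
    return "positive" if s > 0 else "negative" if s < 0 else "neutral"

reviews = [
    "Great service, fast delivery!",
    "The app is slow and the checkout is broken.",
    "Delivered on Tuesday.",
]
for r in reviews:
    print(label(r), "|", r)
```

Scoring thousands of reviews this way takes seconds. The weakness is that negation ("not good") and sarcasm go undetected, which is why production systems use learned models.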

Everyone talks about Big Data, but few know what it is and how it works. Let's start with the simplest terminology. In plain terms, Big Data is a set of tools, approaches and methods for processing both structured and unstructured data so that it can be used for specific tasks and goals.

Unstructured data is information that does not have a predetermined structure or is not organized in a certain order.

The term "big data" was introduced by Clifford Lynch, editor of the journal Nature, back in 2008 in a special issue devoted to the explosive growth of the world's information volumes. Of course, big data itself existed before that. According to experts, the Big Data category includes most data streams above 100 GB per day.


Today, this simple term essentially covers just two things: data storage and data processing.

Big Data in simple words

In today's world, Big Data is a socio-economic phenomenon tied to the emergence of new technological capabilities for analyzing huge amounts of data.


To make this easier to understand, imagine a supermarket where the goods are not arranged in the usual order. Bread sits next to fruit, tomato paste near frozen pizza, lighter fluid in front of a rack of tampons that also holds avocados, tofu or shiitake mushrooms. Big Data puts everything in its place and helps you find nut milk, learn its price and expiration date, and also who else buys this milk and how it is better than cow's milk.

Kenneth Cukier: Big data is better data

Big Data technology

Huge volumes of data are processed so that a person can obtain specific, needed results for their further effective use.


In essence, Big Data is a way of solving problems and an alternative to traditional data management systems.

Big Data analysis techniques and methods, according to McKinsey:

- Crowdsourcing;

- Data fusion and integration;

- Machine learning;

- Artificial neural networks;

- Pattern recognition;

- Predictive analytics;

- Simulation;

- Spatial analysis;

- Statistical analysis;

- Visualization of analytical data.

Horizontal scalability is the basic principle of big data processing. Data is distributed across computing nodes, and processing proceeds without performance degradation. McKinsey also included relational database management systems and Business Intelligence in the applicability context.
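Distributing data across computing nodes can be sketched with hash partitioning: each record key is hashed to pick a node, every node works on its own shard, and only small partial results are combined. This Python sketch simulates a hypothetical 4-node cluster in a single process. It illustrates the principle and is not how any particular system (Hadoop, a given NoSQL store) actually places its data.

```python
import hashlib
from collections import defaultdict

def node_for(key: str, num_nodes: int) -> int:
    """Pick a node by hashing the key (stable across runs, unlike built-in hash())."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes

def partition(records: dict[str, int], num_nodes: int) -> dict[int, dict[str, int]]:
    """Distribute key/value records across num_nodes shards."""
    shards = defaultdict(dict)
    for key, value in records.items():
        shards[node_for(key, num_nodes)][key] = value
    return dict(shards)

def total(shards: dict[int, dict[str, int]]) -> int:
    # Each node sums its own shard independently (in a real cluster, in parallel);
    # the coordinator only combines the small per-node partial sums.
    partials = [sum(shard.values()) for shard in shards.values()]
    return sum(partials)

records = {f"customer-{i}": i for i in range(1000)}
shards = partition(records, num_nodes=4)
print({node: len(shard) for node, shard in sorted(shards.items())})
print(total(shards))
```

Scaling out means adding nodes, so each holds a smaller share. With plain modulo hashing, changing `num_nodes` moves most keys to different nodes. This is why distributed stores often use consistent hashing instead.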

Technologies:

- NoSQL;

- MapReduce;

- Hadoop;

- Hardware solutions.
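The MapReduce model listed above splits work into a map step that emits key/value pairs, a shuffle that groups pairs by key, and a reduce step that combines each group. Below is a minimal single-process Python sketch of the classic word-count example. In Hadoop the same phases run across many machines; the function names here are illustrative, not Hadoop's API.

```python
from collections import defaultdict
from itertools import chain

def map_phase(document: str):
    """Map: emit (word, 1) for every word in one input split."""
    for word in document.lower().split():
        yield word, 1

def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Reduce: combine all values for one key."""
    return key, sum(values)

documents = ["big data big value", "data beats opinion"]
mapped = chain.from_iterable(map_phase(d) for d in documents)
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)
```

Because each map call sees only its own document and each reduce call sees only one key, both phases can be spread across nodes independently. This is what lets the model scale horizontally.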


The traditional defining characteristics of big data are those formulated by Meta Group back in 2001, known as the "Three Vs":

- Volume: the physical size of the data.

- Velocity: the rate of growth and the need for fast data processing to obtain results.

- Variety: the ability to process different data types simultaneously.

Big Data: Application and Features

Volumes of heterogeneous, rapidly arriving digital information cannot be processed with traditional tools. Data analysis itself reveals certain inconspicuous patterns that a person cannot see. This makes it possible to optimize every sphere of our life, from government to manufacturing and telecommunications.

For example, some companies began protecting their customers from fraud a few years ago, and caring for the client's money means caring for their own money.

Susan Etlinger: What do we do with all this big data?

Big Data-based solutions: Sberbank, Beeline and other companies

Beeline has a huge amount of subscriber data, which it uses not only to serve subscribers but also to create analytical products such as external consulting or IPTV analytics. Beeline segmented its customer base and protected customers from financial fraud and viruses using HDFS and Apache Spark, with RapidMiner and Python for data processing.


Or recall Sberbank and its older case called AS Safi. This is a system that analyzes photos to identify the bank's customers and prevent fraud. It was introduced back in 2014 and uses computer vision to compare photographs in the database, which arrive there from webcams at service counters. The basis of the system is a biometric platform. Thanks to it, fraud cases decreased tenfold.

Big Data in the world

By 2020, according to forecasts, humanity will generate 40-44 zettabytes of information, and by 2025 the volume will grow tenfold, according to The Data Age 2025 report prepared by IDC analysts. The report notes that most of the data will be generated by enterprises rather than by ordinary consumers.

The study's authors believe that data will become a vital asset and security a critical foundation of life. They are also confident that the technology will change the economic landscape and that the average user will interact with connected devices about 4,800 times a day.

Big Data market in Russia

Typically, big data comes from three sources:

- Internet (social networks, forums, blogs, media and other sites);

- Corporate archives of documents;

- Readings from sensors, instruments and other devices.

Big Data in banks

In addition to the system described above, Sberbank's strategy for 2014-2018 stresses the importance of analyzing huge data sets for quality customer service, risk management and cost optimization. The bank now uses Big Data for risk control, fraud prevention, customer segmentation and creditworthiness assessment, personnel management, forecasting queues at branches, calculating employee bonuses and other tasks.

VTB24 uses big data for customer segmentation and churn management, preparing financial statements, and analyzing feedback on social networks and forums. For this it uses Teradata, SAS Visual Analytics and SAS Marketing Optimizer.

Big data is a set of methods for working with huge volumes of structured or unstructured information. Big data specialists process and analyze it to obtain visual, comprehensible results. Look At Me talked with professionals and found out what the situation with big data processing in Russia is, and where and what those who want to work in this field should study.

Alexey Rupin on the main directions in big data, communicating with customers and the world of numbers

I studied at the Moscow Institute of Electronic Technology. The main thing I took away was a fundamental knowledge of physics and mathematics. At the same time, I worked at an R&D center, where I developed and implemented noise-resistant coding algorithms for secure data transmission. After finishing my bachelor's degree, I entered the master's program in business informatics at the Higher School of Economics. After that, I wanted to work at IBS. I was lucky: at the time, because of a large number of projects, the company was recruiting additional interns, and after several interviews I started working at IBS, one of the largest Russian companies in this field. In three years I went from intern to enterprise solutions architect. Now I develop Big Data technologies for client companies in the financial and telecommunications sectors.

There are two main specializations for people who want to work with big data: analysts, and IT consultants who create technologies for working with big data. One can also speak of the Big Data Analyst profession, that is, people who work directly with the data on the customer's IT platform. Previously, these were ordinary mathematician-analysts who knew statistics and mathematics and used statistical software to solve data analysis tasks. Today, in addition to statistics and mathematics, an understanding of technology and of the data life cycle is also necessary. In my opinion, this is what distinguishes the modern Data Analyst from the analysts of the past.

My specialization is IT consulting: I devise ways for customers to solve business tasks with IT and propose them. People come to consulting with varied experience, but the most important qualities for this profession are the ability to understand the client's needs, the desire to help people and organizations, good communication and teamwork skills (since the work is always with the client and in a team), and good analytical abilities. Internal motivation is very important: we work in a competitive environment, and the customer expects unusual solutions and genuine interest in the work.

Most of my time is spent communicating with customers, formalizing their business needs and helping develop the most suitable technology architecture. The selection criteria here have their own specifics: in addition to functionality and TCO (Total Cost of Ownership), the non-functional requirements for the system are very important, most often response time and information processing time. To convince the customer, we often use a Proof of Concept approach: we offer to "test" the technology for free on some task with a narrow data set, to make sure that the technology works. The solution should create a competitive advantage for the customer through additional benefits (for example, X-sell, i.e., cross-selling) or solve some business problem, say, reduce a high level of loan fraud.


What problems do we face? The market is not yet ready to use "big data" technology. It would be much easier if customers came with a ready-made task, but they do not yet understand that a revolutionary technology has appeared that could change the market within a couple of years. That is why we essentially work in startup mode: we do not just sell technology, but every time we convince customers that they need to invest in these solutions. This is the position of visionaries: we show customers how they can change their business by bringing in data and IT. We are creating this new market, the market of commercial IT consulting in the Big Data area.

If a person wants to do data analysis or IT consulting in the Big Data sphere, the first important thing is a mathematical or technical education with good mathematical training. It is also helpful to master specific technologies, say SAS, Hadoop, the R language or IBM solutions. In addition, one needs to take an active interest in applied tasks for Big Data, for example, how it can be used for improved credit scoring in a bank or for customer life-cycle management. This and other knowledge can be obtained from available sources, for example, Coursera and Big Data University. There is also the Customer Analytics Initiative at the Wharton School of the University of Pennsylvania, which has published a lot of interesting material.

A serious problem for those who want to work in our area is a clear lack of information about Big Data. You cannot go to a bookstore or some website and get, for example, an exhaustive collection of cases covering all applications of Big Data technologies in banks. There are no such reference books. Part of the information is in books, another part is collected at conferences, and some things you have to figure out yourself.

Another problem is that analysts feel at home in the world of numbers, but they are not always comfortable in business. Such people are often introverted; communication is hard for them, and so it is hard for them to convey research results to customers convincingly. To develop these skills, I would recommend books such as "The Pyramid Principle" and "Say It with Charts". They help develop presentation skills and the ability to express thoughts concisely and clearly.

Taking part in various case championships while studying at HSE helped me a lot. Case championships are intellectual competitions for students in which you study business problems and propose solutions. There are two kinds: championships run by consulting firms such as McKinsey, BCG and Accenture, and independent ones such as Changellenge. Through them I learned to see and solve complex problems, from identifying a problem and structuring it to defending recommendations for solving it.

Oleg Mikhalsky on the Russian market and the specifics of creating a new product in big data

Before coming to Acronis, I had already launched new products onto the market at other companies. It is always interesting and difficult at the same time, so I was immediately interested in the opportunity to work on cloud services and data storage solutions. All my previous experience in the IT industry was useful here, including my own startup project, I-Accelerator. Having a business education (MBA) in addition to my engineering background also helped.

In Russia, large companies such as banks and mobile operators need to analyze big data, so there are prospects in our country for those who want to work in this area. True, many projects now are integration projects, that is, based on foreign developments or Open Source technologies. Such projects do not create fundamentally new approaches and technologies; rather, they adapt existing developments. At Acronis we took a different path: after analyzing the existing alternatives, we decided to invest in our own development and create a reliable storage system for big data that is not inferior in cost to, for example, Amazon S3, yet works reliably and efficiently at a much smaller scale. Major Internet companies also have their own big data developments, but they focus on internal needs rather than on meeting the needs of external clients.

It is important to understand the trends and economic forces that affect big data processing. To do this, read a lot, listen to talks by authoritative IT industry specialists, and attend thematic conferences. Almost every conference now has a Big Data section, but each approaches the topic from a different angle: technology, business or marketing. You can take on project work or an internship at a company that already runs projects on this topic. If you are confident in your abilities, it is not too late to launch a startup in the Big Data sphere.


True, when you are responsible for a new product, a lot of time goes into market analytics and communicating with potential customers, partners and professional analysts who know a lot about customers and their needs. Without constant contact with the market, a new development risks going unclaimed. There is always a lot of uncertainty: you must understand who the first users (Early Adopters) will be, what value you offer them, and how to then attract a mass audience. The second most important task is to form and convey to developers a clear, holistic vision of the final product, to motivate them to work in conditions where some requirements may still change and priorities depend on feedback from the first customers. So an important task is managing the expectations of customers on one side and developers on the other, so that neither loses interest and the project is brought to completion. After the first successful project it becomes easier, and the main task becomes finding the right growth model for the new business.

I first heard the term "Big Data" from German Gref (head of Sberbank). He said they were actively working on implementing it, because it would help them cut the time spent working with each client.

The second time I ran into this concept was in a client's online store that we were working on, where the range grew from a couple of thousand to a couple of tens of thousands of product items.

The third time was when I saw that Yandex was looking for a Big Data analyst. Then I decided to dig into this topic and at the same time write an article explaining this term that excites the minds of top managers and the Internet space.

VVV or VVVVV

I usually start each of my articles by explaining the term. This article will be no exception.

However, this is not because I want to show how smart I am, but because the topic is truly complex and requires careful explanation.

For example, you can read what Big Data is on Wikipedia, understand nothing, and then come back to this article to finally grasp the definition and its applicability for business. So, let's start with the description, and then move on to business examples.

Big Data is big data. Surprising, right? Indeed, it translates from English as "big data". But that is a definition for dummies.

Important. Big Data technology is an approach or method of processing large amounts of data to obtain new information that is hard to handle by conventional means.

The data can be either processed (structured) or disparate (that is, unstructured).

The term itself appeared relatively recently. In 2008, a scientific journal predicted that this approach would be necessary for working with large amounts of information growing exponentially.

For example, every year the amount of information on the Internet that needs to be stored and processed grows by 40%. Once again: each year 40% more new information appears on the Internet.
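A 40% annual increase compounds: each year's volume is multiplied by 1.4 rather than increased by a fixed amount. The minimal Python sketch below assumes the rate stays constant at 40%, which the text does not claim for any particular period, and computes the resulting growth factor and doubling time.

```python
import math

def growth_factor(annual_rate: float, years: int) -> float:
    """How many times larger the volume is after `years` of compound growth."""
    return (1 + annual_rate) ** years

def doubling_time(annual_rate: float) -> float:
    """Years needed for the volume to double at a constant annual rate."""
    return math.log(2) / math.log(1 + annual_rate)

for years in (1, 2, 5, 10):
    print(f"after {years:>2} years: x{growth_factor(0.40, years):.2f}")
print(f"doubling time: {doubling_time(0.40):.2f} years")
```

At 40% a year the volume roughly doubles every two years, grows more than fivefold in five years and almost thirtyfold in ten. That compounding is the "geometric progression" behind the term.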

With printed documents, everything is clear, and so are the ways of processing them (convert to electronic form, file in one folder, number the pages). But what should be done with information presented in completely different "media" and in different volumes:

- internet documents;

- blogs and social networks;

- audio / video sources;

- measuring devices.

There are characteristics that allow information and data to be classified as Big Data.

That is, not all data is suitable for analytics. These characteristics contain the key concept of Big Data. All of them fit into three Vs.

- Volume. Data is measured by the physical volume of the "document" to be analyzed;

- Velocity. Data does not stand still but grows constantly, which is why fast processing is required to obtain results;

- Variety. Data may not be in a single format: it can be disparate, structured or partially structured.

However, a fourth V (veracity, the reliability or believability of data) is sometimes added to VVV, and even a fifth V (in some versions viability, in others value).

Somewhere I also saw 7V used to characterize data related to Big Data. But in my opinion this belongs to the same series as the marketing 4P (where more Ps are periodically added, although the original four are enough for understanding).


Who needs it?

This leads to a logical question: how can this information be used (if Big Data means hundreds and thousands of terabytes)? Or rather, let me put it differently.

Here is the information. So why was Big Data invented? What is the use of Big Data in marketing and business?

- Ordinary databases cannot store and process (I am not even talking about analytics yet, just storage and processing) huge amounts of information.

Big Data solves this main task: it successfully stores and manages large volumes of information;

- It structures information coming from various sources (video, images, audio and text documents) into a single, understandable and digestible form;

- It produces analytics and accurate forecasts based on structured and processed information.

It's complicated. Put simply, any marketer understands that if you study a large amount of information (about you, your company, your competitors, your industry), you can get very decent results:

- A full understanding of your company and your business in terms of numbers;

- Insight into your competitors, which in turn makes it possible to pull ahead of them;

- New information about your customers.

And precisely because Big Data technology delivers these results, everyone is rushing to adopt it.

Companies are trying to bring it into their business to increase sales and reduce costs. Specifically:

- Increasing cross-sales and upsales through better knowledge of customer preferences;

- Finding popular products and the reasons they are bought (and vice versa);

- Improving products or services;

- Improving service level;

- Increasing loyalty and customer focus;

- Fraud prevention (more relevant for the banking sector);

- Reducing excess costs.
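Cross-selling based on customer preferences is often approached by counting which products are bought together, the starting point of market-basket analysis. This Python sketch uses made-up baskets. The product names and the `top_n` parameter are hypothetical, and production systems typically add measures such as support, confidence and lift, and run on far larger transaction logs.

```python
from collections import Counter
from itertools import combinations

# Hypothetical purchase baskets; real data would come from transaction logs.
baskets = [
    {"bread", "milk", "butter"},
    {"bread", "butter"},
    {"milk", "cereal"},
    {"bread", "butter", "jam"},
]

def pair_counts(baskets):
    """Count how often each (alphabetically ordered) pair of products is bought together."""
    counts = Counter()
    for basket in baskets:
        for pair in combinations(sorted(basket), 2):
            counts[pair] += 1
    return counts

def recommend(product, baskets, top_n=2):
    """Suggest the products most often bought together with `product`."""
    scores = Counter()
    for (a, b), n in pair_counts(baskets).items():
        if a == product:
            scores[b] += n
        elif b == product:
            scores[a] += n
    return [item for item, _ in scores.most_common(top_n)]

print(recommend("bread", baskets))
```

Here butter is suggested first for bread because the two appear together in three of four baskets. Raw co-occurrence favors generally popular items, which is why lift-style normalization is usually added.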

The most common example, given in all sources, is of course Apple, which collects data about its users (phone, watch, computer).

It is thanks to its ecosystem that the corporation knows so much about its users and then uses this knowledge for profit.

You can read about these and other examples in any article other than this one.

Into the future

Let me tell you about another project, or rather about a person who is building the future with Big Data solutions.

This is Elon Musk and his company Tesla. His main dream is to make cars autonomous: you get behind the wheel, turn on the autopilot from Moscow to Vladivostok and ... fall asleep, because you do not need to drive at all; the car will do everything itself.

Sounds like fantasy? Not at all! Elon simply acted much more wisely than Google, which controls its cars with dozens of satellites, and took a different path:

- Every car sold has a computer installed that collects all the information.

All means everything: the driver, their driving style, the roads around, the movement of other cars. The volume of such data reaches 20-30 GB per hour;

- Next, this information is transmitted via satellite to a central computer, which processes the data;

- Based on the Big Data processed by this computer, a model of a driverless car is built.

By the way, while Google's project is going rather badly and its cars keep getting into accidents, Musk's is going much better thanks to working with Big Data: test models show very good results.

But ... that is all about economics. Why is it always about profit? Much of what Big Data can solve has nothing to do with earnings and money.

Google statistics, based on Big Data, show an interesting thing.

Before doctors announce the start of an epidemic in some region, the number of search queries about treating that disease rises significantly.

Thus, proper study and analysis of data can produce forecasts and predict the start of an epidemic (and, accordingly, help prevent it) much faster than official bodies reach their conclusions and act.
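The idea of spotting an outbreak from rising search queries can be illustrated with a simple anomaly rule: flag a day whose query count exceeds the mean of the preceding week by more than k standard deviations. This Python sketch is not Google's method. The daily counts, the 7-day window and k = 3 are hypothetical choices made for illustration.

```python
from statistics import mean, stdev

def flag_spikes(counts, window=7, k=3.0):
    """Return indices where a count exceeds the mean of the previous `window`
    values by more than k sample standard deviations."""
    flagged = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # guard against a perfectly flat history, where sigma would be 0
        if counts[i] > mu + k * max(sigma, 1e-9):
            flagged.append(i)
    return flagged

# Hypothetical daily search-query counts for one disease in one region.
daily_queries = [100, 98, 105, 102, 99, 101, 97, 103, 100, 180, 240, 310]
print(flag_spikes(daily_queries))
```

With this sample series, the first flagged day is index 9, the jump from about 100 to 180 queries. An early warning of this kind only says that something unusual is happening; confirming an epidemic still requires medical data.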

Application in Russia

However, Russia, as always, lags slightly behind. The concept of Big Data appeared in Russia no more than 5 years ago (I am talking about ordinary companies now).

And this despite the fact that it is one of the fastest-growing markets in the world (drugs and weapons are nervously smoking on the sidelines): the market for collecting and analyzing Big Data grows by 32% annually.

To describe the Big Data market in Russia, I recall an old joke: Big Data is like sex before 18.

Everyone talks about it, there is a lot of noise and little real action around it, and everyone is ashamed to admit they are not doing it. And it is true: there is a lot of noise around Big Data, but little real action.

Meanwhile, the well-known research company Gartner has already declared Big Data a growing trend (as, by the way, is artificial intelligence) and a fully independent set of tools for analytics and the development of advanced technologies.

The most active niches where Big Data is applied in Russia are banking and insurance (no wonder I started the article with the head of Sberbank), telecommunications, retail, real estate and ... the public sector.

As examples, I will tell you more about a couple of economic sectors that use Big Data algorithms.

Banks

Let's start with banks and the information they collect about us and our actions. As an example, I took the top 5 Russian banks that actively invest in Big Data:

- Sberbank;

- Gazprombank;

- VTB 24;

- Alfa Bank;

- Tinkoff Bank.

It is especially nice to see Alfa Bank among the Russian leaders. At the very least, it is nice to realize that the bank whose official partner you are understands the need to introduce new marketing tools into its business.

But I want to show examples of using and successfully implementing Big Data on a bank I like for the unconventional outlook and actions of its founder.

I'm talking about Tinkoff Bank. Their main task was to develop a system for analyzing big data in real time to keep up with their growing customer base.

Results: the time of internal processes decreased at least 10-fold, and for some processes more than 100-fold.

Now, a small digression. Do you know why I mentioned Oleg Tinkov's unconventional views and actions?

In my opinion, they helped him turn from a middle-of-the-road businessman, of whom there are thousands in Russia, into one of the most famous and recognizable entrepreneurs.

Real estate

In real estate, everything is much more complicated. And this is exactly the example I want to give you to understand Big Data in ordinary business. Initial data:

- A large amount of text documentation;

- Open sources (private satellites transmitting data on changes to the earth's surface);

- A huge amount of uncontrolled information on the Internet;

- Continuous changes in sources and data.

And on the basis of this, it is necessary to prepare and evaluate the value of the land plot, for example, under the Ural Village. The professional will take a week.

The Russian Society Appraisers & Roseco, which is actually an analysis of Big Data with the help of software, will leave for this not more than 30 minutes of leisurely work. Compare, week and 30 minutes. The colossal difference.

And finally

Of course, huge amounts of information cannot be stored and processed on ordinary hard drives.

And the software that structures and analyzes the data is generally intellectual property and, in each case, the developer's own proprietary product. However, there are tools on which all this wonderful stuff is built:

- Hadoop & MapReduce;

- NoSQL databases;

- Data Discovery class tools.
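To give a feel for the MapReduce idea behind Hadoop, here is a minimal single-process sketch in Python. It is not Hadoop's API: the function names and sample records are hypothetical, and the code only imitates the three phases (map, shuffle, reduce) by counting word frequencies in a few text records. On a real cluster, the map and reduce steps run in parallel on many machines.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(records: Iterable[str]) -> Iterator[tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in every record."""
    for record in records:
        for word in record.lower().split():
            yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all emitted values by their key (the word)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Reduce: combine the values for each key, here by summing them."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(records: Iterable[str]) -> dict[str, int]:
    """Run the three phases one after another in a single process."""
    return reduce_phase(shuffle_phase(map_phase(records)))


if __name__ == "__main__":
    # Hypothetical records standing in for bank log lines or documents.
    logs = ["client opened deposit", "client closed deposit", "client opened card"]
    print(word_count(logs))
    # → {'client': 3, 'opened': 2, 'deposit': 2, 'closed': 1, 'card': 1}
```

The point of splitting the work this way is that each map call and each reduce call only needs its own slice of the data, which is what lets a framework like Hadoop spread them across many servers.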

To be honest, I can't clearly explain how they differ from one another, since people study these things and learn to work with them at physics and mathematics universities.

So why mention them if I can't explain them? Remember how, in every film, robbers break into a bank and see a huge amount of hardware of all kinds connected by wires?

It's the same with Big Data. For example, here is a model that is currently one of the market leaders.

Big Data tool

In the maximum configuration, the cost reaches 27 million rubles per rack. This is, of course, the deluxe version. I mention it so that you can estimate in advance what building Big Data into your business would take.

The main points in brief

You may ask: why should you, as a small or medium-sized business, work with Big Data?

To this I will answer with one person's quote: "In the near future, the companies in demand will be those that best understand their customers' behavior and habits and cater to them as closely as possible."

But let's face the truth. To introduce Big Data in a small business, you need large budgets not only for developing and deploying the software but also for keeping specialists on staff, at the very least a Big Data analyst and a sysadmin.

And that's not even mentioning that you need to have enough data to process in the first place.

Granted, for small businesses the topic is hardly applicable. But that does not mean you should forget everything you have read above.

Just study not your own data but the results of data analytics from well-known foreign and Russian companies.

For example, using Big Data analytics, the Target retail chain found that pregnant women, in the weeks before the second trimester (weeks 1 to 12 of pregnancy), actively buy unscented products.

Based on this data, Target sends them time-limited discount coupons for unscented products.

And what if you are, say, just a very small cafe? Very simple: use a loyalty app.

After a while, thanks to the accumulated information, you can not only make customers offers relevant to their needs but also see your worst-selling and highest-margin dishes in literally a couple of mouse clicks.
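As a rough illustration of those "couple of clicks", here is a minimal Python sketch. It assumes, hypothetically, that the loyalty app can export each ordered item as a record with the dish name, the price paid and the cafe's cost, in whole rubles; the `Order` class, `dish_report` function and all figures are made up for illustration. The sketch counts how often each dish is ordered and its total margin (price minus cost), then reports the worst-selling dish and the dish that earns the most.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """One ordered item from a hypothetical loyalty-app export."""
    dish: str
    price: int  # what the customer paid, in rubles
    cost: int   # what the dish cost the cafe to make, in rubles


def dish_report(orders: list[Order]) -> tuple[str, str]:
    """Return (worst-selling dish, dish with the highest total margin)."""
    if not orders:
        raise ValueError("no orders to analyze")
    counts: Counter = Counter()   # dish -> number of times ordered
    margins: Counter = Counter()  # dish -> summed (price - cost)
    for order in orders:
        counts[order.dish] += 1
        margins[order.dish] += order.price - order.cost
    worst_selling = min(counts, key=counts.__getitem__)
    most_profitable = max(margins, key=margins.__getitem__)
    return worst_selling, most_profitable


if __name__ == "__main__":
    sample = [
        Order("cappuccino", 180, 40),
        Order("cappuccino", 180, 40),
        Order("cheesecake", 250, 120),
        Order("borscht", 300, 110),
        Order("cappuccino", 180, 40),
        Order("borscht", 300, 110),
    ]
    print(dish_report(sample))  # → ('cheesecake', 'cappuccino')
```

Here "highest margin" is taken as the total margin a dish brought in over the period, so a cheap but popular drink can beat an expensive dish that is rarely ordered; ranking by per-item margin instead would be an equally reasonable choice.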

Hence the conclusion: introducing Big Data in a small business is hardly worth it, but using the results and developments of other companies is a must.

Cellular - what it is on the iPad and what's the difference

Cellular - what it is on the iPad and what's the difference Go to digital television: What to do and how to prepare?

Go to digital television: What to do and how to prepare? Social polls work on the Internet

Social polls work on the Internet Savin recorded a video message to the Tyuments

Savin recorded a video message to the Tyuments Menu of Soviet tables What was the name of Thursday in Soviet canteens

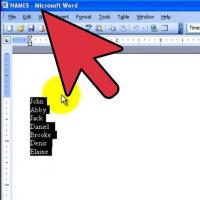

Menu of Soviet tables What was the name of Thursday in Soviet canteens How to make in the "Word" list alphabetically: useful tips

How to make in the "Word" list alphabetically: useful tips How to see classmates who retired from friends?

How to see classmates who retired from friends?