Ranking algorithms. The Yandex ranking algorithm

The author tells 30 entertaining (and instructive) stories from the field of mathematics. One of them explains how PageRank works, the link-based ranking algorithm pioneered by Google. The topic is relevant and quite easy to understand. So, over to Steven Strogatz...

Back in the days before Google existed, searching the web was a hopeless endeavor. The sites offered by older search engines often did not match the query, and those that did contain the right information were either buried deep in the list of results or missing altogether. Algorithms based on link analysis solved the problem by cracking a paradox worthy of a Zen koan: a web search should return the best pages. And what makes a page good? When other good pages link to it.


It sounds like circular reasoning. It is, and that is why the problem is so tricky. By seizing on this circle and turning it into an advantage, link analysis provides a jiu-jitsu-style solution to web search. The approach is built on ideas from linear algebra, the study of vectors and matrices. Whether you want to discover patterns in a huge amount of data or perform gigantic calculations with millions of variables, linear algebra provides the tools you need. It laid the foundation for the PageRank algorithm, which underlies Google. It also helps scientists classify human faces, analyze Supreme Court voting patterns, and it helped win the Netflix Prize (awarded to the team that improved by more than 10% the Netflix system that recommends movies to watch).

To explore linear algebra in action, let's take a look at how the PageRank algorithm works. And to bring out its essence without too much fuss, imagine a toy web, consisting of only three pages, interconnected as follows:

Fig. 1. A small network of three pages

Arrows indicate that page X contains a link to page Y, but Y does not reciprocate. Instead, Y refers to Z. Meanwhile, X and Z refer to each other.

Which pages are the most important on this little web? You might think this cannot be determined without information about their content. But that way of thinking is outdated. Worrying about content turned out to be an impractical way to rank pages. Computers have little understanding of semantic content, and people cannot keep up with the thousands of new pages that appear on the web every day.

The approach devised by Larry Page and Sergey Brin, then university graduate students and later the founders of Google, was to let pages rank themselves by voting with their links. In the example above, pages X and Y both link to Z, making Z the only page with two inbound links. It is therefore the most popular page in this little web. However, if those links come from pages of dubious quality, that should count against it. Popularity by itself means nothing. What matters is having links from good pages.

And here we are again in a vicious circle. A page is considered good if it has good pages linking to it, but who decides which ones are good in the first place? The network decides. Here's how it all goes.

Google's algorithm assigns each page a fractional number between 0 and 1. This value, called PageRank, measures the "importance" of a page relative to the others by calculating the proportion of time a hypothetical surfer would spend visiting it. Whenever a page offers more than one outgoing link, the surfer picks one at random, with equal probability. Under this approach, pages are considered more authoritative the more often they are visited.

And since PageRank indices are defined as proportions, their sum over the entire network should be 1. This conservation law suggests a different, perhaps more tangible, way of visualizing PageRank. Think of it as a liquid substance flowing through the web, decreasing on bad pages and increasing on good ones. With the help of an algorithm, we are trying to determine how this liquid is distributed over the Internet over a long period of time.

The answer is obtained by the following repeated process. The algorithm starts with a guess, then updates all the PageRank values by distributing the fluid equally across outbound links, and repeats this for several rounds until a steady state is reached in which every page holds its rightful share.

Initially, the algorithm assigns equal shares, so that every page receives the same amount of PageRank. In our example there are three pages, and each of them starts with a score of 1/3.

Fig. 2. Initial PageRank values

The scores are then updated to better reflect the value of each page. The rule is that each page takes its PageRank from the previous round and distributes it evenly among all the pages it links to. Page X's updated value after the first round is therefore still 1/3, because that is how much PageRank it receives from Z, the only page that links to it. Page Y's score drops to 1/6, since it receives only half of X's PageRank from the previous round. The other half goes to page Z, which makes Z the winner at this stage: it collects 1/6 from X plus 1/3 from Y, for a total of 1/2. Thus, after the first round, we have the following PageRank values:

Fig. 3. PageRank values after one update

The update rule stays the same in subsequent rounds. If x, y and z denote the current scores of pages X, Y and Z, then the update produces the new scores

$$
x' = z, \qquad y' = \tfrac{1}{2}x, \qquad z' = \tfrac{1}{2}x + y,
$$

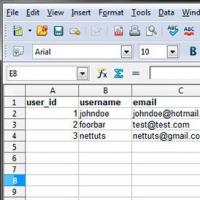

where the primes indicate that an update has occurred. Such repetitive calculations are conveniently done in a spreadsheet (or by hand if the network is small, as in our case).

After ten repetitions, we find that the numbers hardly change from one update to the next. At this point, X's share is 40.6% of the total PageRank, Y's share is 19.8%, and Z's is 39.6%. These values are suspiciously close to 40%, 20% and 40%, which suggests that the algorithm converges to them. It does. These limiting values are what the Google algorithm defines as the PageRank of the pages in this network.
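The repeated update described above can be reproduced with a few lines of Python. This is a minimal sketch of the basic rule only; the names `LINKS`, `update` and `iterate` are chosen here for illustration, and exact fractions are used so that no rounding error creeps in.

```python
from fractions import Fraction

# Outgoing links of the toy web: X -> Y, X -> Z, Y -> Z, Z -> X.
LINKS = {"X": ["Y", "Z"], "Y": ["Z"], "Z": ["X"]}


def update(ranks, links):
    """One round: every page splits its current score evenly among the pages it links to."""
    new_ranks = {page: Fraction(0) for page in links}
    for page, targets in links.items():
        share = ranks[page] / len(targets)
        for target in targets:
            new_ranks[target] += share
    return new_ranks


def iterate(links, rounds):
    """Start from equal shares and apply the update rule `rounds` times."""
    ranks = {page: Fraction(1, len(links)) for page in links}
    for _ in range(rounds):
        ranks = update(ranks, links)
    return ranks


if __name__ == "__main__":
    # Print the shares after ten rounds as percentages.
    for page, score in iterate(LINKS, 10).items():
        print(page, f"{float(score):.1%}")
```

After ten rounds the exact scores are 13/32, 19/96 and 19/48, which round to the 40.6%, 19.8% and 39.6% quoted in the text.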

Fig. 4. Limiting PageRank values

The conclusion for this small network is that pages X and Z are equally important, even though Z has twice as many incoming links. This is understandable: page X is equal to Z in importance, since it receives full approval from it, but in return gives it only half of its approval. The other half goes to Y. This also explains why Y only gets half of X and Z's shares.

Interestingly, these values can be obtained without resorting to repeated iteration. You just need to think about the conditions that define the steady state. If nothing changes after the next update, then x' = x, y' = y and z' = z. Replacing the primed variables in the update equations with their unprimed counterparts, we obtain the system of equations

$$
x = z, \qquad y = \tfrac{1}{2}x, \qquad z = \tfrac{1}{2}x + y,
$$

whose solution satisfies x = 2y = z. Since the sum of x, y and z must equal 1, it follows that x = 2/5, y = 1/5 and z = 2/5, which agrees with the values found earlier.
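The elimination can be written out step by step. The following is a worked sketch that uses only the update rule described earlier (X receives everything from Z, Y receives half of X, Z receives half of X plus all of Y) and the requirement that the scores sum to 1:

```latex
\begin{aligned}
x &= z, \qquad y = \tfrac{1}{2}x, \qquad z = \tfrac{1}{2}x + y \\
z &= \tfrac{1}{2}x + \tfrac{1}{2}x = x
  \quad \text{(the third equation agrees with the first)} \\
x + y + z &= x + \tfrac{1}{2}x + x = \tfrac{5}{2}x = 1
  \;\Longrightarrow\; x = \tfrac{2}{5}, \quad y = \tfrac{1}{5}, \quad z = \tfrac{2}{5}.
\end{aligned}
```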

Difficulties begin where there are a huge number of variables in the equations, as happens in a real network. Therefore, one of the central tasks of linear algebra is the development of faster algorithms for solving large systems of equations. Even minor improvements in these algorithms are felt in almost all areas of life - from flight schedules to image compression.

However, linear algebra's most significant victory, in terms of its role in everyday life, was by far the solution to the Zen paradox for page ranking. "A page is good to the extent that good pages link to it." Translated into mathematical symbols, this criterion becomes the PageRank algorithm.

The Google search engine became what it is today, after solving the equation that we just solved, but with billions of variables - and, accordingly, with billions in profits.

According to Google, the term PageRank comes from the name of one of Google's founders, Larry Page, and not from the word "page".

For the sake of simplicity, I present only the basic version of the PageRank algorithm. It has to be modified to handle networks with certain other structural properties. Suppose there are pages on the web that link to others but receive no links in return. During the update process these pages lose their PageRank: they give it away to others, and it is never replenished. Eventually they all end up with a PageRank of zero, and from this point of view they become indistinguishable.

On the other hand, there are networks where certain pages or groups of pages are open to accumulating PageRank but do not link to other pages. Such pages act as PageRank accumulators.

To avoid similar results, Brin and Page modified their algorithm as follows. After each step in the data update process, all current PageRank values are reduced by a constant factor, so that their sum is less than 1. The remaining PageRank is then distributed evenly among all nodes in the network, as if "falling from the sky." Thus, the algorithm ends with an adjustment action that distributes the PageRank values among the "poorest" nodes.
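The modification described above can be sketched as follows. This is an illustration under stated assumptions rather than Google's implementation: the scaling factor `damping=0.85`, the function name `damped_pagerank`, the extra page W (which links out but has no inbound links) and the handling of pages without outgoing links are all choices made for this example.

```python
def damped_pagerank(links, damping=0.85, rounds=100):
    """Basic update followed by scaling and an equal top-up for every page."""
    pages = list(links)
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}
    for _ in range(rounds):
        new_ranks = {page: 0.0 for page in pages}
        for page, targets in links.items():
            # Assumption of this sketch: a page with no outgoing links
            # spreads its score over all pages instead of hoarding it.
            receivers = targets if targets else pages
            share = ranks[page] / len(receivers)
            for target in receivers:
                new_ranks[target] += share
        # Scale every score down by the constant factor, then hand the
        # removed amount back in equal portions ("falling from the sky").
        ranks = {
            page: damping * new_ranks[page] + (1.0 - damping) / n
            for page in pages
        }
    return ranks


# Hypothetical network: the three-page web plus W, which nobody links to.
EXAMPLE_LINKS = {"W": ["X"], "X": ["Y", "Z"], "Y": ["Z"], "Z": ["X"]}

if __name__ == "__main__":
    for page, score in damped_pagerank(EXAMPLE_LINKS).items():
        print(page, round(score, 4))
```

With the basic rule, W would drop to zero after the first round; here it keeps the small equal share that every page receives in each round, and the scores still sum to 1.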

For a more in-depth look at the mathematics of PageRank and interactive research, see E. Aghapour, T. P. Chartier, A. N. Langville, and K. E. Pedings, Google PageRank: The mathematics of Google (

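In addition to graphical and set-theoretic representations, graphs are often given an algebraic (matrix) representation.

Consider a digraph G containing n vertices and m edges. The adjacency matrix of the digraph G is the n × n matrix A whose element in row i and column j equals 1 if there is an edge from vertex i to vertex j, and 0 otherwise.

The adjacency matrix is sometimes called the relationship matrix, or the matrix of direct links.

The incidence matrix of the digraph G is the n × m matrix B in which each row corresponds to a vertex and each column corresponds to an edge and marks the two vertices that the edge joins.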

To introduce an adjacency matrix, you need to number the vertices, and for the incidence matrix, the edges of the graph.
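As an illustration, both matrices can be built for the three-page web from the PageRank example, numbering the vertices X = 0, Y = 1, Z = 2 and the edges in the order listed. This is a minimal sketch; the function names are hypothetical, and the sign convention for the incidence matrix (+1 where an edge leaves a vertex, -1 where it enters) is one common choice assumed here, not one taken from the text.

```python
def adjacency_matrix(n, edges):
    """n x n matrix with a[i][j] = 1 when there is an edge from vertex i to vertex j."""
    a = [[0] * n for _ in range(n)]
    for i, j in edges:
        a[i][j] = 1
    return a


def incidence_matrix(n, edges):
    """n x m matrix: column k has +1 at the tail and -1 at the head of edge k (assumed convention)."""
    b = [[0] * len(edges) for _ in range(n)]
    for k, (i, j) in enumerate(edges):
        b[i][k] = 1
        b[j][k] = -1
    return b


# Edges of the toy web: X -> Y, X -> Z, Y -> Z, Z -> X.
EDGES = [(0, 1), (0, 2), (1, 2), (2, 0)]

if __name__ == "__main__":
    for row in adjacency_matrix(3, EDGES):
        print(row)
    for row in incidence_matrix(3, EDGES):
        print(row)
```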

The algebraic representation makes it possible to algorithmize the procedure for determining the structural quantitative parameters of the system in a form convenient for computer programming.

Let us now consider some methods for solving practical problems using the mathematical formalism introduced by us.

Ranking of system elements

The analysis of connections in a graph consists, first of all, in finding and evaluating paths between its vertices. In addition to the direct search for a path in a certain communication system, this problem includes, for example, the problem of choosing the optimal strategy, etc. Indeed, it is enough to associate graph vertices with some goals, and path lengths with the costs of achieving these goals, in order to obtain the problem of choosing a strategy for achieving the goal with the least cost.

Tracing paths by eye on a drawing of a graph with a complex structure (in practice, graphs with more than 100 vertices have to be analyzed) is difficult and error-prone. Let us consider one of the algebraic methods that is convenient for use on a computer. Starting from the matrix of direct links […], this method makes it possible to build the full path matrix […], where […] is the number of paths from vertex i to vertex j ([…] = 0), or to limit ourselves to finding just one of its elements.

The numbers […], or their literal expressions, are determined using a special kind of determinant: quasi-minors (unsigned determinants). The following formula holds:

[…].

The expression […] is called the quasi-minor of element […] of the matrix […]. The sign […] is the quasi-minor symbol, and […] denotes the matrix with the l-th row and the k-th column crossed out, which fits into the quasi-minor symbol just as a matrix fits into the symbol of an ordinary minor.

The calculation of the quasi-minor is reduced to its decomposition into quasi-minors of smaller order by the formula

The calculation procedure is in many ways similar to the calculation procedure for ordinary determinants, but some skill is required to master this method.

Example.

Let the matrix of direct links have the form

It is necessary to find all paths leading from vertex 1 to 5 and count their number.

For the example under consideration, we get

First, in the matrix […], column 1 is crossed out (it corresponds to the vertex from which the path starts), along with row 5 (it corresponds to the vertex at which the path ends). This amounts to removing from the graph all edges that lead into vertex 1 and all edges that leave vertex 5. It is more convenient to leave the position and numbering of the remaining rows and columns unchanged. Next, the resulting quasi-minor is expanded along the non-zero elements of the 1st row.

The expansion of the first term is carried out along the second row, the second along the third, and the third along the fourth; that is, the number of the row along which the expansion is carried out equals the number of the column in which the last term of the expansion was located.

If we now set the non-zero elements […] = 1 and perform the operations according to the rules of ordinary arithmetic, we get the number of paths […].

If, in the resulting expression, we perform the operations according to the rules of Boolean algebra, we get an element of the complete matrix of connections […], which characterizes the connectivity of the graph. The elements of the complete matrix of connections […] are defined as follows:

[…] = 1 if vertex i is connected to vertex j by at least one path,

[…] = 0 otherwise.

It is usually assumed that […].
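Both quantities can also be computed directly on a computer. The sketch below does not implement the quasi-minor expansion; it swaps in two standard techniques instead: a depth-first search that counts paths without repeated vertices, and Warshall's algorithm for the Boolean matrix of connections. The 5-vertex matrix is hypothetical (the matrix from the example is not reproduced here), and vertices are numbered from 0, so "from vertex 1 to vertex 5" becomes `0` to `4`.

```python
def count_simple_paths(adj, start, goal):
    """Count paths from start to goal that never visit a vertex twice (depth-first search)."""
    def dfs(vertex, visited):
        if vertex == goal:
            return 1
        total = 0
        for nxt, linked in enumerate(adj[vertex]):
            if linked and nxt not in visited:
                total += dfs(nxt, visited | {nxt})
        return total

    return dfs(start, {start})


def connection_matrix(adj):
    """Warshall's algorithm: r[i][j] = 1 if at least one path leads from i to j."""
    n = len(adj)
    r = [row[:] for row in adj]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if r[i][k] and r[k][j]:
                    r[i][j] = 1
    return r


# Hypothetical matrix of direct links for a 5-vertex digraph.
ADJ = [
    [0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0],
]

if __name__ == "__main__":
    print(count_simple_paths(ADJ, 0, 4))
    for row in connection_matrix(ADJ):
        print(row)
```

In this sketch the diagonal of the connection matrix is left as computed (1 only if a vertex lies on a cycle); a different convention for the diagonal would need one extra line.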

Connectivity is the most important characteristic of the structural scheme of a system. The more completely the complete matrix of connections is filled, the better the structure. A large number of zeros indicates serious flaws in the structure of the system.

Another important characteristic of the structure is how importance is distributed among the elements of the system. A quantitative measure of significance, the rank of an element, was first explicitly formulated in the analysis of dominance relations (superiority, predominance) in groups of individuals (humans, animals).

Using the full path matrix […], the ranks of the elements are determined by the formula

[…].

It should be borne in mind that the significance of an element is determined not by the value […] itself, but by comparing the ranks of all elements, i.e. the rank […] is a relative measure of importance.

The higher the rank of a given element, the greater the number of paths that connect it with other elements, and the greater the number of elements whose normal operation will be disrupted if it fails. Therefore, when drawing up a program for ensuring the reliability of the system under consideration, special attention must be paid to elements with a high rank.

For systems with a network-like structure, the presence of elements with ranks that are significantly higher than those of the others usually indicates a functional overload of these elements. It is desirable to redistribute links, to provide workarounds in order to equalize the significance of the elements of this system.

There are other methods for determining ranks. The choice of an appropriate technique is determined by the specifics of the task.

It should be noted that there are structures, the ranking of elements of which may lose its practical meaning. These are primarily hierarchical structures. The significance of an element in them is determined by the level of the hierarchy.


Ranking algorithms - methods for assessing the quality of sites

The TOP-10 should include only those sites that fully respond to the user's request. High-quality results are provided by special mathematical formulas that determine the "usefulness" of a particular site. Search engines do not disclose information about their algorithms, they provide webmasters with only general recommendations for improving and optimizing sites. Nevertheless, optimizers have learned to identify certain patterns on the basis of which a strategy is developed.


What criteria does the ranking algorithm take into account

Search engines evaluate websites in many ways. The most important criteria include:

- uniqueness and optimization of texts (presence of key phrases, keyword density, proportion of filler text);

- domain age;

- quantity and quality of incoming links;

- type of CMS used;

- site page loading speed;

- errors in the code.

By understanding how the search engine's algorithm works, a webmaster can influence the site's position in the search results. To do this, the pages of the web project need to be "fitted" to the search engine's requirements. In particular, key phrases need to be embedded in the title and description meta tags, as well as directly in the page text. If you are promoting a site for a location-dependent query, then, in addition to the keywords, you should add the name of the relevant city or region.

This is interesting! Periodically, the search engine is upgraded, which leads to a fundamental change in existing algorithms. Such measures are aimed at combating search spam. Often, a change in the Yandex algorithm leads to a deterioration in the positions of sites promoted by "black" and "gray" methods.

Search sanctions

If the webmaster is clearly trying to manipulate Yandex algorithms, then the search engine can apply various sanctions to him. The following problems may occur:

- Downgrading in SERPs

- Poor indexing of new pages (or falling out of the index of old documents)

- Full or partial ban

Yandex algorithms impose sanctions for excessive optimization of texts, for example, for placing lists of key phrases on pages. The filter can be applied for "invisible" text that blends into the background. Also subject to sanctions are doorway sites and Internet sites that copy someone else's content.

New Yandex Algorithm - Minusinsk

This algorithm demotes a web project for using SEO links. It targets sites that buy thousands of links through automated exchanges such as Sape. From Yandex's point of view, a link is considered an SEO link if it comes from a low-quality donor site and has a commercial anchor.

The "Minusinsk" filter may be triggered by a sharp increase in the number of inbound links. Therefore, to protect your web project from this sanction, you should acquire links gradually and dilute anchor links with non-anchor hyperlinks.

The ranking method is the simplest method of job evaluation: all jobs are placed in order according to their value to the organization. The content of each job is compared with the content of a job taken as the starting point, and the job is placed accordingly. Often such a comparison is based on the job description alone, without a full analysis of the job. Pay is then assigned according to the rank or grade into which the job falls. This method is very simple and may be useful in small firms with little or no job variety, or with a fairly homogeneous "family" of jobs, but it may be completely unusable in larger organizations with a wide variety of jobs of varying content (some of them very complex). In that case, jobs should be grouped on the basis of an approved scheme, especially if a large number of individual grades and pay rates is not acceptable.

The ranking method is relatively simple and inexpensive to use, and can be implemented fairly quickly. On the other hand, the ranking of works can be done on the basis of incomplete information and without regard to certain standards. Often, job rankers do not have specific knowledge of the jobs they rank. This means that the rankings can be quite superficial and result in rankings of performers rather than the types of work themselves. In addition, the prevailing pay rates for them often influence the ranking positions of various types of jobs.

2. Classification method

This method is widely used to pay employees of institutions, as well as to classify the skills of employees in the manufacturing industry. It differs from the ranking method in that the structure of gradations and the corresponding pay are established before a thorough study of various types of work is carried out. The number of gradations is determined, their functions are established and, accordingly, the payments for each of them are clear.

In this approach, the descriptions of the various grades are designed to reflect marked differences in skill levels, responsibilities, and job requirements. Jobs in which employees are only required to follow simple instructions under constant supervision correspond to the lowest grade of the scale. Each subsequent step reflects a higher level of skills, responsibilities, etc., and less supervision. The job is not divided into its component parts but, as with the ranking method, is considered as a whole. Fig. 3 gives an example of grades and a brief description of office work.

Fig. 3. Gradations of office work: a general description of the work.

Scale "D". Normal routine office work.

Scale "C". Work that requires special training, knowledge and experience in certain areas of activity. Certain types of jobs require an above-average appearance. High degree of reliability and precision in working with details. Control over the work performed, with the exception of general management, is not expected.

Scale "B". A job that requires serious special knowledge and experience in a particular field of activity. Some jobs require very high personal qualities. High degree of reliability and precision in working with details. The completed work does not need additional verification. It assumes initiative and individual responsibility in interpreting instructions for performing a particular job and making the right decision. Can lead a small or medium group of staff.

Scale "A". A job that requires very serious special knowledge and experience in certain areas. Ability to organize and conduct some operations on one's own initiative. Responsibility for the work and behavior of a large group of employees and the ability to evaluate the effectiveness of their work and abilities. A high degree of responsibility assumes the ability to play the role of a leader and establish contacts both inside and outside the organization.

The classification method is also relatively simple, inexpensive and easy to apply. Although the results obtained may be quite satisfactory, the amount of remuneration that must be paid for the performance of a particular work may be highly dependent on existing rates. Moreover, the written description of each stage is very difficult and will become even more complicated in large organizations. In these cases it is very often necessary to have many different stages, but then this will deprive the method of its main advantage - simplicity. In addition, it is often very difficult to place a particular job on any level, since its characteristics may overlap with those of another type of work, and the level of analysis carried out is not always detailed enough to correctly classify this type of work.

A slightly more sophisticated version of this approach involves the participation of a panel of arbitrators representing all interested parties. Arbitrators study 30 types of proposed work and compare them with each other. A computer is used to carry out the overall ranking of paired comparisons, which gives a certain scientific touch to the process and makes its results more acceptable. However, this impression is very misleading.

Factor Comparison Method

This method involves ranking various types of work in accordance with certain factors, as a result of which their payment is assigned. The first task in applying this method is to select and clearly describe the factors to be used, usually skills, mental development requirements (level of education and training received), physical requirements, responsibilities and working conditions. This list may change depending on the needs of the organization.

Certain key jobs are selected, that is, jobs considered representative of the other types of jobs and of their pay rates, and job descriptions are drawn up for them. The distinguishing feature of this method is that existing pay rates for the key jobs are used to fix a set of points on the scale of relative pay rates that results from the evaluation. The selected jobs should be clearly distinguishable from each other and described accordingly, possibly using a job analysis. Enough key jobs should be selected to provide the reference points needed to compare all types of jobs, from the simplest to the most complex.

The next step for the individual or committee is to rank the key jobs in order of importance based on the selected factors.

A similar procedure is the subsequent appointment of certain payments for various factors for key types of work. Rates for the performance of each type of work are broken down and set in proportion to the factors used. For example, if the work of a toolmaker can be conditionally assessed by 20 units of payment, then you can assign: 9 - for skills and abilities, 5 - for the requirement for mental abilities, 2 - for physical requirements, 3 - for responsibility and 1 for working conditions.

After such a ranking, the results of the ranking of works by factors and by the assigned payment are compared. Any inconsistencies in the two different ranking systems can be corrected by adjusting the rates or content of the entries. If this is not possible, then this work cannot be used as a key work.

At the final stage, all remaining types of work can be placed on a scale depending on their relationship with key types of work in terms of their content, each factor is considered separately until new scales of pay rates for all types of work in the enterprise are created.

The essence of the factor comparison method is that rates for key types of work are considered final and correct, other types of work are ranked according to each of the factors and adjusted to the main scale. This method is similar to the ranking method, however, it requires ranking works on individual factors twice instead of ranking the entire work once.

The main advantage of the factor comparison method is that it takes into account factors that appear to determine the relative value of different types of work. It allows you to make a basic scale, expressed in monetary units, and non-key work can be "measured" on it. This is a more accurate and more flexible method than the previous two. On the other hand, it takes more time to implement and use this method, and it is very difficult to explain it to employees. In addition, some pay inequity may arise due to the inadequacy of existing rates or the way in which managers or union representatives consider the relative value of different jobs. In addition, despite the obvious scientific nature of the very process of determining pay, the proportional distribution of pay for work according to various factors is inevitably quite arbitrary. For these reasons, this method is not popular today.

Scoring method

The method of determining the rating is focused on increasing objectivity. It is based on the assumption that there are factors that are common to all types of work, although it allocates scores to factors rather than determining the value of various factors in monetary terms.

(1) skills,

(2) effort,

(3) responsibility,

(4) working conditions.

In general, they can be subdivided into 10-15 subfactors. The requirements for these subfactors can be further subdivided into several levels (usually between 5 and 8). Fig. 3 shows the factors, levels and scores that were used in one real plan.

As you can see, the number of points assigned to each factor is not the same. This is due to the application of the method of weighting the scoring ranking. With the direct method of scoring, the distribution of scores for all factors is the same.

select factors that are common to all types of work being assessed;

determine the number of levels that should be different for each factor when comparing works;

establish the specific weight of each factor;

determine the value of each level or each factor in points.

Next, job descriptions are prepared for each type of work, usually based on its systematic analysis. Different types of work are evaluated based on these job descriptions, as well as through:

rating all factors on one job, then all factors on the second job, etc., or

evaluation of all types of work on the first factor, then on the second, etc.

The second method is usually used because it facilitates comparative analysis of the relative value of different types of work. The number of points received by each type of work on various factors is summed up, and then the total number of points scored is converted into monetary units using the methods described below.
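The summation step can be illustrated with a short sketch. Everything in it is hypothetical: the point values in `PLAN`, the two jobs in `JOBS`, and the function names are made up for illustration and are not taken from the plan discussed in the text. The factors carry different maximum scores, as in the weighted variant of the method.

```python
# Hypothetical weighted plan: points awarded at levels 1-5 of each factor.
# All numbers are illustrative assumptions, not data from a real plan.
PLAN = {
    "skills": [20, 40, 60, 80, 100],
    "effort": [10, 20, 30, 40, 50],
    "responsibility": [15, 30, 45, 60, 75],
    "working conditions": [5, 10, 15, 20, 25],
}

# Hypothetical jobs with the level assigned on each factor.
JOBS = {
    "toolmaker": {"skills": 4, "effort": 3, "responsibility": 3, "working conditions": 2},
    "clerk": {"skills": 2, "effort": 1, "responsibility": 2, "working conditions": 1},
}


def job_points(levels, plan=PLAN):
    """Sum the points a job earns for the level it was assigned on every factor."""
    return sum(plan[factor][level - 1] for factor, level in levels.items())


def rank_jobs(jobs, plan=PLAN):
    """Return job names ordered from the highest total score to the lowest."""
    return sorted(jobs, key=lambda name: job_points(jobs[name], plan), reverse=True)


if __name__ == "__main__":
    for name in rank_jobs(JOBS):
        print(name, job_points(JOBS[name]))
```

The totals produced this way are scores, not pay; converting them into monetary units is a separate step.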

The factors used in this example of a plan based on the scoring method show how, in this case, the total score for each factor was distributed among its subfactors.

| Factor and subfactor | Points by levels | Total possible points |
|---|---|---|
| Skills | | |
| 1. Education | | |
| 2. Experience | | |
| 3. Initiative and ingenuity | | |
| Effort | | |
| 4. Physical effort | | |
| 5. Mental effort | | |
| Responsibility | | |
| 6. Equipment or process | | |
| 7. Materials or products | | |
| 8. Safety of others | | |
| 9. The work of others | | |
| Working conditions | | |
| 10. Working conditions | | |
| 11. Possible dangers | | |

In the example above, the factors can be used for most jobs. The most popular scheme that is applied to managers, the so-called "Hay Guide Chart and Profile Method" (HGC), uses three fairly general factors (which make the method acceptable for all types of work at various levels of the organization):

Execution of work on the basis of "know-how" - the overall set of skills required to perform a job at an intermediate level is characterized by both breadth (number of skills) and depth (their level)

Problem solving - original, independent thinking, which is required for analysis, evaluation, formation of arguments and conclusions, measures the intensity of the thought process ...

Responsibility - responsibility for actions and for consequences ... measured by the impact of work on the final results ...

The main advantage of the score ranking method is that it only calculates scores, not wages. Therefore, its use is not significantly affected by existing pay rates, unlike the previous three methods. The method also claims to be more objective, since it is usually based on data on each type of work obtained from the results of their analysis, which makes it possible to give more convincing answers to all questions of employees regarding the reliability of the assessment. However, it is generally recognized that this method also contains many arbitrary and subjective elements, especially:

in choosing the number and types of factors and levels to be used in the assessment;

in the distribution of specific weights or points by factors or their various levels.

It is very difficult to make decisions on these two points. It is practically impossible to avoid subjectivity, since there are no objective criteria, and the decisions made may exaggerate the importance of some types of work compared to others.
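A small sketch can show how much the outcome depends on these choices. The jobs, factor levels, and both sets of weights below are invented for illustration; the point is only that two equally defensible weightings reverse the order of the same two jobs.

```python
from typing import Dict, List

# Hypothetical factor levels for two jobs.
levels: Dict[str, Dict[str, int]] = {
    "warehouse_worker": {"skill": 1, "effort": 4},
    "bookkeeper": {"skill": 3, "effort": 1},
}

# Two hypothetical weightings (points per level) chosen by different evaluators.
skill_heavy = {"skill": 30, "effort": 10}
effort_heavy = {"skill": 10, "effort": 30}


def rank_jobs(job_levels: Dict[str, Dict[str, int]],
              weights: Dict[str, int]) -> List[str]:
    """Return job names ordered from highest to lowest total points."""
    scores = {
        job: sum(weights[factor] * level for factor, level in factors.items())
        for job, factors in job_levels.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


print(rank_jobs(levels, skill_heavy))
print(rank_jobs(levels, effort_heavy))
```

Nothing in the data says which weighting is right, which is the subjectivity the text describes.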

This method requires good technical skills. Being less flexible than the others, it does not easily accommodate changes in general economic conditions and other factors when pay structures are designed. This is the method critics have in mind when they say that performance appraisal is inherently a static method that has yet to be adapted to a dynamic situation. However, performance appraisal can be used to collect information about changes in the content of the work and to translate these changes into cost units.

The most common scoring method is the Hay method (originally known as Hay MSL). It includes factors such as planning, organizing, evaluating, developing, and coordinating, which are particularly well suited to the job of a manager.

It must be remembered that all methods, regardless of their complexity and seeming scientific nature, are initially based on arbitrary decisions and very subjective assessments, and to a large extent depend on the existing ratio of different types of work. In many cases, performance appraisal is the best we can do, but its results should never be regarded as absolutely correct and undeniable.

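| Classification | Ranking | Comparison of factors | Score ranking |
| --- | --- | --- | --- |
| non-quantitative | non-quantitative | quantitative | quantitative |
| scale defined | jobs are compared with each other | jobs are compared with each other | scale defined |
| the work is considered as a whole | the work is considered as a whole | factors are considered | factors are considered |
| cheaper and easier | cheaper and easier | complex and costly | complex and costly |
| suitable for a limited range of jobs | suitable for a limited range of jobs | suitable for a wide range of jobs | suitable for a wide range of jobs |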


For a very long time, Yandex ranking algorithms remained a "secret" for users. Yandex search engine specialists preferred not to inform Internet users about changes in ranking algorithms.

Yandex ranking algorithms

Only in 2007 did Yandex employees begin to inform users about innovations in the search algorithm. This made site promotion a bit easier for many webmasters.

It should be noted that Yandex ranking algorithms are constantly changing. These changes add newer and more advanced functionality, which makes the search engine easier to work with. They also eliminate bugs, update filters and limiters, and tune the results so that they match the original query more accurately.

In May 2008, Yandex specialists released a new algorithm called Magadan.

Algorithm Magadan

In this algorithm, the number of ranking factors was doubled and the classifier that determines the user's location (geotargeting) was significantly improved. The Magadan algorithm also introduced new classifiers for content and links. The speed with which the search engine finds information for the queries entered increased significantly, and the search engine became able to find texts written in pre-revolutionary spelling.

In July of the same year, a new version of the Magadan algorithm was released. It included additional ranking factors, such as determining the uniqueness of a text, detecting pornographic content, and so on.

As early as September 2008, Yandex released a new algorithm called "Nakhodka".

Thanks to this algorithm, the Yandex search engine's work with dictionaries improved significantly, as did the quality of ranking for queries that include stop words (conjunctions and prepositions). The algorithm also introduced a completely new approach to machine learning: the search engine began to distinguish between different types of queries and to adjust the ranking factors in the ranking formula accordingly.
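The idea of changing the weight of ranking factors depending on the query can be illustrated with a toy sketch. Yandex has not published its formula, so everything here is an assumption made for illustration: the two query classes, the crude classification rule, the factor names, and the weights.

```python
from typing import Dict

# Hypothetical weights of ranking factors for each query class.
WEIGHTS_BY_QUERY_CLASS: Dict[str, Dict[str, float]] = {
    "navigational": {"text_match": 0.2, "links": 0.8},
    "informational": {"text_match": 0.7, "links": 0.3},
}


def classify_query(query: str) -> str:
    """Toy rule: a query that looks like a site address is navigational."""
    return "navigational" if "." in query else "informational"


def score(query: str, doc_factors: Dict[str, float]) -> float:
    """Weighted sum of factor values, with weights chosen by query class."""
    weights = WEIGHTS_BY_QUERY_CLASS[classify_query(query)]
    return sum(weights[name] * doc_factors[name] for name in weights)


# The same document scores differently for different kinds of queries.
doc = {"text_match": 0.9, "links": 0.1}
print(score("yandex.ru", doc))
print(score("how to bake bread", doc))
```

A real system would learn both the classes and the weights from data; the sketch only shows that one document can receive different scores under different query classes.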

In April 2009, a new algorithm called "Arzamas" or "Anadyr" was rolled out in the Yandex search engine.

Arzamas algorithm

Thanks to this algorithm, the Yandex search engine learned to understand the Russian language more accurately, which made it possible to resolve ambiguous words in queries more reliably. The algorithm also allowed the search engine to take into account the region in which the user is located, so users began to receive more accurate and useful results that were most relevant to their region.

It should be noted that, as a result, the same query entered by users in different regions returns different results. The ranking formula was also significantly improved for multi-word queries. Tougher filters were introduced for pages with pop-under banners (a pop-under banner appears on all pages of the site and is unrelated to the site's subject), clickunder advertising (which appears when the visitor first clicks on the page), and Bodyclick (a teaser advertising service).

In November 2009, a new algorithm called "Snezhinsk" was released.

Snezhinsk algorithm

This algorithm introduced additional ranking features and parameters, making it possible to apply several thousand search parameters to a single document. New regional parameters were added, filters were introduced against sites that deliberately try to influence search results (put simply, against junk sites), and the search for original content on the Internet was significantly improved. The algorithm also brought in MatrixNet, a self-learning system.

In December 2009, a new algorithm called "Konakovo" appeared.

This algorithm was simply an improved version of Snezhinsk in which only local ranking was improved.

In September 2010, a new algorithm, "Obninsk", was released. It improved ranking for queries that do not depend on the user's region and limited the influence of artificial links on ranking. It also significantly improved the procedure for identifying the original author of a text and considerably expanded the transliteration dictionary.

In December 2010, a new algorithm called "Krasnodar" was released.

A new technology called Spectrum was developed specifically for this algorithm. Thanks to it, the Yandex search engine began to classify queries and extract objects from them, assigning each query to a certain category (goods, services, etc.).

Another dramatic move by Yandex: its ranking algorithms will no longer take links into account. According to the latest announcements, ranking without links will be launched in early 2014, when Yandex will remove all link factors from its ranking factors. This innovation will affect only commercial queries and will first be tested in Moscow and the Moscow region. The authors of the innovation are the creators of Yandex's AGS.
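The announced rule (link factors are dropped only for commercial queries, and at first only in Moscow and the Moscow region) can be pictured with a short sketch. The factor names and values are hypothetical, since Yandex's actual ranking factors are not public.

```python
from typing import Dict

# Hypothetical factor names; Yandex's real ranking factors are not public.
LINK_FACTORS = {"inbound_links", "anchor_text"}
TEST_REGIONS = {"Moscow", "Moscow region"}


def active_factors(factors: Dict[str, float],
                   is_commercial: bool,
                   region: str) -> Dict[str, float]:
    """Drop link factors for commercial queries in the test regions only."""
    if is_commercial and region in TEST_REGIONS:
        return {name: value for name, value in factors.items()
                if name not in LINK_FACTORS}
    return dict(factors)


doc = {"text_relevance": 0.8, "inbound_links": 0.6, "anchor_text": 0.4}
moscow_commercial = active_factors(doc, is_commercial=True, region="Moscow")
elsewhere = active_factors(doc, is_commercial=True, region="Kazan")
print(moscow_commercial)
print(elsewhere)
```

In this sketch, non-commercial queries and queries from other regions keep the full set of factors, matching the limited scope described in the announcement.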

Choosing the best navigator for pedestrians

Choosing the best navigator for pedestrians Best kidnapping movies How to escape from an intruder

Best kidnapping movies How to escape from an intruder SQL query language Ready-made sql queries

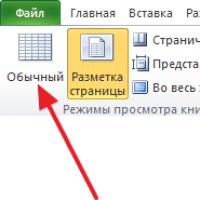

SQL query language Ready-made sql queries Removing headers and footers in Microsoft Excel

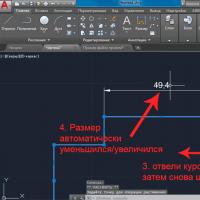

Removing headers and footers in Microsoft Excel Dimension Styles in AutoCAD AutoCAD Dimension Style Manager

Dimension Styles in AutoCAD AutoCAD Dimension Style Manager Internet service e-textbook

Internet service e-textbook How to create a layer in AutoCAD?

How to create a layer in AutoCAD?