
G-Sync Technology Overview | A Brief History of Fixed Refresh Rates

Long ago, monitors were bulky and contained cathode ray tubes and electron guns. The electron guns fire electrons at the screen to make colored phosphor dots glow; these dots are what we call pixels. They draw each horizontal scan line from left to right, working from the top of the screen to the bottom. Varying the speed of the electron gun from one full refresh to the next was not very practical, and there was little need for it before 3D games arrived. CRTs and the related analog video standards were therefore designed around a fixed refresh rate.

LCD monitors gradually replaced CRTs, and digital connectors (DVI, HDMI and DisplayPort) replaced analog VGA. But the standards bodies (led by VESA) stuck with fixed refresh rates. Movies and television still rely on input at a constant frame rate, so once again there seemed to be no need to switch to a variable refresh rate.

Variable frame rates and fixed refresh rates do not match

Before modern 3D graphics, fixed refresh rates were not a problem for displays. The problem appeared with powerful GPUs: the rate at which a GPU renders individual frames (the frame rate, usually expressed in FPS, frames per second) is not constant but changes over time. A card may deliver 30 FPS in a demanding scene and 60 FPS when you look at an empty sky.

Disabling sync leads to tearing

It turns out that a variable GPU frame rate and the fixed refresh rate of an LCD panel do not work well together. In this configuration we run into a graphical artifact called tearing. It appears when parts of two or more frames are displayed during the same monitor refresh cycle. The frames are usually offset from one another, which looks very unpleasant during movement.

The image above shows two well-known artifacts that are common but hard to capture. Because these are display artifacts, they do not appear in regular game screenshots; our shots, however, show what you actually see while playing. To capture them, you need a camera with a high-speed shooting mode. Alternatively, if you have a card that supports video capture, you can record an uncompressed video stream from the DVI port and clearly see the transition from one frame to the next; this is the method we use for our FCAT tests. Still, the effect is best observed with your own eyes.

Tearing is visible in both images. The top one was taken with a camera, the bottom one with a video capture card. The bottom picture is "cut" horizontally and looks displaced. In the top two images, the left shot is from a Sharp 60 Hz display and the right one from an Asus 120 Hz display. The tear on the 120 Hz display is less pronounced because the refresh rate is twice as high, but it is still visible and looks the same as in the left image. This type of artifact is a clear sign that the images were taken with V-sync turned off.
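Why the tear moves from frame to frame comes down to simple timing arithmetic. The Python sketch below is a simplified model of our own, not a description of any real panel's timing: it assumes the panel scans top to bottom at a constant speed over the whole refresh period, ignores the vertical blanking interval, and uses a hypothetical helper name, `tear_row`. It computes the row at which a buffer swap made in the middle of a scan splits the picture.

```python
def tear_row(swap_time_ms: float, refresh_hz: float, visible_lines: int) -> int:
    """Row at which a buffer swap during scan-out splits the picture.

    Simplified model (an assumption for illustration): the panel scans top to
    bottom at a constant speed over the whole refresh period, the vertical
    blanking interval is ignored, and swap_time_ms is measured from the start
    of the first scan.
    """
    period_ms = 1000.0 / refresh_hz
    progress = (swap_time_ms % period_ms) / period_ms  # 0.0 = top, 1.0 = bottom
    return int(progress * visible_lines)


# A GPU finishing a frame every 20 ms (50 FPS) on a 60 Hz, 1080-line panel:
# each swap lands at a different point in the scan, so the tear line moves.
for frame in range(1, 4):
    print(f"swap {frame}: tear at row {tear_row(frame * 20.0, 60, 1080)}")
```

Because the 20 ms frame time is not a multiple of the 16.7 ms refresh period, every swap hits a different row, which is why the tear line wanders instead of staying in one place.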

Battlefield 4 on GeForce GTX 770 with V-sync disabled

The second effect seen in the BioShock: Infinite images is called ghosting. It is especially visible at the bottom of the left image and is caused by slow pixel response. In short, individual pixels do not change color quickly enough, which leaves this kind of glowing trail. A single still cannot convey how ghosting affects actual gameplay. A panel with an 8 ms gray-to-gray response time, like the Sharp, produces a blurry image whenever anything moves on screen. This is why such displays are generally not recommended for first-person shooters.

V-sync: trading one problem for another

Vertical sync, or V-sync, is a very old solution to the tearing problem. When it is enabled, the graphics card synchronizes its output with the screen's refresh cycle, which eliminates tearing completely. The problem is that if your card cannot keep the frame rate at or above 60 FPS (on a 60 Hz display), the effective frame rate jumps between integer fractions of the refresh rate (60, 30, 20, 15 FPS and so on), which in turn causes noticeable stutter.

You will experience stuttering when the frame rate drops below the refresh rate while V-sync is active

Moreover, because V-sync makes the graphics card wait, and sometimes relies on an additional hidden buffer, it can add input latency to the rendering chain. V-sync can therefore be both a blessing and a curse: it solves some problems while creating others. An informal survey of our staff found that gamers tend to leave V-sync off and turn it on only when tearing becomes unbearable.
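The frame-rate steps described above (60, 30, 20, 15 FPS) follow from simple arithmetic. The sketch below assumes double-buffered V-sync in which every frame takes the same time to render and the GPU waits for the next vertical refresh before starting the next frame; `vsync_fps` is a hypothetical helper, and real games, whose frame times vary, will not fall exactly on these steps.

```python
import math


def vsync_fps(render_fps: float, refresh_hz: float) -> float:
    """Effective frame rate with double-buffered V-sync (simplified model).

    Assumes every frame takes 1 / render_fps seconds to render and that the
    GPU stalls until the next vertical refresh, so each frame occupies a
    whole number of refresh intervals.
    """
    refreshes_per_frame = math.ceil(refresh_hz / render_fps)
    return refresh_hz / refreshes_per_frame


for fps in (75, 60, 50, 45, 30, 25):
    print(f"GPU could render {fps} FPS -> V-sync delivers {vsync_fps(fps, 60):g} FPS")
```

A card that could manage 50 FPS on its own is held to 30 FPS, because each frame just misses one refresh and must wait for the next. The same model gives 72 FPS for a 100 FPS card on a 144 Hz panel.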

Get creative: Nvidia unveils G-Sync

When it launched the GeForce GTX 680, Nvidia introduced a driver mode called Adaptive V-sync, which tries to mitigate V-sync's problems by enabling it when the frame rate is above the monitor's refresh rate and quickly disabling it when performance drops below the refresh rate. Although the technology did its job well, it was only a workaround: it did not prevent tearing when the frame rate fell below the monitor's refresh rate.
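The Adaptive V-sync policy can be summarized as a simple decision rule. This is a minimal sketch of the behavior described above, not Nvidia's driver logic; the `adaptive_vsync` function and `Outcome` type are hypothetical names, and the real driver switches based on measured, not steady, frame rates.

```python
from typing import NamedTuple


class Outcome(NamedTuple):
    vsync_on: bool
    shown_fps: float
    tearing_possible: bool


def adaptive_vsync(render_fps: float, refresh_hz: float) -> Outcome:
    """Simplified Adaptive V-sync rule for a steady frame rate."""
    if render_fps >= refresh_hz:
        # Fast enough: sync to the panel, cap at the refresh rate, no tearing.
        return Outcome(True, refresh_hz, False)
    # Too slow: drop sync instead of falling to refresh_hz / 2, at the cost of tearing.
    return Outcome(False, render_fps, True)


print(adaptive_vsync(90, 60))
print(adaptive_vsync(45, 60))
```

The second case is the weakness noted above: below the refresh rate the stutter goes away, but tearing comes back.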

The implementation of G-Sync is much more interesting. Put simply, Nvidia shows that instead of forcing graphics cards to run at a fixed display frequency, we can make new monitors run at a variable one.

GPU frame rate determines the refresh rate of the monitor, removing artifacts associated with enabling and disabling V-sync

DisplayPort's packet-based data transmission opened up new possibilities. By using variable blanking intervals in the DisplayPort video signal and replacing the monitor's scaler with a module that supports them, the LCD panel can run at a variable refresh rate that follows the frame rate the graphics card outputs (up to the monitor's maximum refresh rate). In effect, Nvidia made creative use of DisplayPort's capabilities and tried to kill two birds with one stone.
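The idea that the panel's refresh follows the GPU can be modeled in a few lines. In this sketch, which is our own simplification and not how the G-Sync module is actually implemented, each frame is scanned out as soon as the GPU finishes it, unless the panel is still inside its minimum refresh interval (set by its maximum refresh rate); `vrr_refresh_starts` is a hypothetical name.

```python
import math


def vrr_refresh_starts(frame_done_ms: list[float], max_hz: float) -> list[float]:
    """Refresh start times on a variable-refresh panel (simplified model).

    Assumption for illustration: each new frame is scanned out as soon as the
    GPU finishes it, unless the panel cannot refresh again yet because it is
    limited to max_hz; then scan-out starts the moment the minimum refresh
    interval has elapsed.
    """
    min_interval_ms = 1000.0 / max_hz
    starts: list[float] = []
    previous = -math.inf
    for done in frame_done_ms:
        start = max(done, previous + min_interval_ms)
        starts.append(start)
        previous = start
    return starts


# Irregular GPU output (in ms) on a 144 Hz panel: the refresh follows the GPU.
print(vrr_refresh_starts([0.0, 18.0, 41.0, 44.0, 70.0], max_hz=144))
```

Only the frame that arrives 3 ms after the previous one is delayed, and only until the panel can refresh again; every other frame appears the moment it is ready instead of waiting for a fixed clock.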

Before starting the tests, I want to give credit for this creative approach to a real problem affecting PC games. This is innovation at its finest. But how does G-Sync perform in practice? Let's find out.

Nvidia sent us an engineering sample of the Asus VG248QE monitor in which the scaler has been replaced by a G-Sync module. We already know this display: we covered it in 'Asus VG248QE Review: 24" 144Hz Gaming Monitor for $400', where it earned the Tom's Hardware Smart Buy award. Now it's time to see how Nvidia's new technology affects the hottest games.

G-Sync Technology Overview | 3D LightBoost, Onboard Memory, Standards & 4K

As we went through Nvidia's press materials, we had many questions about the technology's place today and its role in the future. During a recent trip to the company's headquarters in Santa Clara, our US colleagues got some answers.

G-Sync and 3D LightBoost

The first thing we noticed was that Nvidia sent an Asus VG248QE modified to support G-Sync. This monitor also supports Nvidia's 3D LightBoost technology, which was originally designed to increase brightness on 3D displays but has long been used unofficially in 2D mode, strobing the panel backlight to reduce ghosting (motion blur). Naturally, we wanted to know whether this technology could be used with G-Sync.

Nvidia said no. Although using both technologies at once would be ideal, strobing the backlight at a variable refresh rate currently causes flicker and brightness problems. These are very difficult to solve, because brightness has to be adjusted while tracking the pulses. As a result, you currently have to choose between the two technologies, although the company is looking for a way to use them together in the future.

Built-in memory of the G-Sync module

As we already know, G-Sync eliminates the additional input lag associated with V-sync, since there is no longer any need to wait for the panel scan to complete. However, we noticed that the G-Sync module has built-in memory. Can the module buffer frames on its own? If so, how long does it take a frame to pass through the new pipeline?

According to Nvidia, frames are not buffered in the module's memory. Incoming data is displayed on the screen as it arrives, and the memory serves other functions. G-Sync processing takes well under one millisecond. In practice, latency is almost the same as with V-sync off, and what remains depends on the game, the video driver, the mouse and so on.

Will G-Sync be standardized?

This question came up in a recent interview with AMD, when a reader wanted to know the company's reaction to G-Sync. However, we wanted to ask the developer directly whether Nvidia plans to turn the technology into an industry standard. In theory, the company could propose G-Sync as an extension of the DisplayPort standard for variable refresh rates. After all, Nvidia is a member of VESA.

However, no new specifications are planned for DisplayPort, HDMI or DVI. G-Sync already works over DisplayPort 1.2, so the standard does not need to change.

As noted, Nvidia is working on making G-Sync compatible with the technology currently called 3D LightBoost (it will soon get a different name). The company is also looking for ways to reduce the cost of G-Sync modules and make them more accessible.

G-Sync at Ultra HD resolutions

Nvidia promises G-Sync monitors with resolutions up to 3840x2160 pixels. However, the Asus model we are reviewing today supports only 1920x1080. At the moment, Ultra HD monitors use the STMicro Athena controller, which has two scalers to create a tiled display. We wondered whether the G-Sync module will support an MST configuration.

In truth, 4K displays with variable refresh will have to wait. There is no single-chip 4K scaler yet; the first is due in the first quarter of 2014, and monitors using it only in the second quarter. Since the G-Sync module replaces the scaler, compatible panels will start to appear after that. Fortunately, the module supports Ultra HD natively.

What happens below 30 Hz?

G-Sync can lower the screen refresh rate down to 30 Hz. This is because at very low refresh rates the image on an LCD screen begins to degrade, producing visual artifacts. If the source delivers fewer than 30 FPS, the module refreshes the panel on its own to avoid these problems. This means a single image may be displayed more than once, but the lower limit of 30 Hz ensures the best possible image quality.
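How often a frame might be repeated can be illustrated with a simple rule. The article only says that below 30 FPS the module refreshes the panel itself and may show an image more than once; the "repeat just enough times to stay at or above 30 Hz" rule below is our own hypothetical illustration, not Nvidia's documented algorithm, and `low_fps_scanouts` is a made-up name.

```python
import math


def low_fps_scanouts(render_fps: float, min_hz: float = 30.0) -> tuple[int, float]:
    """Return (scans per frame, resulting panel refresh rate in Hz).

    Hypothetical rule for illustration: when the GPU delivers fewer than
    min_hz frames per second, each frame is scanned out again just enough
    times to keep the panel at or above min_hz. At or above min_hz (and below
    the panel's maximum) the refresh simply follows the frame rate.
    """
    if render_fps >= min_hz:
        return 1, render_fps
    repeats = math.ceil(min_hz / render_fps)
    return repeats, render_fps * repeats


for fps in (45, 25, 20, 12):
    scans, hz = low_fps_scanouts(fps)
    print(f"{fps} FPS -> each frame shown {scans}x, panel at {hz:g} Hz")
```

Under this rule, a game running at 20 FPS would have each frame scanned twice, keeping the panel at 40 Hz while the image itself still changes only 20 times per second.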

G-Sync Technology Overview | 60Hz Panels, SLI, Surround and Availability

Is the technology only limited to panels with a high refresh rate?

You will notice that the first G-Sync monitor has a very high refresh rate (well above what the technology requires) and a resolution of 1920x1080 pixels. But the Asus display has its own limitations, such as its 6-bit TN panel. We wondered whether G-Sync is planned only for high-refresh displays, or whether we will also see it on more common 60 Hz monitors. We would also like to get 2560x1440 as soon as possible.

Nvidia reiterated that G-Sync delivers the best experience when your graphics card keeps the frame rate between 30 and 60 FPS. So conventional 60 Hz monitors fitted with a G-Sync module can genuinely benefit from the technology.

So why use a 144 Hz monitor at all? It seems that many monitor manufacturers have decided to implement a low-motion-blur feature (3D LightBoost), which requires a high refresh rate. But manufacturers that skip this feature (and why not, since it is not yet compatible with G-Sync) can build a G-Sync panel for much less money.

As for resolution, QHD screens with refresh rates above 120 Hz may start shipping as early as 2014.

Are there problems with SLI and G-Sync?

What does it take to see G-Sync in Surround mode?

These days, of course, you don't need two graphics cards to render at 1080p; even a mid-range Kepler-based card delivers the performance needed to play comfortably at this resolution. But there is also no way to run two cards in SLI with three G-Sync monitors in Surround mode.

This limitation is due to the display outputs on current Nvidia cards, which typically have two DVI ports, one HDMI and one DisplayPort. G-Sync requires DisplayPort 1.2, and adapters will not work (nor will an MST hub). The only option is to connect the three Surround monitors to three cards, one card per monitor. Naturally, we expect Nvidia's partners to start releasing "G-Sync Edition" cards with more DisplayPort connectors.

G-Sync and triple buffering

V-sync needed triple buffering for comfortable play. Does G-Sync need it? No. G-Sync does not require triple buffering, since the pipeline never stalls; on the contrary, triple buffering hurts G-Sync, because it adds an extra frame of latency without any performance gain. Unfortunately, many games enable triple buffering on their own, and it cannot always be overridden manually.

What about games that tend to react badly when V-sync is disabled?

Games such as Skyrim, which is part of our test suite, are designed to run with V-sync on a 60 Hz panel (which sometimes makes life difficult for us because of input lag). To test them, we have to modify certain .ini files. How does G-Sync behave with games built on the V-sync-sensitive Gamebryo and Creation engines? Are they limited to 60 FPS?

Second, you need a monitor with an Nvidia G-Sync module. This module replaces the display's scaler, so, for example, G-Sync cannot be added to a tiled Ultra HD display. For today's review we use a prototype with a resolution of 1920x1080 pixels and a refresh rate of up to 144 Hz. But even with it, you can get an idea of the impact G-Sync will have if manufacturers start fitting it to cheaper 60 Hz panels.

Third, you need a DisplayPort 1.2 cable; DVI and HDMI are not supported. In the short term, this means the only way to run G-Sync on three monitors in Surround mode is to connect them to a three-way SLI setup, since each card has only one DisplayPort connector and DVI-to-DisplayPort adapters do not work in this case. The same goes for MST hubs.

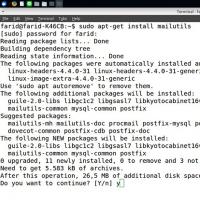

Finally, don't forget about driver support. The latest package, version 331.93 beta, already supports G-Sync, and we expect future WHQL-certified releases to support it as well.

Test bench

| Test bench configuration | |
|---|---|
| CPU | Intel Core i7-3970X (Sandy Bridge-E), 3.5 GHz base frequency, overclocked to 4.3 GHz, LGA 2011, 15 MB shared L3 cache, Hyper-Threading on, power-saving features on |
| Motherboard | MSI X79A-GD45 Plus (LGA 2011), X79 Express chipset, BIOS 17.5 |
| RAM | G.Skill 32 GB (8 x 4 GB) DDR3-2133, F3-17000CL9Q-16GBXM x2 @ 9-11-10-28 and 1.65 V |
| Storage | Samsung 840 Pro SSD 256 GB, SATA 6Gb/s |
| Graphics cards | Nvidia GeForce GTX 780 Ti 3 GB; Nvidia GeForce GTX 760 2 GB |
| Power supply | Corsair AX860i 860 W |
| System software and drivers | |
| OS | Windows 8 Professional 64-bit |
| DirectX | DirectX 11 |
| Graphics driver | Nvidia GeForce 331.93 Beta |

Now we need to determine where G-Sync has the greatest impact. Chances are you already use a 60 Hz monitor. 120 Hz and 144 Hz models are popular among gamers, but Nvidia rightly assumes that most enthusiasts on the market will stick with 60 Hz.

With V-sync enabled on a 60 Hz monitor, the most noticeable artifacts appear when the card cannot deliver 60 FPS, resulting in annoying jumps between 30 and 60 FPS and noticeable stutter. With V-sync off, tearing is most noticeable in scenes where you turn the camera often or where there is a lot of movement. Some players find this so distracting that they simply turn on V-sync and put up with the stutter and input lag.

At 120 Hz and 144 Hz, the display refreshes more often, which shortens the time a single frame is held across several scans when performance is poor. However, the problems of both V-sync on and V-sync off persist. For this reason, we test the Asus monitor at 60 and 144 Hz with G-Sync both on and off.
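The benefit of a faster refresh under V-sync can be put into numbers: a frame that just misses a refresh waits almost one full refresh period, which is about 16.7 ms at 60 Hz but only about 6.9 ms at 144 Hz. The sketch below assumes refreshes start at fixed, evenly spaced times beginning at zero; `vsync_wait_ms` is a hypothetical helper.

```python
import math


def vsync_wait_ms(frame_done_ms: float, refresh_hz: float) -> float:
    """Time a finished frame waits for the next refresh on a fixed-rate panel.

    Simplified model: refreshes start at 0, period, 2 * period, ... and a
    frame completed between two refreshes is held back until the next one.
    """
    period_ms = 1000.0 / refresh_hz
    next_refresh = math.ceil(frame_done_ms / period_ms) * period_ms
    return next_refresh - frame_done_ms


# Worst case: a frame that finishes just after a refresh has started.
for hz in (60, 120, 144):
    print(f"{hz} Hz: frame done 0.01 ms after a refresh waits {vsync_wait_ms(0.01, hz):.1f} ms")
```

Higher refresh rates shrink this penalty but do not remove it, which matches the observation that the V-sync problems persist at 144 Hz.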

G-Sync Technology Overview | Testing G-Sync with V-Sync Enabled

It's time to start testing G-Sync. All that remains is to install a video capture card and an array of SSDs and get on with the tests, right?

Wrong.

Today we are measuring quality, not performance. In our case, benchmarks can show only one thing: the frame rate at a given moment. They say nothing at all about image quality or the experience of playing with G-Sync on and off. You will therefore have to rely on our carefully checked and detailed descriptions, which we will try to keep as close to reality as possible.

Why not just record a video and let readers judge? Because the camera records at a fixed 60 Hz, and your monitor also plays video back at a constant 60 Hz. Since G-Sync relies on a variable refresh rate, you would not see the technology in action.

Given the number of games available, the number of possible test combinations is enormous: V-sync on, V-sync off, G-Sync on, G-Sync off, 60 Hz, 120 Hz, 144 Hz, and so on. But we'll start with a 60 Hz refresh rate and V-sync enabled.

Probably the easiest place to start is Nvidia's own demo utility, which swings a pendulum from side to side. The utility can simulate frame rates of 60, 50 or 40 FPS, or let the frame rate fluctuate between 40 and 60 FPS. You can then enable or disable V-sync and G-Sync. Although the test is synthetic, it demonstrates the technology's capabilities well. You can watch the scene at 50 FPS with V-sync enabled and think: "Everything is fine, and the visible stutter is tolerable." But after enabling G-Sync you immediately want to say: "What was I thinking? The difference is like night and day. How did I live with this before?"
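Why 50 FPS with V-sync on a 60 Hz panel looks uneven can be shown by computing how long each frame stays on screen. The sketch assumes a steady 50 FPS source that is never stalled (as in a demo simulating a fixed frame rate) and that each finished frame appears at the next refresh; `vsync_hold_times` is a hypothetical name, and Python's `fractions.Fraction` keeps the timing arithmetic exact.

```python
import math
from fractions import Fraction


def vsync_hold_times(render_fps: int, refresh_hz: int, frames: int) -> list[Fraction]:
    """On-screen duration (ms) of consecutive frames with V-sync on a fixed-rate panel.

    Simplified model of a steady-rate demo (assumes render_fps <= refresh_hz):
    frame i finishes at i * 1000 / render_fps ms, the GPU is never stalled, and
    each finished frame appears at the next refresh tick and stays on screen
    until the following frame appears.
    """
    period = Fraction(1000, refresh_hz)      # ms per refresh
    frame_time = Fraction(1000, render_fps)  # ms per rendered frame
    ticks = [math.ceil(i * frame_time / period) for i in range(1, frames + 2)]
    return [(later - earlier) * period for earlier, later in zip(ticks, ticks[1:])]


held = vsync_hold_times(50, 60, 10)
print("V-sync, 60 Hz:", [round(float(t), 1) for t in held])
print("Variable refresh: every frame held", 1000 / 50, "ms")
```

In this model every fifth frame stays on screen for two refreshes (33.3 ms) while the rest last one (16.7 ms); that irregular rhythm is the judder visible in the pendulum demo. With a variable refresh rate, each frame is simply shown for its own 20 ms.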

But let's not forget that this is a technical demo. We want evidence from real games, so we need a demanding title such as Arma III.

We installed Arma III on a test machine with a GeForce GTX 770 and selected ultra settings. With V-sync disabled, the frame rate fluctuates between 40 and 50 FPS. With V-sync enabled, it drops to 30 FPS. Performance is not high enough to produce constant swings between 30 and 60 FPS; instead, the card's frame rate is simply cut.

Since there was no stutter, enabling G-Sync makes no significant visible difference, except that the actual frame rate rises by 10 to 20 FPS. Input lag should also drop, since the same frame is no longer held over several monitor scans. We find Arma less twitchy than many other games, so the lag is not really felt.

Metro: Last Light, on the other hand, benefits from G-Sync much more noticeably. With a GeForce GTX 770, the game runs at 1920x1080 with very high detail settings, including 16x AF, normal tessellation and motion blur. From there, you can step SSAA from 1x to 2x to 3x to gradually lower the frame rate.

In addition, the game's environment includes a hallway where it is easy to strafe back and forth. After starting the level with V-sync enabled at 60 Hz, we went out into the city. Fraps showed 30 FPS with 3x SSAA and 60 FPS with anti-aliasing turned off. In the first case, stutter and lag are noticeable. With SSAA disabled, the picture is completely smooth at 60 FPS. However, 2x SSAA causes fluctuations between 60 and 30 FPS, and every duplicated frame is jarring. This is one of the games in which we would definitely turn V-sync off and simply ignore the tearing; many people already do this out of habit.

But G-Sync eliminates all of these negative effects. You no longer have to watch the Fraps counter for dips below 60 FPS so that you can lower yet another graphics setting. On the contrary, you can raise some of them, because even if the frame rate drops to 50 or 40 FPS, there is no obvious stutter. What if you turn off V-sync? More on that later.

G-Sync Technology Overview | Testing G-Sync with V-Sync Disabled

The conclusions in this article are based on a Skype survey of Tom's Hardware writers and friends (in other words, a small sample), but almost all of them understand what V-sync is and what drawbacks users have to put up with. They resort to V-sync only when tearing becomes unbearable because of large mismatches between the frame rate and the monitor's refresh rate.

As you can imagine, the visual effect of disabling V-sync is hard to miss, although it depends heavily on the game and its detail settings.

Take Crysis 3, for example. At its highest settings, the game can easily bring your graphics subsystem to its knees. And since Crysis 3 is a fast-paced first-person shooter, tearing can be very noticeable. In the example above, the FCAT output was captured between two frames. As you can see, the tree is cut clean through.

On the other hand, when we force V-sync off in Skyrim, the tearing is not that severe. Note that in this case the frame rate is very high, and several frames appear on screen during each scan. In this kind of footage, the amount of movement per frame is relatively low. Playing Skyrim in this configuration has its problems, and it may not be optimal, but it does show that even with V-sync disabled, the experience varies from game to game.

For the third example, we chose a shot of Lara Croft's shoulder from the Tomb Raider game, which shows a fairly clear image tear (also look at the hair and the strap of the shirt). Tomb Raider is the only game in our selection that allows you to choose between double and triple buffering when vertical sync is activated.

The last graph shows that Metro: Last Light with G-Sync at 144 Hz delivers roughly the same performance as with V-sync disabled. What the chart cannot show is the absence of tearing. If you use the technology with a 60 Hz screen, the frame rate is capped at 60 FPS, but there is no stutter or lag.

In any case, those of you (and us) who have spent countless hours running graphics benchmarks, watching the same test over and over, have learned to judge by eye how good a particular result is. That is how we gauge the absolute performance of graphics cards. The change in the picture with G-Sync enabled is immediately striking: you get the smoothness of V-sync on without the tearing of V-sync off. It's a shame that we cannot show the difference on video.

G-Sync Technology Overview | Game compatibility: almost perfect

Checking other games

We tested a few more games: Crysis 3, Tomb Raider, Skyrim, BioShock: Infinite and Battlefield 4. All but Skyrim benefited from G-Sync. How much depended on the particular game. But once you have seen it, you will immediately admit that you had been ignoring flaws that were there all along.

Artifacts can still appear. For example, the crawling effect associated with aliasing is more noticeable when motion is smooth. You will probably want to set anti-aliasing as high as possible to remove unpleasant jaggies that were less noticeable before.

Skyrim: special case

Skyrim's Creation graphics engine activates V-sync by default. To test the game at frame rates above 60 FPS, add the line iPresentInterval = 0 to one of the game's .ini files.
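If you prefer to script this change, here is a minimal line-based sketch. The helper `set_ini_value` is hypothetical, and the file name Skyrim.ini and the `[Display]` section are our assumptions about where the setting belongs; the text above only says "one of the game's .ini files", so check your installation and back the file up first. The function rewrites an existing `iPresentInterval` entry or adds one, leaving all other lines untouched.

```python
from pathlib import Path


def set_ini_value(path: str, section: str, key: str, value: str) -> None:
    """Set key=value inside [section] of a simple .ini file.

    Works line by line on purpose, so comments, ordering and key case in the
    rest of the file are preserved. Appends the section if it is missing.
    """
    lines = Path(path).read_text().splitlines()
    target = f"[{section}]".lower()
    start = next((i for i, l in enumerate(lines) if l.strip().lower() == target), None)
    if start is None:
        # Section missing: append it together with the key.
        lines += [f"[{section}]", f"{key}={value}"]
    else:
        # The section ends at the next header line or at the end of the file.
        end = next((i for i in range(start + 1, len(lines))
                    if lines[i].strip().startswith("[")), len(lines))
        for i in range(start + 1, end):
            name, sep, _ = lines[i].partition("=")
            if sep and name.strip().lower() == key.lower():
                lines[i] = f"{key}={value}"  # overwrite the existing entry
                break
        else:
            lines.insert(end, f"{key}={value}")  # key not found: add it to the section
    Path(path).write_text("\n".join(lines) + "\n")


# Assumed file and section, adjust to your installation:
# set_ini_value("Skyrim.ini", "Display", "iPresentInterval", "0")
```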

Skyrim can therefore be tested in three ways: in its default state, letting the Nvidia driver "use the application setting"; with G-Sync enabled in the driver and Skyrim's settings left intact; and with G-Sync enabled and V-sync disabled in the game's .ini file.

In the first configuration, with the prototype monitor set to 60 Hz, a GeForce GTX 770 delivered a steady 60 FPS at ultra settings, so the picture was smooth and pleasant. However, input still suffers from latency, and strafing from side to side revealed noticeable motion blur. Still, this is how most people play on PC. You can of course buy a 144 Hz screen, and it really does eliminate the blur. But since the GeForce GTX 770 delivers about 90 to 100 frames per second, noticeable stutter appears as the frame rate fluctuates between 144 and 72 FPS.

At 60 Hz, G-Sync has a negative effect on the picture, probably because V-sync is still active, whereas the technology is meant to work with V-sync disabled. Now side-to-side strafing (especially close to walls) causes pronounced stutter. This is a potential problem for 60 Hz panels with G-Sync, at least in games like Skyrim. Fortunately, the Asus VG248QE can switch to 144 Hz mode, and despite V-sync being active, G-Sync works flawlessly at that refresh rate.

Disabling V-sync completely in Skyrim makes mouse control smoother. However, tearing appears in the image (not to mention other artifacts such as shimmering water). Turning on G-Sync leaves stutter at 60 Hz, but at 144 Hz the situation improves significantly. Although we test the game with V-sync disabled in our graphics card reviews, we would not recommend playing without it.

For Skyrim, perhaps the best solution is to disable G-Sync and play at 60 Hz, which gives a constant 60 frames per second at your chosen graphics settings.

G-Sync Technology Overview | Is G-Sync what you've been waiting for?

Even before we received our Asus test sample with G-Sync, we were pleased that Nvidia is working on a very real problem affecting games, one for which no solution had yet been proposed. Until now, you could only choose whether to turn V-sync on or off, and either decision came with compromises that hurt the gaming experience. If you leave V-sync off until tearing becomes unbearable, you are choosing the lesser of two evils.

G-Sync solves the problem by letting the monitor scan the screen at a variable frequency. Innovation like this is the only way to keep advancing our industry while preserving the technical advantage of PCs over consoles and other gaming platforms. Nvidia will no doubt face criticism for not developing a standard that competitors can adopt. However, the company builds its solution on DisplayPort 1.2. As a result, just two months after G-Sync was announced, we had it in our hands.

The question is: does G-Sync deliver everything Nvidia promised?

Three talented developers extolling the qualities of a technology you have never seen in action can inspire anyone. But if your first experience with G-Sync is Nvidia's pendulum demo, you are bound to wonder whether such a huge difference is really possible, or whether the test represents a special scenario that is too good to be true.

Naturally, in real games the effect is less clear-cut. On the one hand, there were exclamations of "Wow!" and "That's crazy!"; on the other, "I think I can see a difference." The impact of G-Sync is most noticeable when you switch the display's refresh rate from 60 Hz to 144 Hz. But we also ran the 60 Hz tests with G-Sync to see what you will (hopefully) get with cheaper displays in the future. In some cases, simply going from 60 Hz to 144 Hz will blow you away, especially if your graphics card can sustain high frame rates.

We now know that Asus plans to add G-Sync support to the Asus VG248QE, which the company says will sell for $400 next year. The monitor has a native resolution of 1920x1080 pixels and a refresh rate of 144 Hz. The version without G-Sync has already won our Smart Buy award for outstanding performance. Personally, though, we consider the 6-bit TN panel a drawback. We would like to see 2560x1440 on an IPS panel, and we would even settle for a 60 Hz refresh rate if that helps keep the price down.

Although we expect a whole raft of announcements at CES, we have not yet heard official comments from Nvidia about other displays with G-Sync modules or their prices. Nor are we sure about the company's plans for the upgrade module, which is supposed to let you install G-Sync in an already purchased Asus VG248QE in 20 minutes.

We can now say that it is worth the wait. In some games the impact of the new technology is unmistakable, while in others it is less pronounced. Either way, G-Sync answers the age-old question of whether or not to enable V-sync.

There is one more interesting thought. Now that we have tested G-Sync, how much longer can AMD avoid commenting? The company teased our readers in its interview, saying it would soon decide on this capability. Does it have anything planned? The end of 2013 and the beginning of 2014 promise plenty of interesting news to discuss, including the Mantle version of Battlefield 4, Nvidia's upcoming Maxwell architecture, G-Sync, AMD's xDMA engine with CrossFire support, and rumors of new dual-GPU graphics cards. Now all we need are cards with more than 3 GB (Nvidia) and 4 GB (AMD) of GDDR5 memory that cost less than $1000...

Some things are not just difficult to write about but extremely difficult; it is better to see them once than to hear about them a hundred times or read about them on the Internet. For example, it is impossible to describe some natural wonders, such as the majestic Grand Canyon or the snow-capped Altai Mountains. You can look at beautiful pictures of them a hundred times and admire videos, but none of this can replace seeing them in person.

Smooth frame delivery with Nvidia G-Sync is one such topic. In written descriptions the changes do not seem that significant, but within the first minutes of playing a 3D game on a system with an Nvidia GeForce card connected to a G-Sync monitor, it becomes clear how big a qualitative leap it is. And although more than a year has passed since the technology was announced, it remains relevant, still has no competitors among solutions that have reached the market, and compatible monitors continue to be produced.

For quite some time, Nvidia has been working to improve the experience of GeForce users in modern games by making rendering smoother. Recall Adaptive V-Sync, a hybrid of the V-Sync On and V-Sync Off modes: when the GPU renders at a frame rate below the monitor's refresh rate, synchronization is disabled, and when the frame rate exceeds the refresh rate, it is enabled.

Adaptive sync did not solve every smoothness problem, but it was still an important step in the right direction. But why were special synchronization modes, and even dedicated software and hardware solutions, needed at all? What is wrong with technologies that have been around for decades? Today we'll show how Nvidia's G-Sync technology helps eliminate all the familiar display artifacts, such as tearing, uneven frame delivery and increased latency.

Jumping ahead, we can say that G-Sync lets you get smooth frame output with the highest possible performance and comfort, which is very noticeable when playing on such a monitor. Even an ordinary home user will notice it, and for avid gamers it can mean better reaction times and, with them, better results in games.

Today, most PC gamers use 60 Hz monitors, the most popular type of LCD screen. Accordingly, with V-Sync both on and off there are always drawbacks rooted in the basic problems of old technologies, which we will discuss below: high latency and FPS jerks with V-Sync on, and unpleasant image tearing with it off.

Since latency and irregular frame rates disrupt gameplay more and are more annoying, few players turn synchronization on at all. Even the monitors with 120 and 144 Hz refresh rates that have appeared on the market cannot eliminate the problems completely; they merely make them somewhat less noticeable by updating the screen at least twice as often, but the same artifacts remain: lag and the lack of comfortable smoothness.

Since G-Sync monitors paired with a suitable Nvidia Geforce graphics card not only provide a high refresh rate but also eliminate all these drawbacks, buying such a setup may be even more important than upgrading to a more powerful GPU. But first, let's figure out why anything different from the long-established solutions was needed at all: what exactly is the problem?

Problems of Existing Video Output Methods

Technologies for displaying images on a screen with a fixed refresh rate date back to the days of cathode ray tube (CRT) monitors. Most readers will remember them: bulky, like old televisions. These technologies were originally developed for displaying television images at a fixed frame rate, but for 3D images computed dynamically on a PC this approach causes serious problems that have not been solved to this day.

Even the most modern LCD monitors refresh the screen at a fixed rate, although technologically nothing prevents them from changing the picture at any moment and at any frequency (within reasonable limits, of course). Yet ever since the CRT era, PC gamers have had to put up with a clearly imperfect solution to the problem of synchronizing the 3D rendering frame rate with the monitor's refresh rate. Until now there have been very few options for displaying an image: just two, and both have drawbacks.

The root of all the problems is that while the monitor refreshes the image at a fixed rate, the graphics card takes a different amount of time to render each frame, because the scene complexity and the GPU load are constantly changing. The render time is not constant; it changes from frame to frame. It is not surprising that synchronization problems arise when a sequence of frames is displayed on the monitor, since some frames take much longer to render than others: one might take 10 ms, the next 25 ms, for example. And monitors that existed before G-Sync could display a frame only at fixed intervals, no earlier and no later.

The matter is further complicated by the wide variety of hardware and software configurations of gaming PCs, combined with a load that varies greatly depending on the game, quality settings, video driver settings and so on. As a result, it is impossible to tune every system so that frames are prepared in constant, or at least not too different, times in all 3D applications and conditions, as is possible on game consoles with their single hardware configuration.

Naturally, unlike console players with their predictable frame render times, PC players remain seriously limited in their ability to get smooth gameplay without noticeable FPS drops and lags. In the ideal (that is, unattainable in practice) case, the image on the monitor would be updated strictly after the GPU has finished calculating and preparing the next frame:

In this hypothetical example, the GPU always manages to draw a frame before it has to be sent to the monitor: the frame time is always slightly shorter than the interval between display refreshes, and the GPU idles briefly in between. In reality, however, things are completely different, because frame render times vary widely. Imagine that the GPU fails to render a frame in the allotted time. Then the frame must either be displayed later, skipping one monitor refresh (vertical synchronization enabled, V-Sync On), or be displayed in parts with synchronization disabled, so that pieces of several adjacent frames are on the monitor at the same time.

Most users turn V-Sync off to get lower latency and faster frame output, but this introduces visible tearing artifacts. With synchronization enabled, there is no tearing because frames are displayed only in full, but the delay between the player's action and the screen update increases, and the frame output rate becomes very uneven, since the GPU never draws frames in strict step with the monitor's refresh times.

This problem has existed for many years and clearly spoils the experience of viewing 3D rendering, but until recently no one bothered to solve it. The solution is, in theory, quite simple: display information on the screen strictly when the GPU finishes working on the next frame. But first, let's take a closer look at how the existing display methods work and what solution Nvidia offers with G-Sync.

Disadvantages of output when sync is disabled

As we already mentioned, the vast majority of players prefer to keep synchronization off (V-Sync Off) so that frames rendered by the GPU appear on the monitor as quickly as possible, with minimal delay between the player's action (keystrokes, mouse commands) and its display. For serious players this is necessary to win, and for ordinary players it simply feels better. This is how output with V-Sync disabled looks schematically:

Frames are output without waiting or extra delay. But while disabling V-Sync solves the latency problem as far as possible, it introduces image artifacts: tearing, where the image on the screen consists of several pieces of adjacent frames rendered by the GPU. The footage also lacks smoothness because frames arrive from the GPU at uneven intervals, so the tears appear in different places.

Tearing results from displaying a picture composed of two or more frames rendered by the GPU during a single monitor refresh cycle: several frames when the frame rate exceeds the monitor's refresh rate, and two when it roughly matches it. Look at the diagram above: if the frame buffer contents are updated partway between the moments when the monitor displays information, the final picture will be distorted, with part of it belonging to the previous frame and the rest to the frame currently being rendered.

With synchronization disabled, frames are sent to the monitor without regard to its refresh rate or timing, so they practically never coincide with the monitor's refresh cycle. In other words, with V-Sync disabled, monitors without G-Sync support will always show such tearing.

The problem is not only that the player sees unpleasant stripes twitching across the entire screen, but also that showing parts of different frames at the same time can mislead the brain. This is especially noticeable with moving objects: the player sees parts of an object shifted relative to each other. You have to put up with this only because disabling V-Sync currently provides the lowest output latency, at the cost of far-from-ideal image quality in motion, as you can see from the following examples:

In the examples above, captured with the FCAT hardware and software system, you can see that the actual image on the screen can be composed of pieces of several adjacent frames, sometimes unevenly: a narrow strip is taken from one frame, while the neighboring frames occupy the remaining (noticeably larger) part of the screen.

Tearing is even more noticeable in motion (if your system and/or browser does not support playing MP4/H.264 video at 1920 × 1080 pixels and 60 FPS, you will have to download the videos and play them locally in a media player with the appropriate capabilities):

Even in motion, unpleasant tearing artifacts are easy to notice. Let's look at how this happens schematically, in the diagram of the output method with synchronization disabled. Here, frames are sent to the monitor immediately after the GPU finishes rendering them, and the new image is displayed even if output of the current frame is not yet complete; the remaining part of the buffer falls on the next screen refresh. That is why each frame displayed on the monitor in our example consists of two frames rendered on the GPU, with a tear in the image at the place marked in red.

In this example, the GPU draws the first frame (Draw 1) into the screen buffer in less than the 16.7 ms refresh interval, before the image is sent to the monitor (Scan 0/1). The GPU immediately starts working on the next frame (Draw 2), which tears the picture on the monitor: the screen still contains half of the previous frame.
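Where the tear line lands can be estimated with a simple model. The sketch below assumes the panel scans rows top to bottom at a constant rate over the whole refresh period and ignores the vertical blanking interval; the function `tear_row` is hypothetical, and the 1440 rows come from the 2560 × 1440 panel used later in this article.

```python
from typing import Optional


def tear_row(swap_ms: float, refresh_hz: float = 60.0, rows: int = 1440) -> Optional[int]:
    """Estimate the screen row where a V-Sync Off buffer swap causes a tear.

    swap_ms is the time of the swap measured from the start of the current
    scan. Rows above the returned index still show the old frame; rows from
    it downward show the new one. Simplified model: constant scan speed,
    no blanking interval.
    """
    period_ms = 1000.0 / refresh_hz
    if not 0.0 < swap_ms < period_ms:
        return None  # swap happens between scans: no tear in this refresh
    return int(swap_ms / period_ms * rows)


print(tear_row(1000.0 / 120))  # swap halfway through a 60 Hz scan -> 720
print(tear_row(20.0))          # after the 16.7 ms scan has finished -> None
```

Because the GPU finishes each frame at a different moment, the swap time, and therefore the tear row, changes from refresh to refresh, which is why the tear line jumps around the screen.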

As a result, a clearly visible line often appears in the image: the boundary between partially displayed adjacent frames. The process then repeats, because the GPU spends a different amount of time on each frame, and without synchronization the frames from the GPU and those displayed on the monitor never line up.

Pros and cons of vertical sync

When you turn on traditional vertical sync (V-Sync On), the image on the monitor is updated only when the GPU has completely finished a frame, which eliminates tearing because frames are displayed only as a whole. But since the monitor updates its content only at fixed intervals (depending on the characteristics of the display), this binding brings other problems.

Most modern LCD monitors refresh at 60 Hz, that is, 60 times per second, or approximately every 16.7 milliseconds. With synchronization enabled, the image output time is rigidly tied to the monitor's refresh rate. But as we know, the GPU's rendering rate always varies, and the render time of each frame depends on the ever-changing complexity of the 3D scene and on the quality settings.
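The refresh interval is simply the reciprocal of the refresh rate. A minimal sketch of the arithmetic for the refresh rates mentioned in this article (the helper name `refresh_period_ms` is ours):

```python
def refresh_period_ms(refresh_hz: float) -> float:
    """Time between two screen refreshes, in milliseconds."""
    return 1000.0 / refresh_hz


for hz in (60, 120, 144):
    print(f"{hz} Hz -> {refresh_period_ms(hz):.1f} ms")
# 60 Hz -> 16.7 ms, 120 Hz -> 8.3 ms, 144 Hz -> 6.9 ms
```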

The render time cannot always equal 16.7 ms; it will be either shorter or longer. With synchronization enabled, the GPU again finishes frames either earlier or later than the screen refresh. If a frame is drawn before the refresh, there is no particular problem: the finished frame simply waits for the refresh to be displayed in full, and the GPU idles. But if the frame is not rendered in the allotted time, it has to wait for the next refresh cycle, which increases the delay between the player's actions and their display on the screen. Meanwhile, the previous, "old" frame is shown on the screen again.

Although all this happens quite quickly, the added latency is easy to notice, and not only by professional players. And since frame render time always varies, tying output to the monitor's refresh rate causes jerks in motion, because frames are displayed either at the full rate (equal to the monitor's refresh rate) or two, three or four times slower. Let's look at a schematic example:

The illustration shows how frames are displayed on the monitor with V-Sync On. The GPU draws the first frame (Draw 1) in less than 16.7 ms, but instead of starting on the next frame and tearing the image, as with V-Sync Off, it waits for the first frame to be fully displayed on the monitor. Only after that does it start drawing the next frame (Draw 2).

But the second frame (Draw 2) takes longer than 16.7 ms, so when that interval expires, the previous frame is displayed on the screen again, for another 16.7 ms. Even after the GPU finishes the second frame, it is not displayed immediately, because the monitor has a fixed refresh rate. In total, you have to wait 33.3 ms for the second frame to be displayed, and all of this time is added to the delay between the player's action and the frame appearing on the monitor.
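The V-Sync On timeline from this diagram can be reproduced with a short simulation. It is a simplified model under stated assumptions: double buffering, the GPU starts the next frame only after the previous one has been put on screen (as in the diagram), and a finished frame appears at the next fixed refresh tick. `vsync_on_display_times` is a hypothetical helper, and the render times of 12 ms and 20 ms are illustrative values chosen to be shorter and longer than 16.7 ms.

```python
import math
from typing import List


def vsync_on_display_times(render_ms: List[float], refresh_hz: float = 60.0) -> List[float]:
    """Times (ms from start) at which each frame appears on screen with V-Sync On."""
    period = 1000.0 / refresh_hz
    start = 0.0
    shown = []
    for r in render_ms:
        done = start + r                             # GPU finishes the frame
        display = math.ceil(done / period) * period  # wait for the next refresh tick
        shown.append(display)
        start = display                              # GPU idles until the swap
    return shown


times = vsync_on_display_times([12.0, 20.0])  # Draw 1 < 16.7 ms, Draw 2 > 16.7 ms
print([round(t, 1) for t in times])            # [16.7, 50.0]
print(round(times[1] - times[0], 1))           # 33.3 ms until Draw 2 is shown
```

A 20 ms frame misses the 16.7 ms deadline by only a few milliseconds, yet it reaches the screen a full 33.3 ms after the previous one.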

In addition to the latency problem, there is also a loss of smoothness, visible as jerky 3D animation. The problem is shown very clearly in a short video:

But even the most powerful GPUs cannot always deliver a frame rate in demanding modern games that exceeds the typical 60 Hz monitor refresh rate. Accordingly, they cannot provide comfortable gameplay with synchronization enabled and without problems like tearing. This is especially true of games such as the multiplayer Battlefield 4 and the highly demanding Far Cry 4 and Assassin's Creed Unity at high resolutions and maximum settings.

So the modern player has little choice: either accept a lack of smoothness and increased latency, or settle for imperfect image quality with torn pieces of frames. Of course, in reality things are not so bad; after all, we have somehow been playing all this time, right? But at a time when everyone strives for the ideal in both quality and comfort, you want more. Moreover, LCD displays are fundamentally capable of displaying a frame whenever the graphics processor signals it. All that remains is to link the GPU and the monitor, and such a solution already exists: Nvidia G-Sync technology.

G-Sync Technology: Nvidia's Solution to the Problems

So, in most modern games, disabling synchronization causes tearing, while enabling it causes irregular frame changes and increased latency. Even at high refresh rates, traditional monitors do not solve these problems. Presumably, years of choosing between two far-from-ideal ways of outputting frames in 3D applications bothered Nvidia's engineers so much that they decided to get rid of the problems by giving players a fundamentally new approach to updating the display.

The difference between G-Sync and the existing display methods is that in Nvidia's approach the timing and rate of frame output are determined by the Geforce GPU and change dynamically rather than being fixed, as before. In other words, the GPU takes full control over frame output: as soon as it finishes the next frame, that frame is displayed on the monitor, without delays or tearing.

This kind of link between the GPU and specially adapted monitor hardware gives players the best output method, one that is simply ideal in terms of quality and free of all the problems described above. G-Sync provides perfectly smooth frame changes on the monitor, without the delays, jerks and artifacts caused by the way visual information is displayed on the screen.

Naturally, G-Sync does not work by magic: on the monitor side, the technology requires additional hardware logic in the form of a small board supplied by Nvidia.

The company works with monitor manufacturers to include G-Sync boards in their gaming display models. For some models there is even a do-it-yourself upgrade option, but it is more expensive and makes little sense, since it is easier to buy a G-Sync monitor right away. On the PC side, all you need is any modern Nvidia Geforce graphics card and a G-Sync-capable video driver; any recent version will do.

When Nvidia G-Sync is enabled, after finishing the next frame of a 3D scene, the Geforce GPU sends a special signal to the G-Sync controller board built into the monitor, telling the monitor when to update the image on the screen. This delivers truly excellent smoothness and responsiveness when playing on a PC, as you can see in a short video (be sure to watch it at 60 frames per second!):

Let's see how a configuration with G-Sync enabled works, according to our diagram:

As you can see, everything is very simple. Enabling G-Sync ties the monitor's refresh to the moment each frame finishes rendering on the GPU. The GPU fully controls the process: as soon as it finishes rendering a frame, the image is immediately displayed on a G-Sync-compatible monitor, so the display refresh rate is not fixed but variable, exactly like the GPU frame rate. This eliminates tearing (the image always contains information from a single frame), minimizes frame rate jerks (the monitor waits no longer than the frame physically takes to render on the GPU) and reduces output latency compared to the V-Sync On method.
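The same kind of toy model can be written for G-Sync: the panel refreshes as soon as a frame is finished, limited only by the shortest interval the panel supports (1000/144 ms at 144 Hz). This is a simplified sketch, not a description of the G-Sync module's internals; `gsync_display_times` is hypothetical, and the GPU is assumed to start the next frame right after the previous one is displayed.

```python
from typing import List, Optional


def gsync_display_times(render_ms: List[float], max_hz: float = 144.0) -> List[float]:
    """Times (ms from start) at which each frame appears with a variable refresh rate."""
    min_interval = 1000.0 / max_hz  # the panel cannot refresh faster than max_hz
    start = 0.0
    last_display: Optional[float] = None
    shown = []
    for r in render_ms:
        done = start + r
        if last_display is None:
            display = done  # first frame: refresh as soon as it is ready
        else:
            display = max(done, last_display + min_interval)
        shown.append(display)
        last_display = display
        start = display
    return shown


print(gsync_display_times([12.0, 20.0]))  # [12.0, 32.0]: shown the moment each frame is done
```

In this model each frame reaches the screen the moment it is finished, so the gap between displayed frames equals the render time rather than a whole multiple of the 16.7 ms refresh period.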

It must be said that players had clearly been missing such a solution. The new method of synchronizing the GPU with the monitor, Nvidia G-Sync, really has a very strong effect on gaming comfort on a PC: the near-perfect smoothness that was missing until now finally appears, even in our era of super-powerful graphics cards! Since G-Sync was announced, the old methods have instantly become an anachronism, and upgrading to a G-Sync monitor with a variable refresh rate of up to 144 Hz looks like a very attractive option that finally gets rid of these problems, lags and artifacts.

Does G-Sync have any drawbacks? Of course, like any technology. For example, G-Sync has an unpleasant limitation: it provides smooth frame output only from 30 FPS upward. The refresh rate selected for the monitor in G-Sync mode sets the upper limit for screen updates. That is, with the refresh rate set to 60 Hz, maximum smoothness is provided at 30-60 FPS, and at 144 Hz at 30-144 FPS, but not below the lower limit. With a frame rate that varies across that limit (for example, from 20 to 40 FPS), the result will no longer be ideal, although it is still noticeably better than traditional V-Sync.
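The range just described can be expressed as a small helper that returns the refresh rate the panel would follow for a given frame rate. This is our own simplified model based only on the limits stated in the text (30 FPS at the bottom, the selected refresh rate at the top); `gsync_refresh_hz` is hypothetical, and behavior below 30 FPS is deliberately not modeled, so the function returns `None` there.

```python
from typing import Optional


def gsync_refresh_hz(fps: float, max_hz: float = 144.0, min_fps: float = 30.0) -> Optional[float]:
    """Refresh rate a G-Sync panel would follow, per the limits in the text.

    Inside [min_fps, max_hz] the refresh rate simply tracks the frame rate.
    Above max_hz it is capped at the selected refresh rate. Below min_fps
    output is no longer ideally smooth; this sketch returns None there.
    """
    if fps < min_fps:
        return None
    return min(fps, max_hz)


print(gsync_refresh_hz(45))             # 45: tracks the GPU
print(gsync_refresh_hz(90, max_hz=60))  # 60: capped by the 60 Hz setting
print(gsync_refresh_hz(20))             # None: below the 30 FPS limit
```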

But the biggest drawback of G-Sync is that it is Nvidia's proprietary technology, which competitors cannot use. So at the beginning of this year AMD announced a similar technology, FreeSync, which likewise changes the monitor's refresh rate dynamically to match the delivery of frames from the GPU. An important difference is that AMD's development is open and does not require additional proprietary hardware in monitors, since FreeSync has been turned into Adaptive-Sync, an optional part of the DisplayPort 1.2a standard from the well-known VESA (Video Electronics Standards Association). It turns out that AMD will skillfully use a topic developed by its competitor for its own benefit, since in our opinion, without the appearance and popularization of G-Sync, there would be no FreeSync.

Interestingly, Adaptive-Sync is also part of the VESA embedded DisplayPort (eDP) standard and is already used in many display components that rely on eDP for signal transmission. Another difference from G-Sync is that VESA members can use Adaptive-Sync without paying anything. However, it is quite likely that Nvidia will also support Adaptive-Sync in the future as part of the DisplayPort 1.2a standard, since such support would not require much effort from them. But the company will not abandon G-Sync either, as it considers its own solutions a priority.

The first monitors with Adaptive-Sync support should appear in the first quarter of 2015. They will have not only DisplayPort 1.2a ports but also dedicated Adaptive-Sync support (not every DisplayPort 1.2a monitor will have it). For example, in March 2015 Samsung plans to launch its UD590 (23.6 and 28 inches) and UE850 (23.6, 27 and 31.5 inches) monitor lines with UltraHD resolution and Adaptive-Sync support. AMD claims that monitors supporting this technology will be up to $100 cheaper than similar G-Sync devices, but they are difficult to compare, since all monitors are different and are released at different times. Besides, fairly inexpensive G-Sync models are already on the market.

Visual difference and subjective impressions

Above we described the theory; now it's time to show everything clearly and describe our impressions. We tested Nvidia G-Sync in practice in several 3D applications using an Inno3D iChill Geforce GTX 780 HerculeZ X3 Ultra graphics card and an Asus PG278Q monitor with G-Sync. Several G-Sync monitor models from different manufacturers are on the market (Asus, Acer, BenQ, AOC and others), and for the Asus VG248QE you can even buy a kit to add G-Sync support yourself.

The lowest-end graphics card that supports G-Sync is the Geforce GTX 650 Ti, and a DisplayPort connector on the card is an essential requirement. Other system requirements include at least Microsoft Windows 7 and a good DisplayPort 1.2 cable; a quality mouse with high sensitivity and a high polling rate is also recommended. G-Sync works with all full-screen 3D applications that use the OpenGL and Direct3D graphics APIs when running on Windows 7 and 8.1.

Any recent driver will do: G-Sync has been supported by all of the company's drivers for more than a year. If you have all the required components, you only need to enable G-Sync in the driver, if you have not already done so, and the technology will work in all full-screen applications, and only in them, owing to the very principle on which it works.

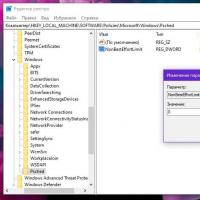

To enable G-Sync for full-screen applications and get the best possible experience, set the 144 Hz refresh rate in the Nvidia Control Panel or in the operating system's desktop settings. Then make sure the technology is enabled on the "Configure G-Sync" page.

You also need to select the corresponding option for the "Vertical sync" setting among the global 3D settings on the "Manage 3D settings" page. There you can also disable G-Sync for testing purposes or in case of problems (looking ahead: we did not encounter any during our testing).

G-Sync works at all resolutions supported by monitors, up to UltraHD, but in our case we used the native resolution of 2560 × 1440 pixels at 144 Hz. For comparison with the current state of affairs, we used a 60 Hz refresh rate with G-Sync disabled to emulate the behavior of the typical non-G-Sync monitors that most gamers own, most of which are Full HD monitors limited to 60 Hz.

It should be noted that although with G-Sync enabled the screen refreshes at the ideal moment, whenever the GPU "wants" it, the optimal mode is still rendering at around 40-60 FPS. This is the most suitable frame rate for modern games: not so low as to hit the lower limit of 30 FPS, yet not requiring lower settings. Incidentally, this is exactly the frame rate Nvidia's Geforce Experience aims for when it applies suitable settings for popular games; the program comes with the drivers.

In addition to games, we also tried a specialized test application from Nvidia, Pendulum Demo. It shows an easy-to-follow 3D pendulum scene for assessing smoothness and quality, lets you simulate different frame rates and choose the display mode: V-Sync Off, V-Sync On or G-Sync. With this software it is very easy to show the difference between sync modes, for example between V-Sync On and G-Sync:

Pendulum Demo lets you test different sync methods under different conditions. It can simulate an exact 60 FPS frame rate to compare V-Sync and G-Sync under conditions that are ideal for the legacy method; in this mode there should simply be no difference between them. But the 40-50 FPS mode puts V-Sync On at a disadvantage: delays and uneven frame changes are visible to the naked eye, since the frame render time exceeds the refresh period at 60 Hz. With G-Sync enabled, everything becomes perfect.

When comparing V-Sync Off with G-Sync, Nvidia's app also helps to see the difference: at frame rates between 40 and 60 FPS, tearing is clearly visible, although there is less lag than with V-Sync On. The footage also looks less smooth than in G-Sync mode, although in theory it should not; perhaps this is how the brain's perception of "torn" frames comes into play.

With G-Sync enabled, any mode of the test application (constant or variable frame rate, it doesn't matter) always gives the smoothest footage. In games, all the problems of the traditional approach to updating a fixed-refresh monitor are sometimes even more noticeable. Here you can clearly assess the difference between all three modes using StarCraft II as an example (playback of a previously saved replay):

If your system and browser support playback of MP4/H.264 video at 60 FPS, you will clearly see obvious tearing with sync disabled, and jerks and unevenness with V-Sync enabled. All this disappears when you turn on Nvidia G-Sync: there are no image artifacts, no added latency and no "ragged" frame rate.

Of course, G-Sync is not a magic wand, and it will not save you from delays and slowdowns that are not caused by outputting frames to a fixed-refresh monitor. If the game itself has problems with smooth frame output and large FPS jerks caused by texture loading, CPU data processing, suboptimal video memory management, poorly optimized code and so on, they will remain. Moreover, they will become even more noticeable, since all the other frames will be output perfectly smoothly. In practice, however, such problems are not very common on powerful systems, and G-Sync really does improve the perception of dynamic footage.

Since Nvidia's new output technology affects the entire output pipeline, in theory it can cause artifacts and uneven frame rates, especially if the game artificially limits FPS at some point. Such cases, if they exist at all, are probably so rare that we did not notice any. What we did notice was a clear improvement in comfort: when playing on a monitor with G-Sync enabled, it feels as though the PC has become powerful enough to sustain at least 60 FPS at all times without any drops.

The feeling you get when playing on a G-Sync monitor is very difficult to put into words. The difference is especially noticeable at 40-60 FPS, a frame rate very common in demanding modern games. The difference compared to conventional monitors is simply amazing, and we will try not only to describe it and show it in videos, but also to present frame rate graphs obtained in different display modes.

In real-time strategy and similar games, such as StarCraft II, League of Legends, DotA 2 and so on, the benefits of G-Sync are clearly visible, as the video above shows. In addition, such games always demand fast actions that do not tolerate delays and irregular frame rates, and smooth scrolling is important for comfort; it is badly hampered by tearing with V-Sync Off and by delays and lags with V-Sync On. So G-Sync is ideal for this type of game.

First-person shooters like Crysis 3 and Far Cry 4 are even more common. They are also very demanding on computing resources, and at high quality settings players often get only about 30-60 FPS, which is ideal for G-Sync, since it greatly improves comfort in such conditions. Traditional vertical sync will very often force frame output down to as little as 30 FPS, increasing lag and jerkiness.

Much the same goes for third-person games like Batman, Assassin's Creed and Tomb Raider. These games also use the latest graphics technologies and require fairly powerful GPUs to reach high frame rates. At maximum settings with V-Sync disabled, these games often run at about 30-90 FPS, which causes unpleasant tearing. Enabling V-Sync helps only in some less demanding scenes, and the frame rate jumps in steps between 30 and 60, causing slowdowns and jerks. Enabling G-Sync solves all these problems, and this is clearly noticeable in practice.

Practical test results

In this section, we look at how G-Sync and V-Sync affect frame rates using performance graphs, to help you understand how the different technologies work. We tested several games, but not all of them are convenient for showing the difference between V-Sync and G-Sync: some game benchmarks do not allow forcing V-Sync, other games lack a convenient tool for replaying an exact game sequence (most modern games, unfortunately), and still others run on our test system either too fast or within a narrow frame rate range.

So we settled on Just Cause 2 with maximum settings, as well as a couple of benchmarks: Unigine Heaven and Unigine Valley - also with maximum quality settings. The frame rate in these applications varies over a fairly wide range, which is convenient for our purpose - to show what happens to the output of frames in different conditions.

Unfortunately, we do not currently have the FCAT hardware and software system available, so we cannot show real FPS charts and recorded videos in different modes. Instead, we measured per-second average and instantaneous frame rates with a well-known utility at 60 and 120 Hz monitor refresh rates using the V-Sync On, V-Sync Off and Adaptive V-Sync screen refresh methods, and with G-Sync at 144 Hz, to show the difference between the new technology and current 60 Hz monitors with traditional vertical sync.

G-Sync vs V-Sync On

We begin by comparing V-Sync On with G-Sync. This is the most revealing comparison, since it shows the difference between the two tearing-free methods. First, let's look at the Heaven test at maximum quality settings at 2560 × 1440 pixels:

As the graph shows, the frame rate with G-Sync enabled and with no synchronization is practically the same, except above 60 FPS. The frame rate with V-Sync On, however, is noticeably different: in this mode it can only be 60 FPS divided by a whole number (1, 2, 3, 4, 5, 6, ...), because the monitor sometimes has to show the same frame for several refresh periods (two, three, four, and so on). So the possible frame rate "steps" with V-Sync On at 60 Hz are 60, 30, 20, 15, 12, 10, ... FPS.
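The step values follow from simple division: under V-Sync on a fixed-refresh display, each frame stays on screen for a whole number of refresh periods, so the only steady frame rates available are the refresh rate divided by 1, 2, 3, and so on. A minimal Python sketch of that arithmetic (the function name `vsync_steps` is our own, chosen for illustration):

```python
def vsync_steps(refresh_hz: float, count: int) -> list[float]:
    """Return the first `count` steady frame rates possible under V-Sync.

    A frame held on screen for n refresh periods is shown at refresh_hz / n FPS.
    """
    return [refresh_hz / n for n in range(1, count + 1)]


print(vsync_steps(60, 6))  # [60.0, 30.0, 20.0, 15.0, 12.0, 10.0]
```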

This stepping is clearly visible in the red line of the graph: during the run, the frame rate was often 20 or 30 FPS and only rarely 60 FPS, while with G-Sync and V-Sync Off (No Sync) it mostly stayed in a wider 35-50 FPS range. With V-Sync enabled, such output rates are impossible, so in these cases the monitor shows only 30 FPS, which limits performance and adds to the overall output latency.
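Why a GPU capable of 35-50 FPS ends up at 30 FPS can be sketched with a simplified model. We assume double buffering and a constant render rate (triple buffering and real frame-to-frame variation are ignored): each frame must wait for the next refresh boundary, so it occupies the number of refresh periods obtained by rounding refresh_hz / render_fps up. The function name is hypothetical.

```python
import math


def vsync_displayed_fps(render_fps: float, refresh_hz: float) -> float:
    """Steady frame rate shown under V-Sync for a GPU rendering at render_fps.

    Simplified model (assumption): double buffering and a constant render rate.
    Each frame waits for the next refresh, so it occupies
    ceil(refresh_hz / render_fps) refresh periods.
    """
    periods = math.ceil(refresh_hz / render_fps)
    return refresh_hz / periods


for fps in (35, 40, 45, 50):
    # Any rate from 30 up to (but not including) 60 FPS is shown at 30 FPS,
    # so each of these lines prints 30.0 as the displayed rate.
    print(fps, "->", vsync_displayed_fps(fps, 60))
```

The same function also reproduces the lower steps: a GPU managing 25 FPS at 60 Hz needs three refresh periods per frame and is shown at 20 FPS.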

Note that the graph above shows not instantaneous frame rates but values averaged over each second. In reality, FPS can jump much more, almost from frame to frame, which causes unpleasant unevenness and lag. To show this clearly, here are a couple of graphs of instantaneous FPS, or more precisely, of the rendering time of each frame in milliseconds. The first example (the lines are slightly offset from each other and show only the approximate behavior in each mode):

In this example, the frame rate with G-Sync changes more or less smoothly, while with V-Sync On it changes in steps (both cases show occasional single spikes in rendering time, which is normal). With V-Sync enabled, frame rendering and output times take values such as 16.7 ms, 33.3 ms, and 50 ms, as the graph shows, which corresponds to 60, 30, and 20 FPS. Apart from this, the two lines behave similarly, with spikes in both. Let's look at another significant stretch of time:
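The difference between the two lines can be modeled per frame. In this rough sketch (our own simplification, not Nvidia's implementation), V-Sync rounds each frame's render time up to a whole number of 16.7 ms periods, while a variable-refresh display simply waits for the GPU, limited only by the panel's maximum rate (144 Hz, as on the monitor we tested). The render times are hypothetical, and G-Sync's lower frame-rate limit is not modeled here.

```python
import math


def vsync_display_ms(render_ms: float, refresh_hz: float) -> float:
    """On-screen time under V-Sync: render time rounded up to whole refresh periods."""
    periods = math.ceil(render_ms * refresh_hz / 1000)
    return periods * 1000 / refresh_hz


def gsync_display_ms(render_ms: float, max_refresh_hz: float = 144) -> float:
    """On-screen time with variable refresh: the panel waits for the GPU,
    but cannot refresh faster than its maximum rate."""
    return max(render_ms, 1000 / max_refresh_hz)


render_times = [14.0, 20.0, 18.0, 35.0]  # hypothetical per-frame render times, ms
print([round(vsync_display_ms(t, 60), 1) for t in render_times])  # [16.7, 33.3, 33.3, 50.0]
print([gsync_display_ms(t) for t in render_times])  # [14.0, 20.0, 18.0, 35.0]
```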

Here the frame rendering time, and with it the FPS, clearly jumps around with vertical sync enabled. With V-Sync On, the frame rendering time changes abruptly from 16.7 ms (60 FPS) to 33.3 ms (30 FPS) and back, which in practice causes very uncomfortable unevenness and clearly visible stutter. Frame delivery with G-Sync is much smoother, and playing in this mode is far more comfortable.

Let's take a look at the FPS graph in the second test application - Unigine Valley:

In this benchmark we see roughly the same picture as in Heaven. The frame rates with G-Sync and V-Sync Off are almost identical (except for a peak above 60 FPS), while V-Sync On produces a clearly stepped FPS pattern, most often showing 30 FPS, sometimes dropping to 20 FPS and rising to 60 FPS. This is typical behavior for this method and causes lag, stutter, and uneven motion.

To finish this subsection, let's look at a segment of the built-in benchmark in Just Cause 2:

This game shows all the shortcomings of the outdated V-Sync On method perfectly! With the frame rate varying from 40 to 60-70 FPS, the G-Sync and V-Sync Off lines almost coincide, but with V-Sync On the frame rate reaches 60 FPS only in short segments. In other words, although the GPU is actually capable of 40-55 FPS, the player has to settle for just 30 FPS.

Moreover, in the part of the graph where the red line jumps between 30 and 40 FPS, the image is visibly uneven in practice: the frame rate switches between 60 and 30 FPS almost every frame, which does nothing for smoothness and comfort. But perhaps V-Sync does better at a 120 Hz refresh rate?
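How a per-second average of 40 FPS can hide a 60/30 FPS alternation is easy to show with a short sketch. The frame sequence below is hypothetical: under V-Sync at 60 Hz, frames alternately stay on screen for one and two refresh periods.

```python
def average_fps(frame_times_ms: list[float]) -> float:
    """Average FPS over a run: frames shown divided by total seconds."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000)


def instantaneous_fps(frame_times_ms: list[float]) -> list[float]:
    """Per-frame FPS: the reciprocal of each frame's on-screen time."""
    return [1000 / t for t in frame_times_ms]


# Hypothetical V-Sync 60 Hz run: frames alternate between one and two refresh periods.
frames = [1000 / 60, 2000 / 60] * 20

print(round(average_fps(frames)))                         # 40
print([round(f) for f in instantaneous_fps(frames)[:4]])  # [60, 30, 60, 30]
```

The averaged graph would show a steady 40 FPS, while every single frame is displayed at either 60 or 30 FPS, which is exactly the unevenness the eye picks up.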

G-Sync vs V-Sync 60/120 Hz

Let's look at V-Sync On at 60 Hz and at 120 Hz, comparing both with V-Sync Off (which, as we found earlier, is almost identical to G-Sync). At 120 Hz, new values are added to the familiar FPS "steps": 120, 40, 24, 17 FPS, and so on, which can make the graph less stepped. Here is the frame rate in the Heaven benchmark:
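The extra 120 Hz steps can be computed directly. This sketch uses Python's `fractions.Fraction` so that values such as 120/7 (about 17 FPS) compare exactly instead of as rounded floats; the helper names are our own.

```python
from fractions import Fraction


def vsync_steps(refresh_hz: int, count: int) -> list[Fraction]:
    """Exact steady frame rates under V-Sync: refresh_hz / n for n = 1..count."""
    return [Fraction(refresh_hz, n) for n in range(1, count + 1)]


def extra_steps(high_hz: int, low_hz: int, count: int) -> list[Fraction]:
    """Steps available at high_hz that do not exist at low_hz."""
    low = set(vsync_steps(low_hz, count))
    return [s for s in vsync_steps(high_hz, count) if s not in low]


print([round(float(s)) for s in extra_steps(120, 60, 8)])  # [120, 40, 24, 17]
```

Because 120/(2k) equals 60/k, every even divisor at 120 Hz reproduces a 60 Hz step; the new steps are exactly those with an odd divisor (1, 3, 5, 7, ...).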

The 120 Hz refresh rate clearly helps V-Sync On achieve better performance and smoother frame rates. Where the 60 Hz graph shows 20 FPS, the 120 Hz mode gives an intermediate value of at least 24 FPS, and 40 FPS appears in place of 30 FPS. But there are no fewer steps; there are even more, so at 120 Hz the frame rate changes by smaller amounts but more often, which also hurts overall smoothness.
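The "smaller but more frequent" changes at 120 Hz can be illustrated with the same simplified per-frame model as before (render time rounded up to whole refresh periods; double buffering assumed). The render times are hypothetical values around 25 ms, i.e. a GPU running at roughly 40 FPS.

```python
import math


def vsync_display_ms(render_ms: float, refresh_hz: float) -> float:
    """On-screen time under V-Sync: render time rounded up to whole refresh periods."""
    return math.ceil(render_ms * refresh_hz / 1000) * 1000 / refresh_hz


render_times = [22.0, 26.0, 24.0, 27.0]  # hypothetical render times, ms
for hz in (60, 120):
    fps = [round(1000 / vsync_display_ms(t, hz)) for t in render_times]
    print(hz, "Hz:", fps)
# 60 Hz: [30, 30, 30, 30]   steady, but stuck at the lower step
# 120 Hz: [40, 30, 40, 30]  closer to the real rate, but switching every frame
```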

In the Valley benchmark there are fewer changes, because the average frame rate stays close to 30 FPS, a step available in both the 60 Hz and 120 Hz modes. V-Sync Off gives a smoother frame rate but with visual artifacts, and both V-Sync On modes again show jagged lines. That leaves Just Cause 2 for this subsection.

Once again, we can clearly see how poorly vertical sync handles smooth frame delivery. Even switching to 120 Hz only gives V-Sync On a few additional FPS "steps"; the frame rate still jumps back and forth between them, which is very unpleasant when watching animated 3D scenes. You can take our word for it or watch the video examples above again.

Effect of Output Method on Average Frame Rate

What happens to the average frame rate with each of these sync modes, and how do V-Sync and G-Sync affect average performance? You can roughly estimate the loss from the FPS charts above, but here are the average frame rates we measured during testing. First, Unigine Heaven again:

Adaptive V-Sync and V-Sync Off give practically the same results, since the frame rate rarely rises above 60 FPS in this test. As expected, enabling V-Sync lowers the average frame rate, because in this mode FPS is restricted to discrete steps. At 60 Hz the average frame rate fell by more than a quarter, and switching to 120 Hz recovered only half of that loss.
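Why Adaptive V-Sync tracks V-Sync Off here, while V-Sync On loses frames, can be sketched with a deliberately simplified model of the three modes. We assume Adaptive V-Sync syncs only while the GPU keeps up with the refresh rate and otherwise behaves like V-Sync Off; tearing, triple buffering, and frame-time variation are not modeled, and the function and mode names are hypothetical.

```python
import math


def displayed_fps(render_fps: float, refresh_hz: float, mode: str) -> float:
    """Steady measured frame rate under a simplified model of each mode.

    'off'      - frames are sent as soon as they are ready; the measured
                 rate equals the render rate (tearing is not modeled)
    'vsync'    - frames wait for refresh boundaries:
                 refresh_hz / ceil(refresh_hz / render_fps)
    'adaptive' - V-Sync only while the GPU keeps up with the refresh rate,
                 otherwise behaves like 'off'
    """
    if mode == "off":
        return render_fps
    if mode == "vsync":
        return refresh_hz / math.ceil(refresh_hz / render_fps)
    if mode == "adaptive":
        return min(render_fps, refresh_hz)
    raise ValueError(f"unknown mode: {mode}")


for fps in (45, 55, 70):
    print(fps, [displayed_fps(fps, 60, m) for m in ("off", "vsync", "adaptive")])
```

Below 60 FPS the "adaptive" and "off" columns match, while "vsync" drops to 30; only above the refresh rate does Adaptive V-Sync cap the frame rate.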

Most interesting for us is how much the average frame rate drops with G-Sync. For some reason, frame rates above 60 FPS were capped even though the monitor was set to 144 Hz, so the result with G-Sync was slightly lower than with synchronization disabled. Overall, the loss is negligible, and it certainly cannot be compared with the shortfall under V-Sync On. Next, the second benchmark, Valley:

Here the drop in average rendering speed with V-Sync enabled was smaller, because the frame rate stayed close to 30 FPS throughout the test, which is one of the V-Sync "steps" at both 60 and 120 Hz. As expected, the loss at 120 Hz was slightly smaller.

With G-Sync enabled, the average frame rate was again lower than with synchronization disabled, for the same reason: G-Sync capped FPS values above 60. But the difference is small, and Nvidia's new mode delivers a noticeably higher frame rate than V-Sync On. Finally, the average frame rate in Just Cause 2:

In this game, V-Sync On suffered much more than in the Unigine-based benchmarks. At 60 Hz, the average frame rate in this mode is more than 1.5 times lower than with synchronization disabled! Switching to 120 Hz improves the situation considerably, but G-Sync still delivers noticeably higher performance even in average FPS, to say nothing of gameplay comfort, which cannot be judged by numbers alone: you have to see it for yourself.

So in this section we found that G-Sync delivers a frame rate close to that of the sync-off mode, and enabling it has almost no effect on performance. Conventional V-Sync, by contrast, changes the frame rate in steps and often jumps from one step to another, which causes uneven motion in animated sequences and hurts comfort in 3D games.

In other words, both our subjective impressions and the test results show that Nvidia's G-Sync really does improve visual comfort in 3D games. The new method is free of the tearing artifacts seen with V-Sync disabled, where the picture is made up of several adjacent frames, and it also avoids the uneven frame delivery and increased output latency of V-Sync On.

Conclusion

Given how difficult it is to measure the smoothness of video output objectively, we would first like to give a subjective assessment. We were quite impressed by how comfortable gaming was on an Nvidia Geforce card with the Asus G-Sync monitor. Even a single live demonstration of G-Sync leaves a strong impression with its smooth frame changes, and after using the technology for a long time, going back to a monitor with the old display methods feels very dreary.

G-Sync can perhaps be considered the biggest change in how visual information is displayed on screen in a long time: at last we have seen something genuinely new in displays and GPUs that directly, and very noticeably, affects how comfortably 3D graphics are perceived. Until Nvidia announced G-Sync, we had been tied for years to legacy display standards rooted in the requirements of the TV and film industries.

Of course, we would have liked such features even earlier, but now is a good time for them, since in many demanding 3D games at maximum settings, modern top-end graphics cards deliver exactly the frame rates at which G-Sync brings the greatest benefit. Before Nvidia's technology arrived, the realism achieved in games was simply undermined by far-from-ideal methods of updating the picture on the monitor, which caused tearing, increased latency, and stutter. G-Sync removes these problems by matching the display's refresh to the GPU's rendering rate (albeit with some limitations), so the GPU itself now controls this process.

We have not met a single person who tried G-Sync in action and was dissatisfied with it. The first lucky people to test the technology at an Nvidia event last fall were overwhelmingly enthusiastic. Trade press journalists and game developers (John Carmack, Tim Sweeney, and Johan Andersson) also gave the new output method extremely positive feedback. We now join them: after several days with a G-Sync monitor, we do not want to go back to old devices with long-outdated synchronization methods. If only the choice of G-Sync monitors were wider and they were not limited to TN panels...

As for the downsides of Nvidia's technology, it only works at frame rates of 30 FPS and above, which can be considered an annoying limitation: it would be better if frames were also displayed cleanly at 20-25 FPS as soon as the GPU finishes them. But the main drawback is that G-Sync is Nvidia's proprietary solution, not used by the other GPU vendors, AMD and Intel. Nvidia's position is understandable: the company spent resources developing and implementing the technology and negotiated monitor support with manufacturers in order to make money. Once again they have acted as an engine of technical progress, whatever one thinks of their pursuit of profit. To reveal the big "secret": profit is the main goal of any commercial company, and Nvidia is no exception.
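The operating range described above can be summarized in a small sketch. It assumes a 30 FPS lower limit (from the text) and a 144 Hz panel maximum (the monitor we tested). What the display actually does below 30 FPS is not described here, so the hypothetical function returns `None` for that case instead of guessing.

```python
from typing import Optional


def gsync_displayed_fps(
    render_fps: float, min_fps: float = 30, max_hz: float = 144
) -> Optional[float]:
    """Displayed frame rate with G-Sync under a simplified model.

    Inside the supported range the refresh follows the GPU; above it the
    panel cannot refresh faster than max_hz. Below min_fps the behavior is
    not modeled, so None is returned rather than a guessed value.
    """
    if render_fps < min_fps:
        return None
    return min(render_fps, max_hz)


for fps in (25, 45, 200):
    print(fps, "->", gsync_displayed_fps(fps))
```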

Still, the future more likely belongs to more universal open standards that are similar to G-Sync in essence, such as Adaptive-Sync, an optional feature of DisplayPort 1.2a. But monitors supporting it will take some time to appear and spread, probably until the middle of next year, while G-Sync monitors from various companies (Asus, Acer, BenQ, AOC, and others) have already been on sale for several months, though they are not cheap. Nothing prevents Nvidia from supporting Adaptive-Sync in the future, although the company has not officially commented on the matter. Let's hope that Geforce owners, who already have a working solution in G-Sync, will in future also be able to use a dynamic refresh rate based on a common standard.

Another disadvantage of Nvidia G-Sync for users is that adding support to a monitor costs the manufacturer extra, which raises the retail price compared with standard monitors. However, G-Sync monitors come in a range of prices, including some that are not too expensive. The main thing is that they are already on sale, so any gamer can get the most comfortable gaming experience right now, though for now only with Nvidia Geforce graphics cards, since Nvidia vouches for this technology.

Instructions

To change this setting, open your game's menu, find the "Options" menu, and in the "Video" sub-section look for the "Vertical Sync" item. If the menu is in English and the options are text, set the switch to "Disabled". Then click "Apply" to save the setting. The change takes effect after you restart the game.

If the application has no such setting, you will have to configure synchronization through the graphics card driver. The procedure differs for AMD Radeon and Nvidia Geforce graphics cards.