Throttling bandwidth with QoS: how to use QoS to ensure the quality of Internet access, and where to find the QoS Packet Scheduler

In the first part of this series, I covered what QoS does and what it is used for. In this part I will continue the conversation by explaining how QoS works. As you read this article, please keep in mind that the information presented here is based on the QoS implementation in Windows Server 2003, which is different from the QoS implementation in Windows 2000 Server.

Traffic management API

One of the main problems with prioritizing network traffic is that you cannot prioritize traffic based on the computer that generates it. It is common for a single computer to run multiple applications and to create a separate traffic stream for each application (and for the operating system). When this happens, each traffic stream must be prioritized individually. After all, one application may need reserved bandwidth, while best-effort delivery is ideal for another application.

This is where the Traffic Control API comes into play. The Traffic Control API is an application programming interface that allows you to apply QoS parameters to individual packets. It works by defining individual traffic flows and applying different QoS control methods to those flows.

The first thing the Traffic Control API does is create what is known as a filterspec. A filterspec is essentially a filter that defines what it means for a packet to belong to a particular flow. Some of the attributes used by the filterspec include the source and destination IP addresses of the packet and the port number.

Once the filterspec has been defined, the API allows you to create a flowspec. The flowspec defines the QoS parameters that will be applied to the sequence of packets. Some of the parameters defined by the flowspec include the acceptable transfer rate and the type of service.

The third concept defined by the Traffic Control API is the flow. A flow is simply a sequence of packets that are subject to the same flowspec. In simple terms, the filterspec defines which packets belong to the flow, the flowspec determines whether those packets will be processed with higher priority, and the flow is the actual sequence of packets processed according to the flowspec. All packets in a flow are treated equally.
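To make the relationship between the three concepts more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the `Packet`, `FilterSpec`, and `FlowSpec` classes, their fields, and the service type string are hypothetical names invented for this example and are not part of the actual Traffic Control API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int


@dataclass(frozen=True)
class FilterSpec:
    """Hypothetical filter: a field left as None matches any value."""
    src_ip: Optional[str] = None
    dst_ip: Optional[str] = None
    dst_port: Optional[int] = None

    def matches(self, pkt: Packet) -> bool:
        return all(
            want is None or want == got
            for want, got in (
                (self.src_ip, pkt.src_ip),
                (self.dst_ip, pkt.dst_ip),
                (self.dst_port, pkt.dst_port),
            )
        )


@dataclass(frozen=True)
class FlowSpec:
    """Hypothetical QoS parameters applied to every packet in a flow."""
    rate_bytes_per_sec: int
    service_type: str


def select_flow(packets, filterspec):
    """Return the packets that belong to the flow described by filterspec."""
    return [p for p in packets if filterspec.matches(p)]


packets = [
    Packet("10.0.0.5", "10.0.0.9", 5004),
    Packet("10.0.0.5", "10.0.0.9", 80),
    Packet("10.0.0.7", "10.0.0.9", 5004),
]
video_filter = FilterSpec(src_ip="10.0.0.5", dst_port=5004)
video_spec = FlowSpec(rate_bytes_per_sec=250_000, service_type="guaranteed")
video_flow = select_flow(packets, video_filter)
```

Here `video_flow` holds only the packets selected by the filter, and `video_spec` is the single set of parameters that would be applied to all of them.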

It should be mentioned that one of the advantages of the Traffic Control API over the Generic QoS API used in Windows 2000 is the ability to use aggregation. If a node has multiple applications transmitting multiple data streams to a common destination, then these packets can be combined into a common flow. This is true even if the applications use different port numbers, provided that the source and destination IP addresses are the same.
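The idea of aggregation can be sketched in a few lines of Python. This is only an illustration, not how Windows implements it: the function name and the tuple layout are assumptions made for the example. Packets are grouped by source and destination address alone, so different port numbers end up in the same flow.

```python
from collections import defaultdict


def aggregate_by_endpoints(packets):
    """Group (src_ip, dst_ip, port) tuples by address pair, ignoring the port."""
    flows = defaultdict(list)
    for src_ip, dst_ip, port in packets:
        flows[(src_ip, dst_ip)].append(port)
    return dict(flows)


traffic = [
    ("10.0.0.5", "10.0.0.9", 5004),   # application A
    ("10.0.0.5", "10.0.0.9", 8080),   # application B, same endpoints
    ("10.0.0.5", "10.0.0.20", 5004),  # different destination
]
aggregated = aggregate_by_endpoints(traffic)
```

The first two entries share one aggregated flow even though their ports differ, while the third forms a flow of its own.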

Generic Packet Classifier

In the previous section, I discussed the relationship between flowspec, filterspec, and flow. However, it is important to remember that the Traffic Control API is simply an application programming interface. As such, its job is to define and prioritize traffic flows, not create those flows.

The Generic Packet Classifier is responsible for creating flows. As you recall from the previous section, one of the attributes defined in the flowspec is the service type. The service type essentially determines the priority of the flow. The Generic Packet Classifier determines the service type that has been assigned to the flowspec and then queues the associated packets according to that service type. Each flow is placed in a separate queue.
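As a rough illustration of one queue per flow, ordered by service type, consider the following Python sketch. The `Classifier` class and the three service type names are hypothetical and chosen only for the example; the real classifier is a system component and is not exposed this way.

```python
from collections import deque

# Hypothetical service types, listed from highest to lowest priority.
SERVICE_TYPES = ("guaranteed", "controlled_load", "best_effort")


class Classifier:
    def __init__(self):
        self.queues = {}      # one queue per flow, keyed by flow name
        self.service_of = {}  # service type assigned to each flow

    def add_flow(self, flow_name, service_type):
        if service_type not in SERVICE_TYPES:
            raise ValueError(f"unknown service type: {service_type}")
        self.queues[flow_name] = deque()
        self.service_of[flow_name] = service_type

    def enqueue(self, flow_name, packet):
        self.queues[flow_name].append(packet)

    def flows_by_priority(self):
        """Flow names ordered from highest to lowest service type."""
        return sorted(
            self.queues,
            key=lambda name: SERVICE_TYPES.index(self.service_of[name]),
        )


classifier = Classifier()
classifier.add_flow("web", "best_effort")
classifier.add_flow("video", "guaranteed")
classifier.enqueue("web", "w1")
classifier.enqueue("video", "v1")
classifier.enqueue("video", "v2")
order = classifier.flows_by_priority()
```

Each flow keeps its own queue, and `order` lists the video flow ahead of the web flow because of its service type.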

QoS Packet Scheduler

The third QoS component you need to be aware of is the QoS packet scheduler. Simply put, the primary job of a QoS packet scheduler is traffic shaping. To do this, the packet scheduler receives packets from various queues, and then marks these packets with priorities and flow rate.
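Traffic shaping is commonly explained with a token bucket, so here is a minimal Python sketch of that technique. Note the assumption: this article does not say which algorithm the QoS Packet Scheduler uses internally, and the `TokenBucket` class and its parameters are hypothetical names for illustration only.

```python
class TokenBucket:
    def __init__(self, rate_bytes_per_sec, burst_bytes):
        self.rate = rate_bytes_per_sec
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start with a full bucket
        self.last = 0.0

    def allow(self, packet_bytes, now):
        """Return True if the packet may be sent at time `now` (seconds)."""
        elapsed = now - self.last
        self.last = now
        # Refill tokens for the elapsed time, never beyond the burst size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False


bucket = TokenBucket(rate_bytes_per_sec=1000, burst_bytes=1500)
results = [
    bucket.allow(1500, now=0.0),  # the initial burst allowance covers this packet
    bucket.allow(1500, now=0.5),  # only 500 bytes of tokens have accumulated
    bucket.allow(1500, now=2.0),  # tokens have refilled up to the 1500-byte cap
]
```

A packet that is refused would, in a real shaper, wait in its queue until enough tokens accumulate rather than being sent immediately.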

As I discussed in the first part of this article series, for QoS to work correctly, the various components located between the source of packets and their destination must support (i.e. be aware of) QoS. While these devices need to know how to deal with QoS, they also need to know how to handle normal traffic without priorities. To make this possible, QoS uses a technology called tagging.

In fact, there are two types of tagging here. The QoS Packet Scheduler uses Diffserv tagging, which is recognized by Layer 3 devices, and 802.1p tagging, which is recognized by Layer 2 devices.

Configuring the QoS Packet Scheduler

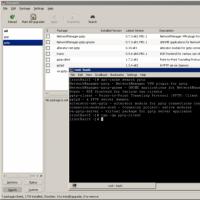

Before I show you how tagging works, note that you will need to configure the QoS Packet Scheduler for this to work. In Windows Server 2003, the QoS Packet Scheduler is an optional networking component, just like the Client for Microsoft Networks or the TCP/IP protocol. To enable the QoS Packet Scheduler, open the properties page of your server's network connection and check the box next to QoS Packet Scheduler, as shown in Figure A. If the QoS Packet Scheduler is not listed, click the Install button and follow the instructions.

Figure A: QoS Packet Scheduler must be enabled before you can use QoS

Another thing you need to know about the QoS Packet Scheduler is that your network adapter must support 802.1p tagging for it to work properly. To check your adapter, click the Configure button shown in Figure A, and Windows will display the properties for your network adapter. If you look at the Advanced tab on the property page, you will see the various properties that your network adapter supports.

If you look at Figure B, you can see that 802.1Q / 1P VLAN Tagging is one of the properties listed. You can also see that this property is disabled by default. To enable 802.1p tagging, simply enable this property and click OK.

Figure B: You must enable 802.1Q / 1P VLAN Tagging

You may have noticed in Figure B that the feature you enabled is VLAN tagging, not packet tagging. This is because priority markers are included in VLAN tags. The 802.1Q standard defines VLANs and VLAN tags. This standard actually reserves three bits in the VLAN tag, which are used to carry the priority code. Unfortunately, the 802.1Q standard never specifies what these priority codes should be.
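To show where those three bits sit, here is a small Python sketch that packs and unpacks a 4-byte 802.1Q tag. The layout used (a 16-bit tag protocol identifier of 0x8100, then three priority bits, one drop-eligible bit, and a twelve-bit VLAN ID) follows the 802.1Q tag format; the function names are my own and purely illustrative.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that identifies an 802.1Q tag


def build_vlan_tag(priority, vlan_id, drop_eligible=False):
    """Pack a 4-byte 802.1Q tag: TPID followed by the tag control information."""
    if not 0 <= priority <= 7:
        raise ValueError("priority must fit in three bits (0-7)")
    if not 0 <= vlan_id <= 0xFFF:
        raise ValueError("VLAN ID must fit in twelve bits")
    tci = (priority << 13) | (int(drop_eligible) << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_priority(tag):
    """Extract the three-bit priority code from a 4-byte 802.1Q tag."""
    _tpid, tci = struct.unpack("!HH", tag)
    return tci >> 13


tag = build_vlan_tag(priority=5, vlan_id=100)
priority = parse_priority(tag)
```

Because the priority field is only three bits wide, values outside 0 to 7 are rejected before the tag is built.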

The 802.1P standard was created to complement 802.1Q. 802.1P defines the priority tagging that can be enclosed in a VLAN tag. I will tell you how these two standards work in part three.

Conclusion

In this article, we have discussed some of the basic concepts in Windows Server 2003's QoS architecture. In Part 3, I'll go into more detail on how the QoS Packet Scheduler marks packets. I will also discuss how QoS works in a low bandwidth network environment.

In this article, we'll take a look at how to configure the reserved bandwidth in Windows 10. By default, Windows reserves 20% of the total Internet bandwidth.

Yes, yes, the Windows 10 operating system reserves a certain percentage of your Internet connection bandwidth for quality of service (QoS).

According to Microsoft:

QoS can include critical system operations such as updating the Windows system, managing the licensing status, etc. The concept of reserved bandwidth applies to all programs running on the system. Typically the packet scheduler will limit the system to 80% of the connectivity bandwidth. This means that Windows reserves 20% of your Internet bandwidth exclusively for QoS.

If you want to reclaim that reserved share of bandwidth, this article is for you. Below are two ways to configure the reserved bandwidth in Windows 10.

NOTE: If you turn off all the reserved bandwidth for your system, that is, set it to 0%, this will affect the actions of the operating system, especially automatic updates.

Disclaimer: the following steps involve editing the registry. Mistakes made while editing the registry can adversely affect your system, so be careful when editing registry entries and create a system restore point first.

Step 1: Open Registry Editor.

Step 2: In the left pane of the Registry Editor window, navigate to the following key:

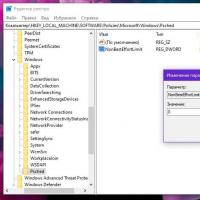

HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Microsoft\Windows\Psched

Note: If the key and the "NonBestEffortLimit" value do not exist, simply create them.

Step 3: In the right pane of the "Psched" registry key, find the DWORD (32-bit) value named NonBestEffortLimit. Double-click it to change its value:

By default, the parameter is set to 50 in hexadecimal or 80 in decimal notation.

Step 4: Select Decimal as the base and set the value to the percentage of bandwidth you want to reserve.

For example, if you set the value to 0, the reserved bandwidth for your Windows operating system will be completely disabled, i.e. equal to 0%. Click "OK" and close Registry Editor.

Step 5: Restart your PC for the changes to take effect.
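If you prefer not to click through Registry Editor, the same change can be prepared as a .reg file. The Python sketch below only generates the text of such a file and does not touch the registry itself; the helper name `make_reg_file` is hypothetical, while the key path and value name are the ones from the steps above. A DWORD in a .reg file is written as eight hexadecimal digits, so 20 becomes 00000014.

```python
KEY_PATH = r"HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Microsoft\Windows\Psched"


def make_reg_file(percent):
    """Return .reg file text that sets NonBestEffortLimit to `percent`."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return (
        "Windows Registry Editor Version 5.00\r\n"
        "\r\n"
        f"[{KEY_PATH}]\r\n"
        f'"NonBestEffortLimit"=dword:{percent:08x}\r\n'
    )


reg_text = make_reg_file(0)
```

Saving `reg_text` to a file with the .reg extension and importing it should have the same effect as steps 1 through 4, and the same caution about backing up first applies.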

If you want to configure or limit the reserved bandwidth on multiple computers in your organization or workplace, you can deploy the appropriate setting through a Group Policy Object (GPO).

Step 1: Open the Local Group Policy Editor

Step 2: Go to Computer Configuration → Administrative Templates → Network → QoS Packet Scheduler

Step 3: In the right pane, double-click the Limit reservable bandwidth policy.

By default, this policy is not configured and the system reserves 20% of the Internet connection's bandwidth. To change this, set the policy to "Enabled" and enter the desired percentage in the Bandwidth limit field.

There is hardly anyone who has not read some Windows XP FAQ at least once. And if so, then everyone knows about the supposedly harmful Quality of Service, or QoS for short. When configuring the system, it is highly recommended to disable it, because it reserves 20% of the network bandwidth by default, and the same problem supposedly exists in Windows 2000 as well.

These are the lines:

Q: How to completely disable the QoS (Quality of Service) service? How do I set it up? Is it true that it limits the network speed?

A: Indeed, by default Quality of Service reserves 20% of the channel bandwidth for its own needs (on any link, from a 14,400 bps modem to gigabit Ethernet). Moreover, even if you remove the QoS Packet Scheduler service from the connection's Properties, this bandwidth is not released. You can release it, or simply configure QoS, as follows. Launch the Group Policy applet (gpedit.msc). In Group Policy, find Local Computer Policy and click Administrative Templates. Select Network, then QoS Packet Scheduler. Enable Limit reservable bandwidth. Now reduce the Bandwidth limit from 20% to 0%, or simply disable it. If desired, you can also configure other QoS parameters here. To activate the changes, all that remains is to reboot.

20% is, of course, a lot. Truly, Microsoft "must die". Statements of this kind wander from FAQ to FAQ, from forum to forum, and from one media outlet to another, and they are used in all kinds of "tweakers", programs for "tuning" Windows XP (by the way, open "Group Policy" and "Local Security Policy", and no "tweaker" can match them in the wealth of customization options). Unfounded claims of this kind need to be exposed carefully, which is what we will now do using a systematic approach. That is, we will thoroughly study the issue, relying on official primary sources.

What is network quality of service?

Let's adopt the following simplified definition of a networked system. Applications run on hosts and communicate with each other. Applications send data to the operating system for transmission over the network. Once the data is handed to the operating system, it becomes network traffic.

Network QoS relies on the ability of the network to process this traffic so that the requirements of certain applications are met. This requires a fundamental mechanism for handling network traffic that can identify traffic eligible for special handling and control that handling.

The QoS functionality is designed to satisfy two network actors: network applications and network administrators. They often have disagreements. The network administrator limits the resources used by a specific application, while the application tries to grab as many network resources as possible. Their interests can be reconciled, taking into account the fact that the network administrator plays a leading role in relation to all applications and users.

Basic QoS parameters

Different applications have different requirements for handling their network traffic. Applications are more or less tolerant of latency and traffic loss. These requirements are expressed through the following QoS-related parameters:

- Bandwidth - the rate at which traffic generated by the application must be sent over the network;

- Latency - the delay that the application can tolerate in the delivery of a data packet;

- Jitter - the variation in delay;

- Loss - the percentage of data lost.

If infinite network resources were available, then all application traffic could be transmitted at the required rate, with zero latency, zero latency variation, and zero loss. However, network resources are not limitless.

The QoS mechanism controls the allocation of network resources for application traffic to meet its transmission requirements.
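The four parameters listed above can be estimated from simple measurements. The Python sketch below is only an illustration: the `summarize` function is hypothetical, it assumes at least one packet arrived, and it takes jitter to be the mean absolute change between consecutive delays, which is one common simplification rather than the only possible definition.

```python
def summarize(delays_ms, packets_sent, bytes_received, duration_s):
    """Summarize delay samples (in ms) for the packets that actually arrived."""
    received = len(delays_ms)
    mean_latency = sum(delays_ms) / received
    # Jitter here is the mean absolute change between consecutive delays.
    diffs = [abs(b - a) for a, b in zip(delays_ms, delays_ms[1:])]
    jitter = sum(diffs) / len(diffs) if diffs else 0.0
    loss_pct = 100.0 * (packets_sent - received) / packets_sent
    throughput_bps = 8 * bytes_received / duration_s
    return {
        "latency_ms": mean_latency,
        "jitter_ms": jitter,
        "loss_pct": loss_pct,
        "throughput_bps": throughput_bps,
    }


stats = summarize([20, 30, 20, 30], packets_sent=5,
                  bytes_received=4000, duration_s=2)
```

In this made-up sample, one of five packets is lost and the delay alternates between 20 ms and 30 ms, so the average latency looks modest while the jitter is comparatively large.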

Fundamental QoS Resources and Traffic Handling Mechanisms

The networks that connect hosts use a variety of networking devices including host network adapters, routers, switches, and hubs. Each of them has network interfaces. Each network interface can receive and transmit traffic at a finite rate. If the rate at which traffic is directed to an interface is higher than the rate at which the interface is forwarding traffic, congestion occurs.

Network devices can handle the congestion condition by queuing traffic in the device memory (in a buffer) until the congestion is over. In other cases, network equipment can drop traffic to ease congestion. As a result, applications are faced with a change in latency (as traffic is stored in queues on interfaces) or with a loss of traffic.

The ability of network interfaces to forward traffic and the availability of memory to store traffic on network devices (until traffic can be sent further) constitute the fundamental resources required to provide QoS for application traffic streams.
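The interplay of forwarding capacity and buffer memory can be seen in a toy simulation. The following Python sketch is a simplified model under stated assumptions: time advances in discrete ticks, the interface forwards a fixed number of packets per tick, and packets that do not fit in the buffer are dropped. The function name and parameters are hypothetical.

```python
def simulate_interface(arrivals, service_per_tick, buffer_size):
    """arrivals[i] packets arrive at tick i; return (forwarded, dropped, max_queue)."""
    queue = forwarded = dropped = max_queue = 0
    for arriving in arrivals:
        # Accept packets while buffer space remains; drop the rest.
        accepted = min(arriving, buffer_size - queue)
        dropped += arriving - accepted
        queue += accepted
        max_queue = max(max_queue, queue)
        # Forward up to the interface's capacity for this tick.
        sent = min(queue, service_per_tick)
        queue -= sent
        forwarded += sent
    return forwarded, dropped, max_queue


result = simulate_interface([2, 6, 6, 0, 0], service_per_tick=3, buffer_size=5)
```

With bursts of six packets against a capacity of three per tick, the buffer fills, some packets wait (added latency), and the overflow is lost, which are exactly the two symptoms described above.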

Allocating QoS Resources to Network Devices

Devices that support QoS use network resources intelligently to carry traffic. That is, the traffic of applications that are more tolerant of latency is queued (stored in a buffer in memory), while the traffic of latency-critical applications is forwarded right away.

To accomplish this task, a network device must identify traffic by classifying packets, and must have queues and mechanisms for serving them.
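One simple way to serve such queues is strict priority: latency-critical packets always leave first, and everything else waits. The Python sketch below illustrates that idea with the standard `heapq` module. It is an assumption made for the example, since real devices may use other queue-servicing disciplines, and the `PriorityScheduler` class is hypothetical.

```python
import heapq
import itertools


class PriorityScheduler:
    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker keeps arrival order per class

    def enqueue(self, packet, latency_sensitive):
        rank = 0 if latency_sensitive else 1  # lower rank is served first
        heapq.heappush(self._heap, (rank, next(self._order), packet))

    def dequeue(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


scheduler = PriorityScheduler()
scheduler.enqueue("backup-1", latency_sensitive=False)
scheduler.enqueue("voice-1", latency_sensitive=True)
scheduler.enqueue("backup-2", latency_sensitive=False)
scheduler.enqueue("voice-2", latency_sensitive=True)

sent = []
while len(scheduler):
    sent.append(scheduler.dequeue())
```

The voice packets are forwarded ahead of the backup packets even though they arrived later, while packets of the same class keep their arrival order.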

Traffic handling mechanisms

Traffic handling mechanisms include:

- 802.1p;

- Differentiated services per-hop behaviors (diffserv PHB);

- Integrated services (intserv);

- ATM, etc.

Most local area networks are based on IEEE 802 technology including Ethernet, token-ring, etc. 802.1p is a traffic processing mechanism to support QoS in such networks.

802.1p defines a field (at Layer 2 of the OSI networking model) in the 802 packet header that can carry one of eight priority values. Typically, hosts or routers sending traffic onto the local network mark each packet with a particular priority value. Networking devices such as switches, bridges, and hubs are expected to handle packets accordingly using queuing mechanisms. The scope of 802.1p is limited to the local area network (LAN). As soon as a packet leaves the LAN (by crossing an OSI Layer 3 device), the 802.1p priority is removed.

Diffserv is a Layer 3 mechanism. It defines a field in the Layer 3 header of IP packets called the diffserv codepoint (DSCP).

Intserv is a whole set of services that defines a guaranteed service and a controlled-load service. The guaranteed service promises to carry some amount of traffic with measurable and bounded latency. The controlled-load service agrees to carry some amount of traffic with "light network congestion". These are measurable services in the sense that they are defined to provide measurable QoS to a specific amount of traffic.

Because ATM technology fragments packets into relatively small cells, it can offer very low latency. If a packet needs to be sent urgently, the ATM interface will always become free for transmission within the time it takes to transmit one cell.

QoS has many more complex mechanisms that make this technology work. Let's note just one important point: for QoS to work, the technology must be supported and appropriately configured along the entire transmission path, from the start point to the end point.

One of the most popular trends in today's networking is carrying voice and video over traditional data networks. One of the problems with this kind of traffic is that for it to work properly, video and audio packets must be delivered to the recipient quickly and reliably, without interruptions or excessive delays. At the same time, this type of traffic should not impede the transmission of more traditional data packets.

One possible solution to this problem is QoS. QoS, or quality of service, is a technology for prioritizing data packets. QoS allows you to transmit time-sensitive packets with a higher priority than other packets.

QoS is an industry standard, not a Microsoft standard. However, Microsoft first introduced this QoS standard in Windows 2000. Microsoft's version of QoS has evolved quite a bit since then, but still meets industry standards.

In Windows XP Professional, QoS primarily acts as a bandwidth reservation mechanism. When QoS is enabled, the application is allowed to reserve up to 20% of the total network bandwidth provided by each machine's NIC. However, the amount of network bandwidth that the application reserves is configurable. I'll show you how to change the amount of bandwidth reserved in Part 3.

To see how the reserved bandwidth is used, suppose you have a video conferencing application that requires prioritized bandwidth to function properly. Assuming QoS is enabled for this application, we can say that it reserves 20% of the machine's total bandwidth, leaving 80% of the bandwidth for the rest of the network traffic.

All applications except the video conferencing application use a technique called best-effort delivery. This means that their packets are sent with equal priority on a first-come, first-served basis. On the other hand, video conferencing traffic will always be prioritized over other traffic, but the application will never be allowed to consume more than 20% of all bandwidth.

However, just because Windows XP reserves some of the bandwidth for priority traffic does not mean that applications with normal priority will not be able to use the reserved bandwidth. Although video conferencing applications take advantage of the higher-priority reserved bandwidth, the chances of such applications being in constant use are very small. In this case, Windows allows other applications to use both the reserved and the unreserved bandwidth for best-effort delivery, as long as the applications for which a portion of the network bandwidth is reserved are not running.

As soon as the video conferencing application starts, Windows starts to enforce the reservation. Even so, the reservation is not absolute. Suppose Windows has reserved 20% of the network bandwidth for a video conferencing application, but this application does not need all 20%. In these cases, Windows allows other applications to use the remaining bandwidth, but will continually monitor the needs of the higher priority application. In case the application needs more bandwidth, the bandwidth will be allocated to it up to a maximum value of 20%.
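The behavior described in the last few paragraphs can be summarized as simple arithmetic. The Python sketch below is a simplified model, not Windows code: the `allocate` function and its parameters are hypothetical, and it assumes a single priority application competing with best-effort traffic on one link.

```python
def allocate(link_kbps, reserved_pct, priority_demand_kbps, best_effort_demand_kbps):
    """Split link capacity between a reserved-priority app and best-effort traffic."""
    reserved_cap = link_kbps * reserved_pct / 100
    # The priority application gets what it asks for, up to the reservation.
    priority = min(priority_demand_kbps, reserved_cap)
    # Best-effort traffic may use everything the priority application leaves.
    best_effort = min(best_effort_demand_kbps, link_kbps - priority)
    return priority, best_effort


idle = allocate(1000, 20, priority_demand_kbps=0, best_effort_demand_kbps=1000)
light = allocate(1000, 20, priority_demand_kbps=100, best_effort_demand_kbps=1000)
heavy = allocate(1000, 20, priority_demand_kbps=500, best_effort_demand_kbps=1000)
```

When the priority application is idle, best-effort traffic can use the whole link; when it needs only part of its reservation, the remainder is lent out; and when it asks for more than 20%, it is capped at the reserved amount.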

As I said, QoS is an industry standard, not a Microsoft technology. As such, QoS is used by Windows, but Windows cannot do the job on its own. For QoS to work, every piece of equipment between the sender and receiver must support QoS. This means that network adapters, switches, routers and all other devices in use need to know about QoS, as well as the operating systems of the recipient and sender.

In case you're wondering, you don't need to install some exotic networking infrastructure to use QoS. Asynchronous Transfer Mode (ATM) is an excellent networking technology for QoS because it is connection-oriented, but you can also use QoS with other technologies such as Frame Relay, Ethernet, and even Wi-Fi (802.11x).

The reason ATM is such an ideal choice for QoS is because it is capable of implementing bandwidth reservation and resource allocation at the hardware level. This type of distribution goes beyond the capabilities of Ethernet and similar networking technologies. This does not mean that QoS cannot be used. It just means that QoS has to be applied differently than in an ATM environment.

In an ATM environment, resources are allocated immediately, at the physical device level. Since Ethernet and other similar technologies cannot allocate resources in this way, technologies of this type are based on prioritization rather than true resource allocation. This means that bandwidth reservations occur at the higher layer of the OSI model. Once the bandwidth has been reserved, the higher priority packets are sent first.

One point to consider if you are going to apply QoS over Ethernet, Wi-Fi, or other similar technologies is that such technologies are connectionless. This means that there is no way for the sender to check the status of the receiver or the status of the network between the sender and the receiver. This, in turn, means that the sender can guarantee that the packets with higher priorities will be sent first, but cannot guarantee that these packets will be delivered within a certain time frame. On the other hand, QoS is capable of providing this kind of assurance on ATM networks, since ATM is a connection-oriented technology.

Windows 2000 vs. Windows Server 2003

Earlier I talked about how Microsoft first introduced QoS in Windows 2000, and how its implementation of QoS has evolved significantly since then. Therefore, I want to talk a little about the differences between QoS in Windows 2000 and in Windows XP and Windows Server 2003 (where QoS works in much the same way).

In Windows 2000, QoS was based on the Intserv architecture, which is not supported in Windows XP or Windows Server 2003. The reason Microsoft chose not to use such an architecture was that the underlying API was difficult to use and the architecture had problems with scaling.

Some organizations still use Windows 2000, so I decided to give you some information on how the Windows 2000 QoS architecture works. Windows 2000 uses a protocol called RSVP to reserve bandwidth resources. When bandwidth is requested, Windows needs to determine when packets can be sent. To do this, Windows 2000 uses a signaling protocol called SBM (Subnet Bandwidth Manager) to tell the sender that it is ready to receive packets. The Admission Control Service (ACS) verifies that sufficient bandwidth is available and then either grants or denies the bandwidth request.

802.1p signaling

As I said in the previous part, 802.1p signaling is carried out at the second layer of the OSI model. This layer is used by physical devices such as switches. Layer 2 devices that support 802.1p can view the priority markings that are assigned to packets and then group those packets into separate traffic classes.

On Ethernet networks, priority marking is included in VLAN tags. VLANs and VLAN tags are defined by the 802.1Q standard, which defines a three-bit priority field, but does not really define how this priority field should be used. This is where the 802.1P standard comes into play.

802.1P defines various priority classes that can be used in conjunction with the 802.1Q standard. Ultimately 802.1Q leaves it up to the administrator to choose the priority marking, so technically you don't have to follow 802.1P's guidelines, but 802.1P seems to be the one that everyone chooses.

While the idea of using 802.1P standards to provide Layer 2 marking probably sounds like pure theory, it can actually be defined using Group Policy settings. The 802.1P standard provides eight different priority classes (ranging from 0 to 7). Higher priority packets are processed by QoS with higher delivery priority.

By default, Microsoft assigns the following priority markings:

But as I mentioned earlier, you can change these priorities by modifying various Group Policy settings. To do this, open the Group Policy Editor and navigate in the console tree to Computer Configuration \ Administrative Templates \ Network \ QoS Packet Scheduler \ Layer-2 priority value. As you can see in Figure A, there are Group Policy settings corresponding to each of the priority labels I listed above. You can assign your own priority marking levels to any of these service types. Keep in mind, however, that these Group Policy settings only apply to hosts running Windows XP, 2003, or Vista.

Figure A: You can use the Group Policy Editor to customize second-level priority marking.

Differentiated Services

As I explained in the previous article, QoS performs priority marking at the second and third layers of the OSI model. This ensures that priorities are taken into account throughout the packet delivery process. For example, switches operate at the second layer of the OSI model, but routers typically operate at the third layer. Thus, if packets were only using 802.1p priority marking, the switch would prioritize these packets, but these priorities would be ignored by the network routers. To counter this, QoS uses the Differentiated Services protocol (Diffserv) to prioritize traffic on the third layer of the OSI model. Diffserv marking is included in the IP headers of packets using TCP/IP.

The architecture used by Diffserv is described in RFC 2475, while RFC 2474 defines the Differentiated Services field itself for both IPv4 and IPv6 headers.

An interesting point about RFC 2474's treatment of IPv4 is that even though the octet has been completely redefined, it remains backward compatible with the original Type of Service definition in RFC 791. This means that older routers that do not support the new specification can still recognize the assigned priorities.

The current Diffserv implementation uses the Type of Service (TOS) octet of the IP header to store the Diffserv value (called the DSCP value). Within this octet, the first six bits hold the DSCP value, and the last two bits are unused. These markings are backward compatible with the older specification because RFC 791 used the first three bits of the same octet to carry IP precedence information. Although DSCP values are six bits long, the first three bits still reflect the IP precedence.
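The bit layout described above can be made concrete with a little arithmetic. The following is a minimal sketch, not code taken from Windows: the function names are invented for illustration, and the sample value 40 is arbitrary. It shows how a six-bit DSCP value sits in the upper bits of the octet and how its top three bits can still be read as a precedence value by older equipment.

```python
def dscp_to_tos(dscp: int) -> int:
    """Place a 6-bit DSCP value in the upper six bits of the TOS octet."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0-63")
    return dscp << 2  # the two low-order bits are left at zero


def tos_to_dscp(tos: int) -> int:
    """Recover the DSCP value from a TOS octet."""
    return (tos & 0xFF) >> 2


def precedence_bits(dscp: int) -> int:
    """Return the top three bits, which older devices read as a precedence value."""
    return dscp >> 3


if __name__ == "__main__":
    dscp = 40  # 0b101000, an arbitrary sample value
    print(dscp_to_tos(dscp))      # 160
    print(precedence_bits(dscp))  # 5
```

Because the precedence value is simply the DSCP value shifted right by three bits, any two DSCP values that share their top three bits look identical to a device that only understands the older marking.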

As with 802.1p tagging that I demonstrated earlier, you can configure Diffserv priorities through various Group Policy settings. Before I show you how, I will introduce the standard Diffserv priorities used in Windows:

You may have noticed that Diffserv priority markings use a completely different range than 802.1P. Instead of supporting a range of 0-7, Diffserv supports a priority marking range of 0 to 63, with larger numbers having higher priorities.

As I said earlier, Windows allows you to define Diffserv priority marking using Group Policy settings. Keep in mind, however, that some more advanced routers will assign their own Diffserv values to packets, regardless of what Windows assigns.

With this in mind, you can configure Diffserv priority marking by opening the Group Policy Editor and navigating to Computer Configuration \ Administrative Templates \ Network \ QoS Packet Scheduler in the console tree.

If you look at Figure B, you will notice that there are two DSCP-related nodes beneath the QoS Packet Scheduler node. One of these nodes allows you to assign DSCP priority markings for packets that conform to the flowspec, and the other allows you to set DSCP priority markings for non-conforming packets. The settings themselves are similar for both nodes, as shown in Figure C.

Figure B: Windows manages DSCP priority markings separately for packets that match the flowspec and those that don't.

Figure C: You can manually assign DSCP priority marking for different types of services.

Miscellaneous Group Policy Settings

If you look at Figure B, you will notice that there are three Group Policy settings that I did not mention. I wanted to briefly mention what these parameters are and what they do, for those of you who might be interested.

The Limit Outstanding Packets setting is essentially a throttling threshold. If the number of outstanding packets reaches the configured value, QoS denies any additional bandwidth allocations on the network adapter until the number falls back below the maximum allowed threshold.

The Limit Reservable Bandwidth parameter controls the percentage of total bandwidth that QoS-enabled applications can reserve. By default, QoS-enabled applications can reserve up to 80 percent of the network bandwidth. Of course, any portion of the bandwidth that is reserved that is not currently being used by QoS applications can be used by other applications.

The Set Timer Resolution parameter controls the minimum time units (in microseconds) that the QoS packet scheduler will use to schedule packets. Essentially, this setting controls the maximum rate at which packets can be queued for delivery.

QoS and modems

In this age of almost universal availability of broadband technologies, it seems strange to talk about modems. However, there are still many small businesses and home users who use modems as a mechanism to connect to the Internet. Recently, I even saw a large corporation using modems to communicate with satellite offices that are located in remote locations where broadband technology is not available.

Of course, the biggest problem with using modems is the limited bandwidth they have. A less obvious but equally important issue is that users generally do not change their online behavior when using dial-up connections. Of course, users may not feel much like downloading large files when connected to the Internet via a modem, but the rest of the user behavior remains the same as if they were connected via a broadband connection.

Typically, users don't worry too much about keeping Microsoft Outlook open all the time, or browsing while files are downloading in the background. Some users also keep their instant messaging system open at all times. The problem with this type of behavior is that each of these applications or tasks consumes a certain amount of bandwidth on your internet connection.

To see how QoS can help, let's take a look at what happens under normal circumstances when QoS is not used. Usually, the first application that tries to access the Internet has free rein over the connection. This does not mean that other applications cannot use the connection, but rather that Windows assumes no other application will be using it.

Once the connection is established, Windows starts dynamically adjusting the TCP receive window size. The TCP receive window size is the amount of data that can be sent before waiting for an acknowledgment that the data has been received. The larger the TCP receive window, the more data the sender can transmit before waiting for confirmation of successful delivery.

The TCP receive window size must be tuned carefully. If TCP's receive window is too small, efficiency will suffer, as TCP requires very frequent acknowledgments. However, if the TCP receive window is too large, then the machine may transmit too much data before it knows that there was a problem during the transfer. As a result, a large amount of data is required to be retransmitted, which also affects efficiency.
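The trade-off can be put into numbers with two standard rules of thumb: a sender that may have at most one window of data in flight per round trip cannot exceed window / round-trip time, and the window needed to keep a link busy is link speed × round-trip time. The sketch below is illustrative only; the function names and the sample figures (an 8 KB window, a 56 kbps modem, a 200 ms round trip) are assumptions, not values from the article.

```python
def max_throughput_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on throughput when one window is in flight per round trip."""
    if rtt_seconds <= 0:
        raise ValueError("round-trip time must be positive")
    return window_bytes * 8 / rtt_seconds


def window_to_fill_link_bytes(link_bps: float, rtt_seconds: float) -> float:
    """Window size (bandwidth-delay product) needed to keep the link busy."""
    return link_bps * rtt_seconds / 8


if __name__ == "__main__":
    # Assumed sample figures, chosen only to illustrate the arithmetic.
    print(max_throughput_bps(8192, 0.2))          # ceiling for an 8 KB window
    print(window_to_fill_link_bytes(56_000, 0.2))  # window that fills a 56 kbps link
```

With these sample figures the modem link is filled by a window of roughly 1,400 bytes, so a much larger window only increases the amount of data that may have to be retransmitted after a problem.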

When an application starts using a dial-up Internet connection, Windows dynamically adjusts the TCP receive window size as it sends packets. The goal of Windows here is to achieve a stable state in which the TCP receive window size is optimally tuned.

Now, suppose the user opens a second application that also requires an Internet connection. When it does so, Windows initiates the TCP slow start algorithm, which is the algorithm responsible for adjusting the TCP receive window size to the optimal value. The problem is that the connection is already in use by the application that was started earlier. This affects the second application in two ways. First, the second application takes much longer to reach the optimal TCP receive window size. Second, the transfer rate for the second application will always be lower than the transfer rate for the application that was started first.

The good news is that you can avoid these problems on Windows XP and Windows Server 2003 simply by running the QoS Packet Scheduler. The QoS Packet Scheduler will then automatically use a technique called Deficit Round Robin whenever Windows detects a slow connection speed.

Deficit Round Robin works by dynamically creating separate queues for each application that requires Internet access. Windows serves these queues in a round robin fashion that dramatically improves the efficiency of all applications that need to access the Internet. If you're curious, Deficit Round Robin is also available in Windows 2000 Server, but it doesn't automatically turn on.
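To make the idea concrete, here is a minimal sketch of the textbook Deficit Round Robin algorithm. It is not the Windows implementation, whose internals the article does not describe; the flow names, packet sizes and quantum are invented for illustration. Each backlogged queue earns a fixed credit (the quantum) per round and may send packets only while the packet at the head of its queue fits within the accumulated credit.

```python
from collections import deque


def deficit_round_robin(queues, quantum):
    """Return the order in which packets are sent.

    queues  -- dict mapping a flow name to a list of packet sizes in bytes
    quantum -- bytes of credit each backlogged flow earns per round
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    pending = {name: deque(sizes) for name, sizes in queues.items()}
    deficit = {name: 0 for name in pending}
    sent = []

    while any(pending.values()):
        for name, packets in pending.items():
            if not packets:
                continue
            deficit[name] += quantum
            # Send packets while the head of the queue fits in the credit.
            while packets and packets[0] <= deficit[name]:
                size = packets.popleft()
                deficit[name] -= size
                sent.append((name, size))
            if not packets:
                deficit[name] = 0  # an emptied queue does not bank credit
    return sent


if __name__ == "__main__":
    # Hypothetical flows: a bulk download, a mail client, an instant messenger.
    flows = {"download": [1500, 1500, 1500], "mail": [500, 500], "im": [100]}
    for name, size in deficit_round_robin(flows, quantum=500):
        print(name, size)
```

In this example the small mail and instant-messaging packets go out in the first two rounds instead of waiting behind the whole download, which is the behavior that helps on a slow link.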

Internet connection sharing

In Windows XP and Windows Server 2003, QoS also facilitates Internet connection sharing. As you probably know, Internet connection sharing is a simplified way of creating a NAT-based router. The computer to which the Internet connection is physically connected acts as a router and DHCP server for other computers on the network, thereby providing them with access to the Internet through this host. Internet connection sharing is typically only used on small, peer-to-peer networks that lack domain infrastructure. Large networks typically use physical routers or routing and remote access services.

In the section above, I explained how Windows dynamically adjusts the TCP receive window size. However, this dynamic adjustment can cause problems when sharing an Internet connection. The reason is that connections between computers on a local network are usually relatively fast. Typically, such a connection is 100 Mbps Ethernet or an 802.11g wireless connection. While these types of connections are far from the fastest, they are much faster than most Internet connections available in the United States. This is where the problem lies.

The client computer needs to communicate over the Internet, but it cannot do so directly. Instead, it uses the Internet Connection Sharing host as a gateway. When Windows calculates the optimal TCP receive window size, it does so based on the speed of the connection between the local machine and the Internet Connection Sharing host. The difference between the amount of data that the local machine can actually receive from the Internet and the amount that it thinks it can receive, based on the speed of its link to the Internet Connection Sharing host, can cause problems. More specifically, the difference in connection speeds can cause data to back up in the queue feeding the slow connection.

This is where QoS comes into play. If you install the QoS Packet Scheduler on the Internet Connection Sharing host, that host will override the TCP receive window size. This means that the Internet Connection Sharing host will set the TCP receive window size for local hosts to the value they would use if they were connected directly to the Internet. This fixes the problems caused by mismatched network connection speeds.

Conclusion

In this article series, I have covered QoS and how it can be used to shape traffic flow across various types of network connections. As you can see, QoS can make a network perform much more efficiently by shaping traffic so that it takes advantage of lulls in network congestion and ensures faster delivery of higher-priority traffic.

Brien Posey

The QoS myth

Nearly everyone has read a Windows XP FAQ at least once, and so everyone knows about the supposedly harmful Quality of Service, or QoS for short. When configuring the system, the advice goes, you should disable it, because by default it limits network bandwidth by 20%, and the same problem supposedly exists in Windows 2000 as well.

Here is a typical example:

"Q: How do I completely disable the QoS (Quality of Service) service? How do I configure it? Is it true that it limits network speed?

A: Indeed, by default Quality of Service reserves 20% of the channel bandwidth for its own needs (on any channel, whether a 14,400 bps modem or gigabit Ethernet). Moreover, even if you remove the QoS Packet Scheduler service from the connection's Properties, this bandwidth is not released. You can release the bandwidth, or simply configure QoS, as follows. Launch the Group Policy applet (gpedit.msc). In Group Policy, find Local Computer Policy and click Administrative Templates. Select Network - QoS Packet Scheduler. Enable Limit reservable bandwidth. Now reduce the Bandwidth limit from 20% to 0%, or simply disable it. If you wish, you can also configure other QoS parameters here. To activate the changes, you just need to reboot."

20% is, of course, a lot. Truly, Microsoft "must die". Statements of this kind wander from FAQ to FAQ, from forum to forum, from publication to publication, and are used in all kinds of "tweaks", programs for "tuning" Windows XP (by the way, open "Group Policy" and "Local Security Policy", and no "tweak" can match them in the wealth of customization options). Unfounded claims of this kind need to be debunked carefully, which is what we will now do using a systematic approach. That is, we will study the issue thoroughly, relying on official primary sources.

What is network quality of service?

Let's adopt the following simplified definition of a networked system. Applications run on hosts and communicate with each other. Applications hand data to the operating system for transmission over the network. Once the data has been handed to the operating system, it becomes network traffic.

Network QoS relies on the network's ability to handle this traffic so that the requests of certain applications are guaranteed to be met. This requires a fundamental traffic-handling mechanism that can identify the traffic entitled to special handling and control it.

The QoS functionality is designed to satisfy two network actors: network applications and network administrators. They often have disagreements. The network administrator limits the resources used by a specific application, while the application tries to grab as many network resources as possible. Their interests can be reconciled, taking into account the fact that the network administrator plays a leading role in relation to all applications and users.

Basic QoS parameters

Different applications have different requirements for how their network traffic is handled. Applications are more or less tolerant of latency and traffic loss. These requirements are expressed through the following QoS-related parameters:

Bandwidth - The rate at which traffic generated by the application must be sent over the network.

Latency - The delay that an application can tolerate in the delivery of a data packet.

Jitter - The variation in latency.

Loss - The percentage of data lost.
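Three of these parameters can be computed from simple per-packet measurements. The sketch below is one possible way to do it, under stated assumptions: the function name is invented, the delay samples are made up, and jitter is taken as the mean absolute change between consecutive delays, which is only one of several conventions in use.

```python
def qos_metrics(sent_count, delays_ms):
    """Summarise latency, jitter and loss for one flow.

    sent_count -- number of packets transmitted
    delays_ms  -- delay of each packet that arrived, in arrival order
    """
    if sent_count <= 0:
        raise ValueError("sent_count must be positive")
    received = len(delays_ms)
    loss_percent = 100.0 * (sent_count - received) / sent_count
    mean_latency = sum(delays_ms) / received if received else None
    # Jitter here: mean absolute change between consecutive delays (an assumption).
    changes = [abs(b - a) for a, b in zip(delays_ms, delays_ms[1:])]
    jitter = sum(changes) / len(changes) if changes else 0.0
    return {
        "latency_ms": mean_latency,
        "jitter_ms": jitter,
        "loss_percent": loss_percent,
    }


if __name__ == "__main__":
    # Five packets sent, four arrived; the delays are invented sample data.
    print(qos_metrics(5, [20, 30, 25, 25]))
```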

If infinite network resources were available, then all application traffic could be transmitted at the required rate, with zero latency, zero latency variation, and zero loss. However, network resources are not limitless.

The QoS mechanism controls the allocation of network resources for application traffic to meet its transmission requirements.

Fundamental QoS Resources and Traffic Handling Mechanisms

The networks that connect hosts use a variety of networking devices including host network adapters, routers, switches, and hubs. Each of them has network interfaces. Each network interface can receive and transmit traffic at a finite rate. If the rate at which traffic is directed to an interface is higher than the rate at which the interface is forwarding traffic, congestion occurs.

Network devices can handle the congestion condition by queuing traffic in the device memory (in a buffer) until the congestion is over. In other cases, network equipment can drop traffic to ease congestion. As a result, applications are faced with a change in latency (as traffic is stored in queues on interfaces) or with a loss of traffic.
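Both outcomes, queuing and dropping, show up in even a very small simulation. The following sketch models one interface in discrete time steps with a finite buffer that discards whatever does not fit; the function name, the drop policy and the sample arrival pattern are all assumptions made for illustration.

```python
def simulate_interface(arrivals, service_rate, buffer_size):
    """Simulate one interface over discrete time steps.

    arrivals     -- packets arriving in each time step
    service_rate -- packets the interface can forward per time step
    buffer_size  -- packets the device can hold while congested
    Returns (forwarded, dropped, queue_length_after_each_step).
    """
    queued = forwarded = dropped = 0
    history = []
    for arriving in arrivals:
        queued += arriving
        sent = min(queued, service_rate)  # forward what the link allows
        queued -= sent
        forwarded += sent
        if queued > buffer_size:          # buffer full: drop the excess
            dropped += queued - buffer_size
            queued = buffer_size
        history.append(queued)
    return forwarded, dropped, history


if __name__ == "__main__":
    # A burst of 5 + 5 packets hits an interface that forwards 2 per step.
    print(simulate_interface([2, 5, 5, 0, 0], service_rate=2, buffer_size=4))
    # (10, 2, [0, 3, 4, 2, 0])
```

The growing queue length corresponds to added latency for the packets that wait, and the dropped count corresponds to traffic loss once the buffer is exhausted.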

The ability of network interfaces to forward traffic and the availability of memory to store traffic on network devices (until traffic can be sent further) constitute the fundamental resources required to provide QoS for application traffic streams.

Allocating QoS Resources to Network Devices

Devices that support QoS use network resources intelligently to carry traffic. That is, the traffic of applications that are more tolerant of latencies becomes queued (stored in a buffer in memory), and the traffic of applications that are critical to latency is forwarded on.

To accomplish this task, a network device must identify traffic by classifying packets, and must have queues and mechanisms for serving them.

Traffic handling mechanisms

The traffic handling mechanisms include:

802.1p

Differentiated Services per-hop behaviors (diffserv PHB).

Integrated services (intserv).

ATM, etc.

Most local area networks are based on IEEE 802 technology including Ethernet, token-ring, etc. 802.1p is a traffic processing mechanism to support QoS in such networks.

802.1p defines a field (at Layer 2 of the OSI networking model) in the 802 packet header that can carry one of eight priority values. Typically, hosts or routers mark each packet they send onto the local network, assigning it a certain priority value. Networking devices such as switches, bridges, and hubs are expected to handle the packets accordingly, using queuing mechanisms. The scope of 802.1p is limited to the local area network (LAN). As soon as a packet leaves the LAN (by crossing an OSI Layer 3 device), the 802.1p priority is removed.
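As an illustration of where the eight priority values live, the sketch below packs and unpacks the four-byte 802.1Q tag that carries the priority bits. The layout used (a 0x8100 tag identifier followed by 3 priority bits, 1 drop-eligibility bit and a 12-bit VLAN ID) comes from the 802.1Q standard rather than from this article, and the function names and sample values are invented.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that identifies an 802.1Q tag


def build_vlan_tag(priority: int, vlan_id: int, dei: int = 0) -> bytes:
    """Pack the 4-byte tag: tag identifier followed by the tag control field."""
    if not 0 <= priority <= 7:
        raise ValueError("priority must be 0-7")
    if not 0 <= vlan_id <= 0xFFF:
        raise ValueError("VLAN ID must be 0-4095")
    tci = (priority << 13) | ((dei & 1) << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def read_priority(tag: bytes) -> int:
    """Extract the 3-bit priority from a 4-byte 802.1Q tag."""
    _, tci = struct.unpack("!HH", tag)
    return tci >> 13


if __name__ == "__main__":
    tag = build_vlan_tag(priority=5, vlan_id=100)  # sample values
    print(tag.hex())           # 8100a064
    print(read_priority(tag))  # 5
```

Three bits give exactly the eight priority levels (0 to 7) mentioned above.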

Diffserv is a Layer 3 mechanism. It defines a field in the Layer 3 header of IP packets called the Diffserv codepoint (DSCP).

Intserv is a set of services that defines a guaranteed service and a controlled-load service. The guaranteed service promises to carry a certain amount of traffic with measurable and bounded latency. The controlled-load service agrees to carry a certain amount of traffic as if the network were "lightly loaded". These are measurable services in the sense that they are defined to provide measurable QoS to a specific amount of traffic.

Because ATM technology fragments packets into relatively small cells, it can offer very low latency. If a packet needs to be sent urgently, the ATM interface is always free to transmit within the time it takes to send one cell.

QoS involves many more complex mechanisms that make the technology work. Let's note just one important point: for QoS to work, the technology must be supported and properly configured along the entire path from the starting point to the end point.

For clarity, consider Fig. 1.

We assume the following:

All routers participate in the transmission of the required protocols.

One QoS session, requiring 64 Kbps, is provisioned between Host A and Host B.

Another session, requiring 64 Kbps, is initialized between Host A and Host D.

To simplify the diagram, we assume that the routers are configured so that they can reserve all network resources.

In our case, one 64 Kbps reservation request would reach three routers on the data path between Host A and Host B. Another 64 Kbps request would reach three routers between Host A and Host D. The routers would fulfill these resource reservation requests because they do not exceed the maximum. If, instead, both hosts B and C were to simultaneously initiate a 64 Kbps QoS session with host A, then the router serving these hosts (B and C) would deny one of the connections.
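The accept-or-deny decision described here can be sketched as a per-link bookkeeping exercise. Because Fig. 1 is not reproduced, the topology below is purely hypothetical: a 128 Kbps uplink from Host A, a 64 Kbps branch toward the router serving B and C, and a 64 Kbps branch toward the router serving D and E. The class and link names are likewise invented.

```python
class AdmissionControl:
    """Track reservations per link; admit a session only if every hop has room."""

    def __init__(self, capacity_kbps):
        self.capacity = dict(capacity_kbps)
        self.reserved = {link: 0 for link in self.capacity}

    def request(self, path, kbps):
        # Every link on the path must be able to carry the extra reservation.
        if any(self.reserved[link] + kbps > self.capacity[link] for link in path):
            return False
        for link in path:
            self.reserved[link] += kbps
        return True


# Hypothetical topology and paths (assumptions, not taken from Fig. 1).
LINKS = {"uplink": 128, "branch_bc": 64, "branch_de": 64}
PATH = {
    "B": ["uplink", "branch_bc"],
    "C": ["uplink", "branch_bc"],
    "D": ["uplink", "branch_de"],
}

if __name__ == "__main__":
    net = AdmissionControl(LINKS)
    print(net.request(PATH["B"], 64))  # True: A-B session admitted
    print(net.request(PATH["D"], 64))  # True: A-D uses a different branch

    net = AdmissionControl(LINKS)
    print(net.request(PATH["B"], 64))  # True
    print(net.request(PATH["C"], 64))  # False: the shared branch is already full
```

A denied request leaves the existing reservations untouched, so the session that was admitted first keeps its guarantee.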

Now suppose the network administrator turns off QoS processing on the bottom three routers serving hosts B, C, D, and E. In this case, requests for resources up to 128 Kbps would be satisfied regardless of the location of the participating host. However, the quality guarantees would be weak, because traffic for one host could degrade traffic for another. QoS could be maintained if the top router limited all requests to 64 Kbps, but this would result in inefficient use of network resources.

On the other hand, the bandwidth of all network connections could be increased to 128 Kbps. But the increased bandwidth will only be used when hosts B and C (or D and E) are simultaneously requesting resources. If this is not the case, then network resources will again be used inefficiently.

Microsoft QoS Components

Windows 98 contains only user-level QoS components including:

Application components.

GQoS API (part of Winsock 2).

QoS service provider.

The Windows 2000 / XP / 2003 operating system contains all of the above and the following components:

Resource Reservation Protocol Service Provider (Rsvpsp.dll) and RSVP Services (Rsvp.exe) and QoS ACS. Not used in Windows XP, 2003.

Traffic control (Traffic.dll).

Generic Packet Classifier (Msgpc.sys). The packet classifier identifies the class of service to which a packet belongs. The packet is then delivered to the appropriate queue. The queues are managed by the QoS Packet Scheduler.

QoS Packet Scheduler (Psched.sys). Defines the QoS parameters for a specific data flow. Traffic is tagged with a specific priority value. The QoS Packet Scheduler determines the queuing schedule for each packet and handles competing requests between queued packets that need simultaneous access to the network.

The diagram in Figure 2 illustrates the protocol stack, Windows components, and how they interact on a host. Items used in Windows 2000 but not used in Windows XP / 2003 are not shown in the diagram.

Applications sit at the top of the stack. They may or may not be QoS-aware. To harness the full power of QoS, Microsoft recommends that applications use Generic QoS API calls. This is especially important for applications that require high-quality service guarantees. Several utilities can be used to invoke QoS on behalf of applications that are not QoS-aware; they work through the traffic control API. NetMeeting, for example, uses the GQoS API. For such applications, however, quality is not guaranteed.

The last nail

The theoretical points above do not give a clear-cut answer to the question of where the notorious 20% goes (which, I note, nobody has accurately measured yet). Based on the foregoing, no such loss should occur. But opponents put forward a new argument: the QoS design is good, but the implementation is botched, so the 20% gets eaten up after all. The problem has apparently exasperated the software giant too, since it separately refuted such claims long ago.

However, let's give the floor to the developers and present selected passages from the article "316666 - Windows XP Quality of Service (QoS) Enhancements and Behavior":

"One hundred percent of the network bandwidth is available for sharing among all programs unless a program explicitly requests prioritized bandwidth. This "reserved" bandwidth is available to other programs if the program that requested it is not sending data.

By default, programs can reserve up to 20% of the underlying link speed on each interface of the computer. If the program that reserved the bandwidth is not sending enough data to use all of it, the unused portion of the reserved bandwidth is available for other data streams.

There have been statements in various technical articles and newsgroups that Windows XP always reserves 20% of the available bandwidth for QoS. These statements are wrong."
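The behavior described in the quoted passages comes down to simple arithmetic. The sketch below is only an illustration of that arithmetic, not of how the Packet Scheduler is implemented: the function name and sample figures are invented, and a reservation request above the limit is simply clamped to the limit, which is a simplifying assumption.

```python
def best_effort_bandwidth(link_kbps, reservable_percent, reserved_kbps, in_use_kbps):
    """Bandwidth left for ordinary traffic on one interface.

    reserved_kbps -- what QoS-aware programs have asked to reserve
    in_use_kbps   -- how much of that reservation they are actually sending
    """
    cap = link_kbps * reservable_percent / 100
    granted = min(reserved_kbps, cap)  # simplification: clamp to the limit
    in_use = min(in_use_kbps, granted)
    return link_kbps - in_use


if __name__ == "__main__":
    # Sample figures for a 1000 Kbps link with the 20% default limit.
    print(best_effort_bandwidth(1000, 20, 0, 0))     # nothing reserved
    print(best_effort_bandwidth(1000, 20, 200, 50))  # reservation partly used
    print(best_effort_bandwidth(1000, 20, 500, 500)) # reservation fully used
```

Only the last case takes 20% away from other traffic, and only while a QoS-aware program is actually sending at the full reserved rate.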

If something is still eating 20% of your bandwidth, then all I can advise is to keep using every kind of "tweak" and buggy network driver, and you will lose even more than that.

That's all. QoS myth, die!

Yuri Trofimov,

Calculation of the stability characteristics of the operational communication system

Calculation of the stability characteristics of the operational communication system PDF creator software

PDF creator software Discrete channel. Interference in communication channels

Discrete channel. Interference in communication channels How to use QoS to ensure the quality of Internet access Where is the qos packet scheduler

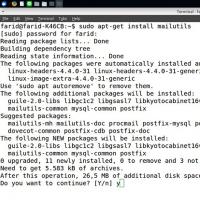

How to use QoS to ensure the quality of Internet access Where is the qos packet scheduler Basic Sendmail Installation and Configuration on Ubuntu Server

Basic Sendmail Installation and Configuration on Ubuntu Server Install VPN in Ubuntu Vpn ubuntu connection

Install VPN in Ubuntu Vpn ubuntu connection Distributed System Architecture Large Scale Cloud IoT Platform

Distributed System Architecture Large Scale Cloud IoT Platform